10 Tech Innovations From Arm in July 2025 That Are Shaping the Future of AI and Cloud Development

From cloud-native AI to faster mobile inference, July 2025 was packed with innovation across the Arm ecosystem. This roundup explores how developers, startups, and global partners are pushing performance boundaries using Arm-powered tools and platforms.

Whether you’re optimizing edge AI, enhancing 3D rendering, or modernizing software security, these highlights showcase how Arm continues to enable high-efficiency computing everywhere.

How SiteMana Cut ML Costs and Latency by Migrating to Arm-Based AWS Graviton3

SiteMana, an AI-powered martech platform, boosted the performance and efficiency of its real-time machine learning (ML) pipeline by migrating from x86 (AWS t3.medium) to Arm-based AWS Graviton3 (m7g.medium) instances. The switch eliminated CPU credit throttling, increased network bandwidth 2.5×, reduced TensorFlow inference latency by ~15%, and cut infrastructure costs by ~25%.

Peter Ma, co-founder of SiteMana, shares how Arm’s innovation—featuring Neon SIMD and optimized TensorFlow—helped streamline SiteMana’s high-throughput workloads. The move underscores how Arm-based cloud infrastructure enables scalable, cost-efficient solutions for latency-sensitive, prediction-driven services.

W4 Games Enhances Godot with Arm Performance Studio

In part two of a multi-blog series, Clay John, Rendering Maintainer and Godot Engine Board Member, explores how W4 Games used Arm Performance Studio, namely Streamline, Performance Advisor, and the Mali Offline Compiler, to optimize Godot’s Vulkan renderer for Arm GPUs.

By profiling a real-world scene and addressing fragment shader bottlenecks, the team cut shader overhead and boosted frame rates. The work brings desktop-level rendering closer to mobile performance standards, showcasing how Arm’s tools enable smoother 3D experiences on Arm-powered devices.

How Senior Engineers Coach AI Coding Agents for Better Results

Senior engineers are shifting from hands-on coding to guiding AI coding agents in platforms like Replit and Windsurf. Alex Spinelli, SVP of AI and Developer Platforms and Services at Arm, explains how these large language model–powered agents accelerate development but depend on human expertise to define tasks, enforce standards, and resolve logic issues. This evolving workflow boosts iteration speed and code quality, mirroring Arm’s focus on AI-native tools that elevate developer productivity.

Championing Diversity in STEM with Aston Martin Aramco Formula One™ Team

To mark International Women in Engineering Day, Arm hosted a panel at its Cambridge HQ featuring engineers and leaders from Arm and the Aston Martin Aramco Formula One™ Team. The event spotlighted personal stories of navigating gender bias, building confidence, and balancing careers, and launched a new peer mentoring program to support women in STEM and motorsport. Watch the discussion and hear how these trailblazers are driving progress in engineering and motorsport.

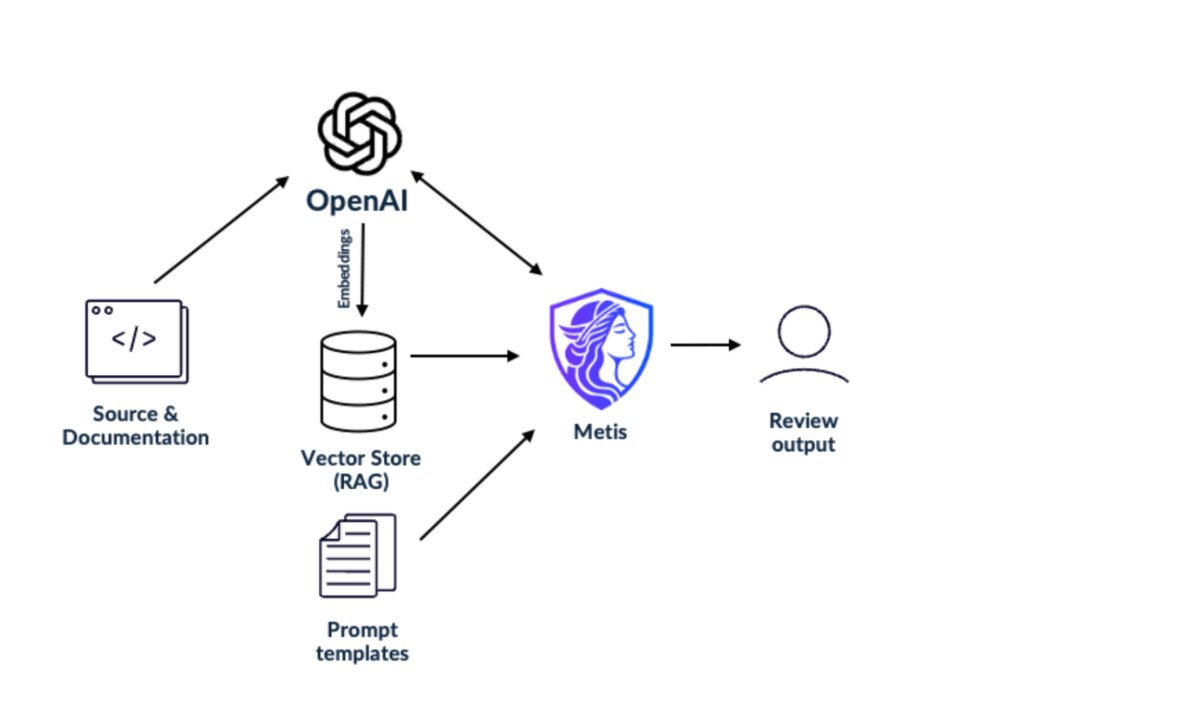

Arm Metis Enhances Software Code Security

Traditional static analysis often misses logic bugs and design flaws. Metis, a new AI-driven tool developed by Arm, brings architectural context into code reviews to uncover issues earlier in the development cycle.

Michalis Spyrou, Staff Software Security Engineer at Arm, explains how Metis supports secure-by-design principles and helps engineers build more resilient software. The blog also highlights how Arm’s innovations are advancing AI-enabled security practices through automation and expert collaboration.

Arm Development Studio 2024.1 is Optimized for Next-Gen Cortex-A and Cortex-X CPUs

Arm Development Studio 2024.1 is now available, delivering a more efficient and secure development environment for the latest Arm-based designs. The release adds support for the new Arm Cortex‑A725 and Cortex‑X925 CPUs, along with enhancements to debugging, semihosting, and performance analysis tools.

Stephen Theobald, Principal Applications Engineer at Arm, shares how these updates help streamline code reviews and accelerate development for next-gen applications.

How Arm SME2 Powers Faster, Smarter AI on Mobile Devices

Arm Scalable Matrix Extension 2 (SME2) is a set of advanced CPU instructions in the Armv9 architecture designed to accelerate matrix multiplications, which are essential to computer vision and generative AI workloads, on mobile devices.

Eric Sondhi, Senior Manager Developer Marketing Strategy at Arm, outlines how SME2 enables advanced generative AI capabilities in mobile apps, such as image captioning, audio generation, and real-time summarization, to run efficiently on-device without requiring developers to modify their code.

These advancements significantly boost performance, as demonstrated by a 6× speedup in chat response times using the Google Gemma 3 model and the XNNPack runtime library. SME2 reflects Arm’s broader strategy to embed AI acceleration directly into the CPU, making mobile AI development more seamless and power-efficient.

SME2 and KleidiAI Drive 30%+ Gains in Edge AI Performance

Gian Marco Iodice, Principal Software Engineer at Arm, reflects on a year of integrating Arm KleidiAI into XNNPack, which unlocks AI acceleration for edge devices without changing a line of code. With support for Int4/Int8 matrix multiplication and dynamic quantization, developers see over 30% performance gains in models like Gemma 2 and Stable Audio Open Small.

Now enhanced by SME2 in Armv9 CPUs, this integration brings high-throughput efficiency to both convolutional and generative AI. It’s a clear step toward Arm’s vision of scalable, portable AI performance across the compute spectrum.
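For readers unfamiliar with the terms, here is a minimal sketch of what Int8 matrix multiplication with dynamic quantization means in principle. It is plain Python for illustration only, not KleidiAI or XNNPack code, and the function names (`quantize_dynamic`, `int8_matmul`) are hypothetical. It assumes symmetric quantization, with the scale for each activation row computed at runtime from the values it sees.

```python
def quantize_dynamic(values):
    """Symmetric int8 quantization with a scale computed at runtime.

    Hypothetical helper for illustration; returns (int8_values, scale).
    """
    max_abs = max((abs(v) for v in values), default=0.0)
    # Avoid division by zero for an all-zero vector.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def int8_matmul(a, b):
    """Multiply a (m x k) by b (k x n), both given as lists of float rows.

    Columns of b (the "weights") are quantized once; rows of a (the
    "activations") are quantized dynamically, one row at a time.
    """
    quantized_cols = [quantize_dynamic(col) for col in zip(*b)]
    result = []
    for row in a:
        q_row, row_scale = quantize_dynamic(row)
        out_row = []
        for q_col, col_scale in quantized_cols:
            # Accumulate in integers, then rescale back to floating point.
            acc = sum(x * y for x, y in zip(q_row, q_col))
            out_row.append(acc * row_scale * col_scale)
        result.append(out_row)
    return result


if __name__ == "__main__":
    activations = [[1.0, -2.0], [0.5, 0.25]]
    weights = [[1.0, 0.0], [0.0, 1.0]]
    print(int8_matmul(activations, weights))
```

The inner loop works entirely on small integers, which is the part that optimized kernels and matrix instructions speed up. The result is an approximation of the floating-point product, with the error bounded by the quantization step.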

How Arm Innovations are Powering Real-Time Safety in Software-Defined Vehicles

Developers can build safe, modular software-defined vehicle (SDV) systems on Arm using functional safety containers and DDS-based real-time communication. Odin Shen, Principal Solutions Architect at Arm, draws on industry‑leading Arm Automotive Enhanced (AE) IP and certified Software Test Libraries to show how containerized workloads on Arm Neoverse platforms support ISO 26262‑compliant architectures with scalable fault isolation. This architecture modularizes workloads, accelerates development workflows, and ensures deterministic safety behavior for software‑defined vehicles.

Zilliz Cloud Accelerates AI with Arm Neoverse and AWS Graviton

To meet the rising demand for scalable, low-latency vector search in AI applications, Zilliz Cloud, a highly performant, scalable, and fully managed vector database platform, migrated its Milvus database to Arm Neoverse-based AWS Graviton CPUs.

Jiang Chen, Head of Ecosystem and Developer Relations at Zilliz, and Ashok Bhat, Senior Product Manager at Arm, describe how this migration resulted in sub-20ms query times, billion-scale indexing, and up to 70% lower infrastructure costs. By combining innovations like AutoIndex, Cardinal, and RaBitQ with Arm’s energy-efficient Neoverse architecture, Zilliz delivers high-throughput search for use cases like RAG, visual search, and anomaly detection without compromising performance.
