Arm Moves Compute Closer to Data

Arm is committed to maximizing the value of Internet of things (IoT) data by enabling compute as close as possible to where data is generated. In achieving this, we focus on three key areas within compute infrastructure: the endpoint, the edge and computational storage.

Why is it so important to move compute closer to data? It comes down to the fact that in so many IoT use cases, the value of data can be measured in milliseconds. From emergency healthcare to monitoring gas flow to predicting traffic collisions, any delay in extracting insight reduces the value of that insight—potentially to zero.

Consider then that the level of compute required to extract that value has traditionally been found only in powerful cloud data centers. With the clock ticking, data must be transferred from endpoint to cloud over potentially thousands of physical miles. The latency introduced in doing so limits that data’s value significantly while putting great strain on the overall infrastructure and increasing energy (and therefore cost) usage. Ultimately, it can make or break the value of a use case.

This problem would only get worse as more and more IoT sensors come online, sending potentially thousands of gigabytes of data per day upstream.

Arm recognizes that for the IoT to succeed in its aims to create a better world using data, the industry must focus its efforts in moving compute closer to data than ever before.

In many cases, that means enabling capable compute on the endpoint device itself. In others, it’s about putting compute where it makes the most sense; be it the edge, within computational storage or elsewhere in the network infrastructure.

The Endpoint

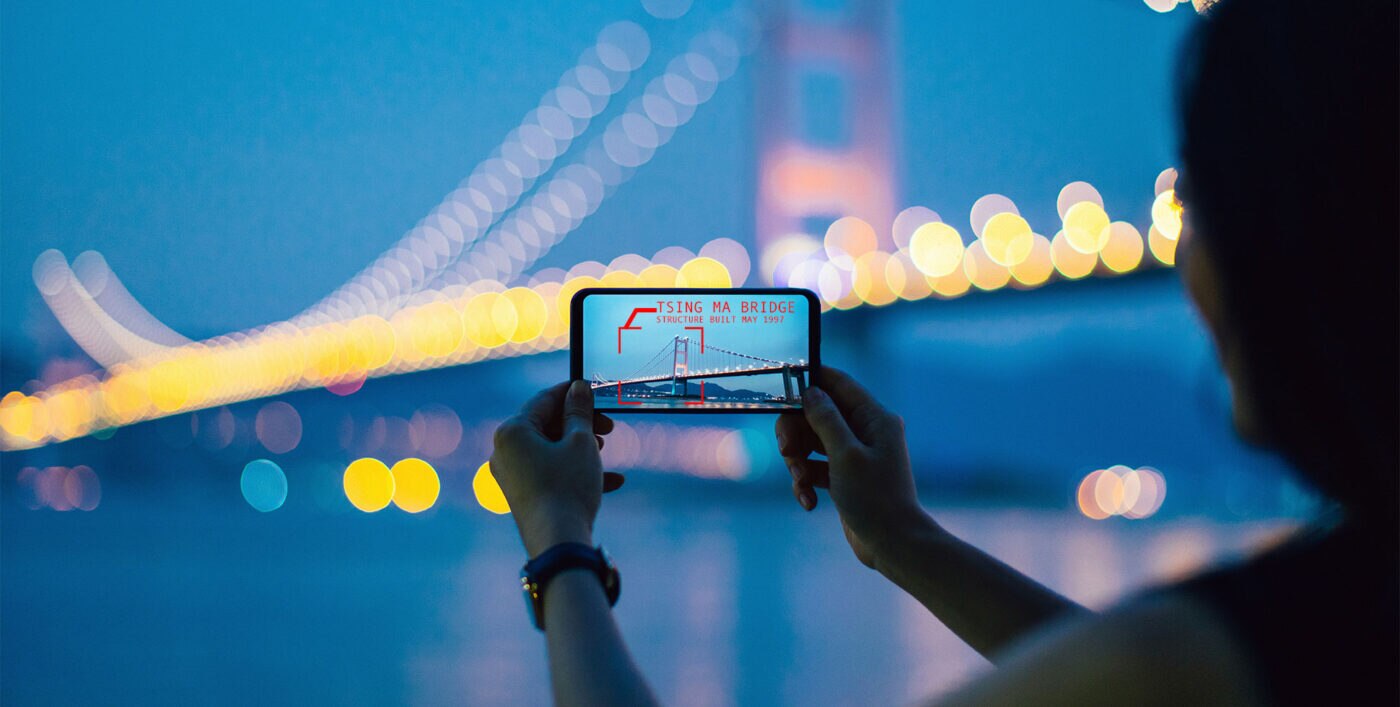

Data begins at the endpoint. It is generated, constantly and in great abundance by industrial sensors, cars, smartphones, security cameras, wearables and hundreds of other endpoint device categories within the IoT.

By giving endpoint devices the ability to process their own data, we ensure the lowest time-to-value of that data and therefore the greatest insight. Arm Cortex processors and Arm Ethos ML processors enable these processes on even the most restricted IoT devices, from traditional workloads to sophisticated endpoint AI and ML algorithms, putting compute closer to data.

In doing so, we also enable new ways to manipulate sensor data at the endpoint. We call this sensor fusion: the ability to aggregate data from multiple sensors within a device (but also potentially data from other nearby devices or less time-critical data from the cloud) and infer ever deeper meaning.

For example, an IoT device used to monitor the health of an industrial electric motor might traditionally monitor the RPM for fluctuations. In industrial applications such as this where machines are under extreme load, these failures can occur almost instantly and cause significant damage to machinery: in predicting that failure, every second counts.

A modern, AI-enabled device might employ sensor fusion, combining RPM data with data from voltage, vibration and sound sensors in real-time in order to pre-empt failure long before it happens.

Endpoint AI: Read more

Discover how Arm is enabling AI for IoT at the endpoint with the Arm Cortex-M55 CPU and Arm Ethos-U55 NPU

The Edge

By putting high-performance edge compute closer to data, we can ensure that even the most complex data workloads can be processed as quickly as possible. Residing within relatively close physical proximity to endpoint devices (for example, in the base station of a cell tower), edge servers reduce latency to near-zero and retain that critical time-to-value while maximizing data privacy and reducing energy costs.

Analyst house Omdia, which defines the edge as any location with a 20-millisecond (ms) round trip time (RTT) or less to the endpoint, predicts that edge server shipments will double by 2024.

Edge servers have a growing role in not only handling the flow of data but processing that data too. In most cases, this new wave of performant edge servers contains much of the same compute power found in cloud data centers, albeit far closer to the endpoint.

We can also extend the benefits of sensor fusion by pooling data from multiple IoT devices: an edge server presiding over the factory in our electric motor example above might aggregate data from multiple motor devices in order to spot patterns and better predict chain-of-event failure in real-time.

Another example is an ANPR/ALPR traffic camera, recording car license plate numbers. The camera itself might employ endpoint AI in order to analyse video frames and identify the license plates, sending the license plate (rather than any video data) to the edge server.

From here, an AI edge server might aggregate license plate data from hundreds of other nearby traffic cameras and compare it with a database of stolen vehicles to track a car as it moves across state, predict the vehicle’s trajectory and suggest roadblock locations to law enforcement.

Edge AI: Read more

Read more about how Arm is bringing intelligence closer to the edge through the Arm Neoverse family

Computational Storage

However, the example above has its own roadblock. Like many IoT devices, the endpoint traffic camera and edge server will contain a storage device. The camera will use this device to store raw traffic video; the edge server will use it to store license plate information, as well as information about the cameras it needs to communicate with and other operational data.

In both cases—and in the case of any compute device that employs external storage—the speed at which data can be processed is limited by the speed at which it can be stored to, and called from, storage.

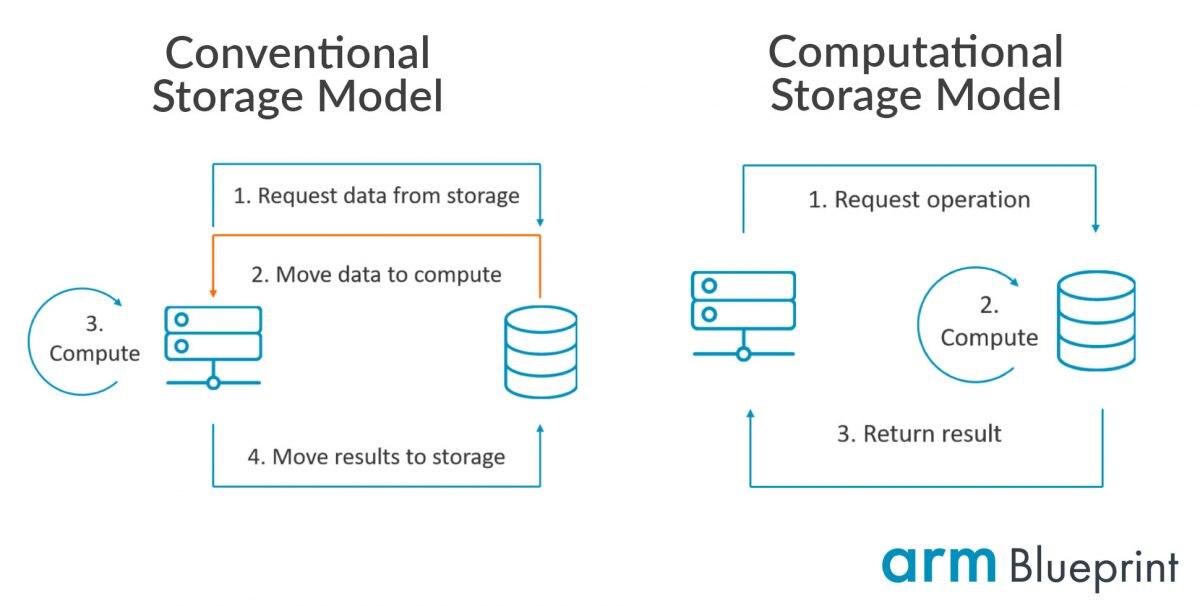

In a conventional compute system, the CPU must request blocks of data from the storage. Only once that block of data has moved into memory can the CPU access the specific element of data it needs. This potentially results in a CPU retrieving large quantities of data from storage into memory in its search for a particular data element.

This bottleneck is addressed in the computational storage model, which adds powerful compute into the storage device itself, putting compute closer to data than ever.

While conventional storage devices already contain a CPU to control storage functions, computational storage adds the capability to process non-storage workloads—such as the processing of data directly on the storage device.

In doing so, the main compute device no longer needs to request blocks of data: it requests an operation to be performed on the data, with only the computed result returned. In the case of an ALPR traffic camera, that might mean requesting all the license plates beginning with a certain letter.

The compute within the storage device would sort through its data and only send the relevant results back to the main compute device, significantly reducing the bandwidth required, and time taken, to process the data. And by reducing the movement of unencrypted data by enabling it to be processed directly on the drive with security in place, computational storage ensures that data remains as secure as possible.

In many cases, the compute performed on a computational storage CPU will be traditional in nature. Yet it’s entirely possible to run ML workloads, too—either natively on the CPU or via an NPU (neural processing unit). Continuing the above example, this might enable the storage device to identify all license plates beginning with a certain letter—but only on blue sedans. The more intelligence we add, the more complex the inference and the more value we can extract from data.

Computational Storage: Read more

Discover how the Arm Cortex-R82 CPU can power the next generation of computational storage devices.

Reducing latency, from miles to microns

Edge compute reduces the time it takes to process data sent upstream by potentially thousands of milliseconds. Endpoint compute reduces this even further by placing the compute on the device itself. And computational storage removes that final bottleneck between where data is computed and where it is stored.

Each of these technologies share the same key benefits: by putting compute closer to data, we gain reduced latency, faster inference, greater value, lower overheads, greater energy efficiency, greater privacy. It’s these fundamental values that Arm has invested in across its full range of IP. In doing so, we’re ensuring that the incredible amount of data being generated by the IoT every day is not wasted: time-to-value of data is maximized, and real insight can be drawn.

Read more about how Arm IP makes it possible to move compute closer to the data than ever, and how computational storage is enabling a new approach to storing and managing data.

Any re-use permitted for informational and non-commercial or personal use only.