Beyond the Newsroom: 10 Latest Innovations from Arm in September 2024

September 2024 has been another innovative month for us, showcasing Arm innovations across a range of domains. From enhancing CPU performance and efficiency to revolutionizing AI software development and optimizing automotive microcontrollers, we continue to push the boundaries of technology and ensure the future of computing is built on Arm.

Here’s a summary of the top 10 technological developments at Arm this month:

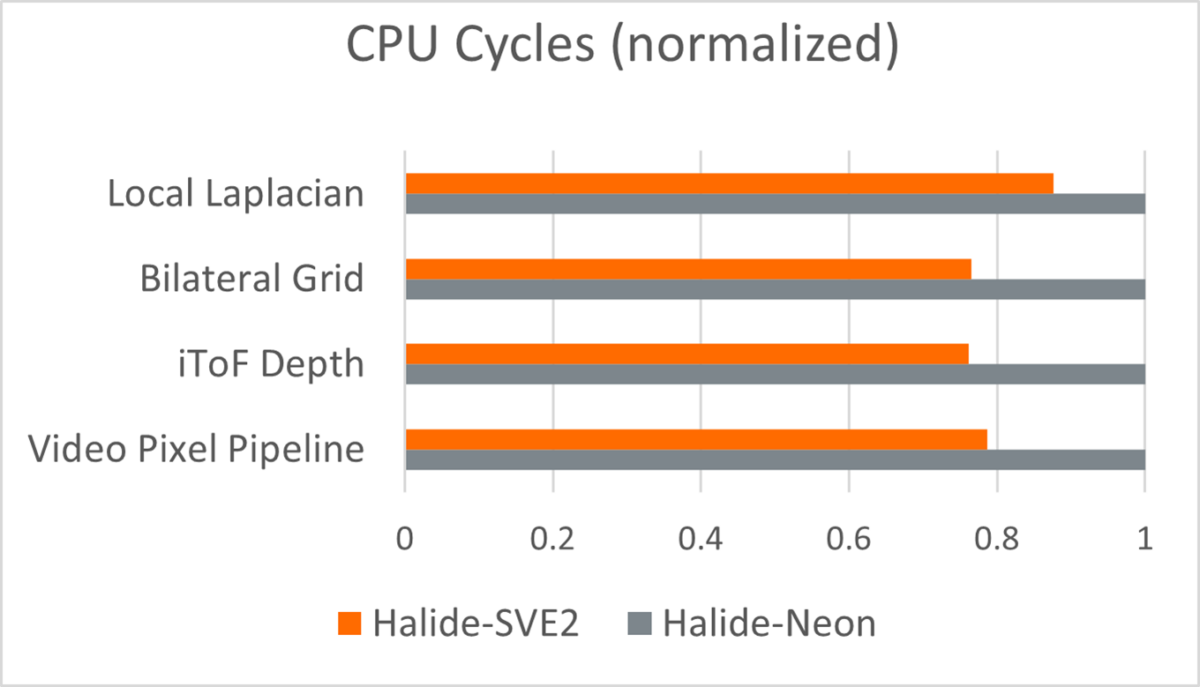

Setting new performance and efficiency standards with Armv9 CPUs and SVE2

Each new generation of Arm CPUs delivers higher performance and efficiency to meet the needs of modern computing tasks. Poulomi Dasgupta, Senior Manager of Consumer Computing, highlights how Armv9 CPUs and their exclusive SVE2 optimizations are part of the latest Arm technological advancements. They enhance performance and efficiency on mobile devices, boosting HDR video decoding by 10% and image processing by 20%. This helps improve battery life and performance for popular apps like YouTube and Netflix.

Likewise, Yibo Cai, Principal Software Engineer, explains how the SVMATCH instruction introduced with SVE2 speeds up multi-token searches, simplifying tasks like parsing CSV files. It reduces the number of operations needed, leading to better performance, as seen in the optimized Sonic JSON decoder. More broadly, SVMATCH makes data processing tasks that search for several tokens at once faster and more efficient.
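To make the idea of a multi-token search concrete, here is a minimal scalar sketch in Python. It is not the SVE2 instruction or its intrinsic, and the function names and delimiter set are illustrative assumptions. It only shows the question a vector match answers in one step: for each byte in a chunk, does it equal any byte in a small set of tokens?

```python
def match_any(chunk: bytes, tokens: bytes) -> list[bool]:
    """Return one flag per byte of `chunk`: True if it equals any byte in `tokens`.

    Scalar reference only. A vector match instruction produces the same kind of
    per-element mask for a whole vector at once, instead of looping.
    """
    token_set = set(tokens)  # iterating over bytes yields ints
    return [b in token_set for b in chunk]


def split_csv_line(line: bytes) -> list[bytes]:
    """Split on commas and line endings using the mask (no quoting support)."""
    mask = match_any(line, b",\r\n")
    fields, start = [], 0
    for i, hit in enumerate(mask):
        if hit:
            fields.append(line[start:i])
            start = i + 1
    fields.append(line[start:])  # trailing field after the last delimiter
    return fields
```

In a vectorized parser, the mask for a full vector of input bytes would come from a single match operation, which is where the reduction in operation count comes from.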

How Arm and Meta are transforming AI software development

Sy Choudhury, Director, AI Partnerships at Meta, explains how Arm and Meta are accelerating AI software development through open innovation and optimizing large language models (LLMs), like Llama, across data centers, smartphones, and IoT devices.

Learn more about the latest Kleidi integrations for PyTorch, ExecuTorch, and more, as well as how to unlock the true performance potential of LLMs with Arm’s cutting-edge innovations – all while simplifying model customization and deployment.

Faster PyTorch Inference using Kleidi on Arm Neoverse

PyTorch is a popular open-source library for machine learning. Ashok Bhat, Senior Product Manager, explains how Arm has improved PyTorch’s inference performance using Kleidi technology, integrated into the Arm Compute Library and KleidiAI library. This includes optimized kernels for machine learning (ML) tasks on Arm Neoverse CPUs.

These optimizations lead to significant performance improvements. For example, using torch.compile can achieve up to 2x better performance compared to Eager mode for various models. Additionally, new INT4 and INT8 kernels can enhance inference performance by up to 18x for specific models like Llama and Gemma. These advancements make PyTorch more efficient on Arm hardware, potentially reducing costs and energy consumption for machine learning tasks.
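For readers unfamiliar with what INT8 kernels operate on, the following is a minimal sketch of symmetric per-tensor INT8 quantization in plain Python. It is a generic illustration, not the KleidiAI or Arm Compute Library implementation; the function names and the single shared scale per tensor are assumptions chosen for brevity.

```python
def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize_int8(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate floats from the integer codes."""
    return [q * scale for q in quantized]


def int8_dot(a_q: list[int], a_scale: float, b_q: list[int], b_scale: float) -> float:
    """Integer multiply-accumulate, followed by a single float rescale."""
    acc = sum(x * y for x, y in zip(a_q, b_q))
    return acc * a_scale * b_scale


a_q, a_scale = quantize_int8([1.0, 2.0])
b_q, b_scale = quantize_int8([3.0, 4.0])
approx = int8_dot(a_q, a_scale, b_q, b_scale)  # close to the exact dot product, 11.0
```

The inner loop works entirely on small integers, which is the part that optimized low-precision kernels accelerate; the cost is a small, bounded rounding error per value.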

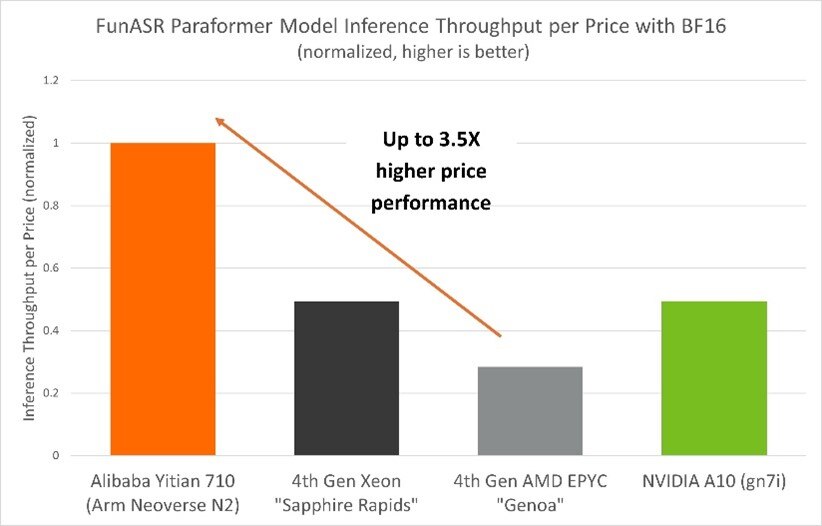

Advancing ASR Technology with Kleidi on Arm Neoverse N2

Automatic Speech Recognition (ASR) technology is widely used in applications like voice assistants, transcription services, call center analytics, and speech-to-text translation. Willen Yang, Senior Product Manager, and Fred Jin, Senior Software Engineer, introduce FunASR, an advanced toolkit developed by Alibaba DAMO Academy.

FunASR supports both CPU and GPU, with a focus on efficient performance on Arm Neoverse N2 CPUs. It excels in accurately understanding various accents and speaking styles. Using bfloat16 fastmath kernels on Arm Neoverse N2 CPUs, FunASR achieves up to 2.4 times better performance compared to other platforms, making it a cost-effective solution for real-world deployments.
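As background on the bfloat16 format mentioned above, here is a minimal sketch that is independent of FunASR and of any Arm kernel. bfloat16 keeps the sign bit and 8 exponent bits of a 32-bit float but only 7 fraction bits, so it can be illustrated by keeping the top 16 bits of the float32 encoding. The helper name is hypothetical, and the sketch truncates rather than rounds, which real conversions may handle differently.

```python
import struct


def to_bfloat16_truncated(x: float) -> float:
    """Encode x as float32, keep only the top 16 bits, and decode the result."""
    (bits,) = struct.unpack("<I", struct.pack("<f", x))
    bits &= 0xFFFF0000  # drop the low 16 fraction bits
    (y,) = struct.unpack("<f", struct.pack("<I", bits))
    return y


approx_pi = to_bfloat16_truncated(3.141592653589793)  # 3.140625
```

The result keeps the range of float32 with roughly two to three significant decimal digits, which is the trade-off that reduced-precision math modes exploit for speed.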

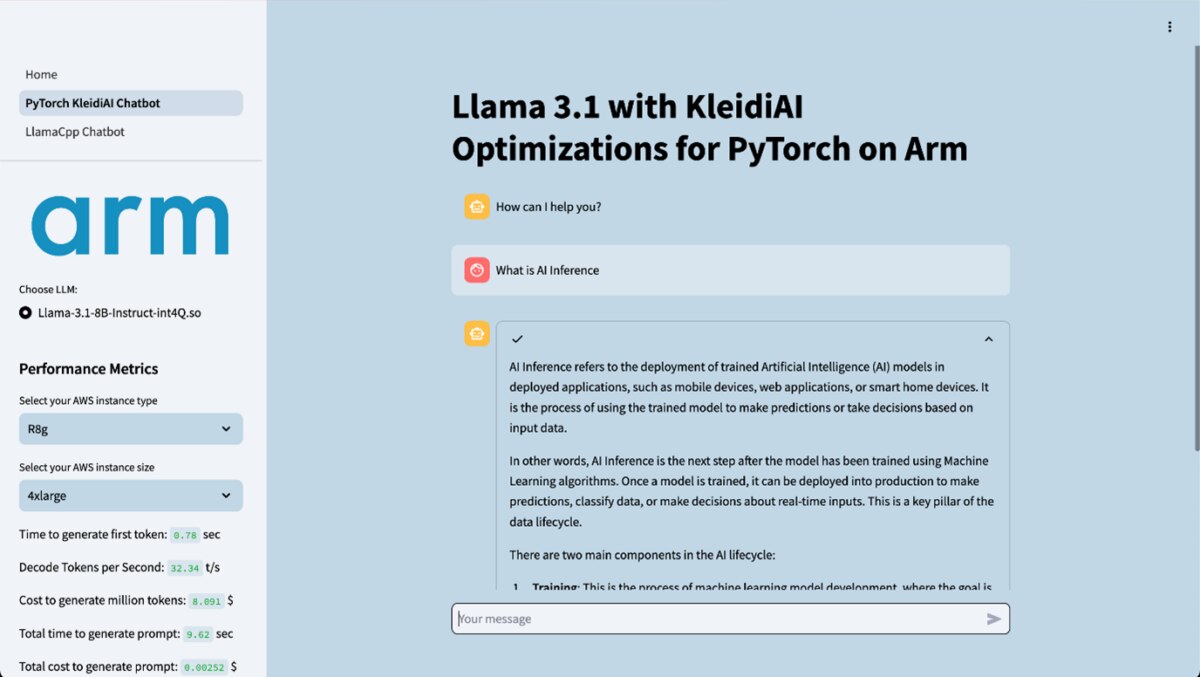

Optimizing LLMs with Arm’s Kleidi Innovation

As Generative AI (GenAI) transforms business productivity, enterprises are integrating Large Language Models (LLMs) into their applications on both cloud and edge. Nobel Chowdary Mandepudi, Graduate Solutions Engineer, discusses how Arm’s Kleidi technology enhances PyTorch for running LLMs on Arm-based processors. This integration simplifies access to Kleidi technology within PyTorch, boosting performance.

The demo application shows significant improvements, such as faster token generation and reduced costs. For example, the time to generate the first token is less than 1 second, and the decode rate is 33 tokens per second, meeting industry standards for interactive chatbots.

These optimizations make running LLMs on CPUs practical and effective for real-time applications like chatbots, leading to more efficient and cost-effective AI solutions. This benefits businesses and developers by reducing latency and operational costs.
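To put the demo's figures in context, the sketch below estimates end-to-end response time from a time to first token and a steady decode rate. The 1-second bound and the 33 tokens per second come from the demo described above; the function name, the 200-token reply length, and the assumption that the decode rate stays constant are illustrative only.

```python
def estimated_response_seconds(ttft_s: float, tokens_per_s: float, n_tokens: int) -> float:
    """Time to first token, plus the remaining tokens at a constant decode rate."""
    if n_tokens < 1:
        raise ValueError("n_tokens must be at least 1")
    if tokens_per_s <= 0:
        raise ValueError("tokens_per_s must be positive")
    return ttft_s + (n_tokens - 1) / tokens_per_s


per_token_ms = 1000 / 33  # roughly 30 ms between tokens
total = estimated_response_seconds(1.0, 33.0, 200)  # about 7 seconds, using 1.0 s as an upper bound
```

At roughly 30 ms per token, text appears faster than most people read, which is why a decode rate in this range feels interactive.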

Demonstrating AI performance uplifts with KleidiAI

Arm’s KleidiAI, integrated with ExecuTorch, enhances AI inference on edge devices. In a demo by Gian Marco Iodice, real-time inference of the Llama 3.1 8B parameter model is showcased on a mobile phone. This demo highlights KleidiAI’s capability to accelerate various AI models across billions of Arm-based devices globally.

KleidiAI uses optimized micro-kernels and advanced Arm CPU instructions like SMMLA and FMLA to deliver efficient AI performance without compromising speed or accuracy.

Meanwhile, Nobel Chowdary Mandepudi demonstrates how KleidiAI boosts AI performance in the cloud using AWS Graviton 4 instances. This demo highlights KleidiAI’s ability to drive AI performance on Arm-powered cloud servers while maintaining energy efficiency.

This side-by-side demo compares PyTorch inference with and without KleidiAI optimizations, showcasing significant improvements in efficiency and speed with the Llama 3.1 8B model. KleidiAI leverages advanced Arm instructions to accelerate generative AI workloads, enhancing text generation and prompt evaluation.

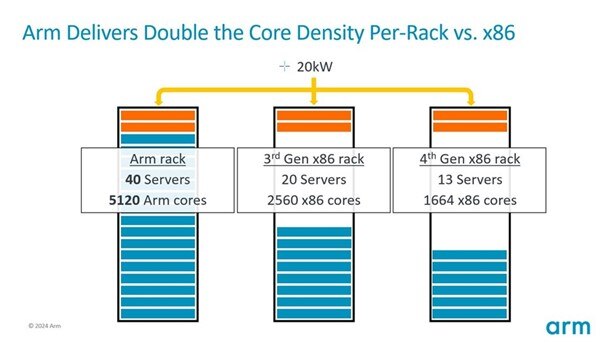

Arm’s growing ecosystem and server integration

The Arm ecosystem is expanding rapidly across all sectors, including Microsoft Copilot+ PCs, cloud services (AWS, Google, Microsoft), and automotive innovations like in-vehicle infotainment (IVI) and advanced driver assistance systems (ADAS). Steve Demski, Director of Product Marketing, highlights the integration of several hundred HPE ProLiant RL300 Gen11 servers, powered by Ampere Altra Max CPUs, into Arm’s Austin datacenter.

These high-performance, power-efficient servers support various workloads and align with Arm’s goal to run at least 50% of its on-premises EDA cluster infrastructure on Arm by 2024. This transition boosts productivity, frees up space and power for future workloads like generative AI, and offers a lower cost-per-core, enabling more efficient budget allocation.

Simplifying automotive microcontrollers with EB tresos Embedded Hypervisor

The EB tresos Embedded Hypervisor by Elektrobit allows multiple virtual machines (VMs) to run on a single automotive microcontroller, supporting various operating systems and applications. Dr. Bruno Kleinert from Elektrobit explains how this technology optimizes resource use, enhances safety, and cuts costs.

The hypervisor technology is crucial for software-defined vehicles (SDVs), enabling flexible updates to vehicle functions. It also supports sustainable design by reducing hardware, cabling, weight, and energy use. The technology is slated to be ready for mass production in October 2024, with safety-approved versions expected in early 2025.

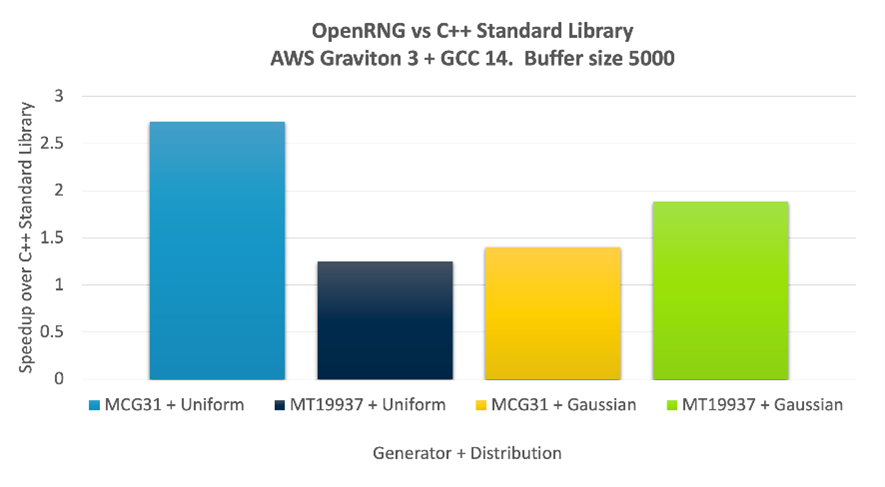

Boosting performance for AI and beyond with OpenRNG

OpenRNG is an open-source Random Number Generator (RNG) library that boosts performance for AI, scientific, and financial applications. Kevin Mooney, Staff Software Engineer, explains how it can replace Intel’s Vector Statistics Library (VSL) and supports various random number generators, including pseudorandom, quasirandom, and true random generators.

OpenRNG significantly improves performance, speeding up PyTorch’s dropout layer by up to 44 times and generating random numbers 2.7 times faster than the C++ standard library. OpenRNG is crucial for applications needing fast and reliable random number generation, like AI, gaming, and financial modeling, ensuring consistent results across systems.
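Dropout is a useful illustration of why random number throughput matters: it draws one random value per activation on every training step. The following is a minimal pure-Python sketch of inverted dropout that uses the standard library's `random` module rather than OpenRNG or PyTorch; the function name and parameters are assumptions for illustration.

```python
import random


def dropout(values: list[float], p: float, rng: random.Random) -> list[float]:
    """Zero each value with probability p and scale survivors by 1 / (1 - p)."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    keep_scale = 1.0 / (1.0 - p)
    # One random draw per element: this is the part a fast RNG accelerates.
    return [v * keep_scale if rng.random() >= p else 0.0 for v in values]


rng = random.Random(0)  # fixed seed so repeated runs produce the same mask
out = dropout([1.0] * 8, p=0.5, rng=rng)
```

Seeding the generator makes the mask reproducible from run to run, a small-scale version of the consistency the article attributes to OpenRNG.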

Pioneering automotive safety with Arm Software Test Libraries

Integrating Arm’s Software Test Libraries (STLs) into automotive systems boosts safety and reliability, meeting ISO 26262 standards. Andrew Coombes, Principal Automotive Software Product Manager, and ETAS explain how Arm STL can be used with Classic AUTOSAR to improve diagnostics, detect faults early, and offer flexible integration. Using Arm STLs with microcontroller hypervisors based on Arm architecture supports mixed-criticality systems and enhances fault mitigation. Meanwhile, achieving Functional Safety certification requires comprehensive strategies, as detailed in a joint white paper by Exida and Arm.

Optimizing video calls with artificial intelligence

While video conferencing is a ubiquitous tool for communication, it is not always a straightforward plug-and-play experience, as adjustments may be needed to ensure a good audio and video setup. Ayaan Masood, a Graduate Engineer, has developed a demo mobile app that uses a neural network model to improve video lighting in low-light conditions.

This app processes video frames in real time, providing smooth and clear visuals. It ensures a professional appearance during video calls, which is essential for remote work and social interactions. The success of this app highlights the potential of AI to solve everyday problems, paving the way for more AI-driven solutions in various fields.

Any re-use permitted for informational and non-commercial or personal use only.