What Tech Innovations Did Arm Deliver in September 2025?

September’s highlights capture the ingenuity shaping tomorrow’s technology, from breakthroughs in formal verification to the latest in AI-driven vehicles and datacenter innovation.

Discover how formal verification is improving floating-point accuracy, why rethinking memory barriers matters for safe concurrency, and how on-device AI agents are transforming intelligent mobility. Explore advances in cloud reliability, Java optimization at scale, and the business impact of chiplet-based design.

Whether you’re building smarter infrastructure or looking for practical performance insights, September’s tech developments reflect the ongoing momentum across the Arm ecosystem.

How On-Device AI Agents Drive the Future of the Intelligent Vehicle

The automotive industry is entering the era of the AI-defined vehicle, and Aaron Ang explains how to build an on-device multimodal assistant for automotive compute. Picture a modular AI agent running entirely on-device, combining voice and vision under the direction of a supervisory system.

By applying techniques such as quantization, retrieval-augmented generation (RAG), and built-in privacy safeguards, this approach enables advanced safety, diagnostics, and control features without dependence on the cloud. The result is a clearer path to vehicles that are both smarter and more secure.
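
The article names quantization without prescribing a scheme here, so the following is only an illustration: a minimal C sketch of symmetric per-tensor int8 quantization, one common way to shrink model weights and activations for on-device inference. The function names and the choice of a single scale per tensor are assumptions, not details taken from Aaron Ang's design.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: symmetric per-tensor int8 quantization (an assumed
   scheme, not necessarily the one used in the article).
   Maps x to q = round(x * 127 / max|x|) and returns the scale s such that
   x is approximately q * s. */
float quantize_int8(const float *x, int8_t *q, size_t n)
{
    assert(x != NULL && q != NULL && n > 0);

    float max_abs = 0.0f;
    for (size_t i = 0; i < n; i++) {
        float a = x[i] < 0.0f ? -x[i] : x[i];
        if (a > max_abs)
            max_abs = a;
    }
    if (max_abs == 0.0f) {            /* all zeros: any positive scale works */
        for (size_t i = 0; i < n; i++)
            q[i] = 0;
        return 1.0f;
    }

    float inv_scale = 127.0f / max_abs;
    for (size_t i = 0; i < n; i++) {
        float t = x[i] * inv_scale;   /* in [-127, 127] up to rounding error */
        int r = (int)t;               /* truncates toward zero */
        float frac = t - (float)r;    /* exact for values of this size */
        if (frac >= 0.5f)             /* round half away from zero */
            r++;
        else if (frac <= -0.5f)
            r--;
        q[i] = (int8_t)r;
    }
    return max_abs / 127.0f;
}

/* Reconstruct approximate floats; per-element error is at most about scale / 2. */
void dequantize_int8(const int8_t *q, float *x, size_t n, float scale)
{
    for (size_t i = 0; i < n; i++)
        x[i] = (float)q[i] * scale;
}
```

For the input `{1.0f, -0.5f, 0.25f}`, the scale is `1/127` and the quantized values are `{127, -64, 32}`. Per-channel scales or asymmetric zero points are common refinements when a single scale loses too much precision.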

Scaling AI with Performance and Efficiency on Arm Neoverse V-Series

Meeting the demands of AI at scale requires compute that can deliver consistent throughput, predictable latency, and energy efficiency all at once. This balance is essential as datacenters face surging workloads, tighter power budgets, and the need for real-time responsiveness.

With the Neoverse V-series CPUs, Arm is redefining how these challenges are addressed in AI infrastructure. Shivangi Agrawal, Product Manager, and Rohit Gupta, Senior Manager, Ecosystem Development, highlight how Neoverse V2 and its successors are designed from the ground up to accelerate inference, training, and next-generation AI services. That design focus enables cloud providers, enterprises, and hyperscalers to scale AI with both performance and efficiency in mind.

Cloud Reliability Using Out-of-Band Telemetry on Arm Neoverse

Reliable, real-time insights into server performance are becoming critical as cloud and datacenter operators look to maximize efficiency and uptime at scale. Samer El-Haj-Mahmoud, Distinguished Engineer at Arm, and Tim Lewis of Insyde Software share how they developed a proof of concept for out-of-band (OOB) telemetry on Arm Neoverse servers. Their approach enables continuous monitoring of key hardware metrics, such as thermal and power data, independently of the operating system, unlocking new levels of fleet-scale visibility and management. Their work shows how OOB telemetry could transform the way operators manage modern infrastructure.

Optimizing Java at Scale by Unlocking Code Cache Efficiency on Arm Neoverse

Why does code cache performance matter at scale? In large Java deployments on Arm Neoverse servers, subtle inefficiencies can ripple into noticeable impacts on throughput, latency, and resource utilization.

Yanqin Wei, Principal Software Engineer, unpacks a set of targeted optimizations, such as relocating metadata out of the code cache, enabling Transparent Huge Pages, and clustering hot methods, that reclaim instruction-fetch efficiency and tame front-end bottlenecks. These insights draw on real experiments with code-inflated workloads and show how to tune the interaction between the JVM and the CPU for better scalability.

Novatek Advances Datacenter Innovation with Neoverse CSS N2 SoC

Driving innovation in datacenter compute requires more than powerful cores; it calls for scalable design approaches that accelerate time-to-market while unlocking advanced node technologies. Dr. Daniel Ping, Assistant Vice President at Novatek, and Marc Meunier, Director of Hardware Ecosystem, Infrastructure at Arm, detail how Novatek leveraged the Arm Total Design program to successfully deliver a Neoverse CSS N2-based system-on-chip (SoC).

Their collaboration highlights how chiplet-based design is shaping the future of datacenter compute, offering flexibility, performance, and efficiency at scale. Discover how Novatek achieved this milestone and what it means for the next era of infrastructure innovation.

Analyzing Real-World DPDK Scaling on Arm Neoverse V2 with NVIDIA Grace

In an era where every microsecond of network processing counts, Doug Foster, Software Engineer at Arm, presents a rigorous, data-driven look at DPDK’s L3 forwarding on the NVIDIA Grace platform, which is built on Arm Neoverse V2 cores. His evaluation examines how real-world throughput diverges from ideal scaling and exposes bottlenecks tied to Scalable Coherency Fabric (SCF) cache evictions, MMIO doorbell latency, and buffer mismatches between the NIC and the DPDK poll mode driver (PMD).

By tuning queue depths and reducing processing overhead, he shows how to reclaim headroom lost to these system-architecture effects. His analysis of how DPDK performance actually behaves on modern Arm infrastructure offers both insight and actionable guidance.

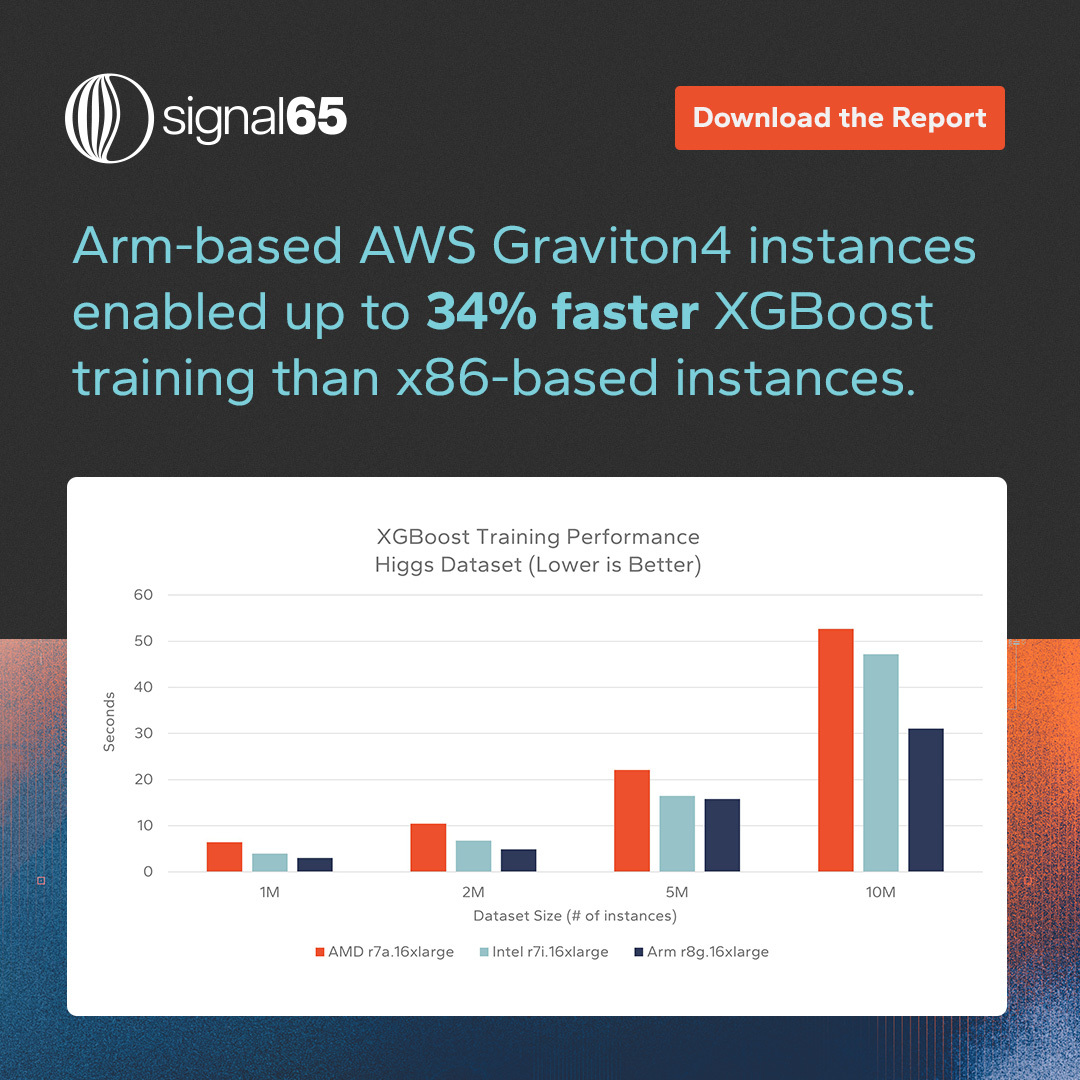

Lessons from Migrating Large-Scale AI Inference to Arm-Powered Infrastructure

As demand for generative AI grows, developers are under pressure to deliver faster results while reducing costs and energy use. Arm Ambassador Cornelius Maroa details how Vociply AI successfully migrated its large language model (LLM) inference workloads from x86 to Arm-based AWS Graviton3 instances. The move cut costs by around 35%, delivered 15% faster inference, and improved energy efficiency. He also explores the migration process, the lessons learned, and why the team now views Arm-powered infrastructure as a long-term advantage for scaling AI services.

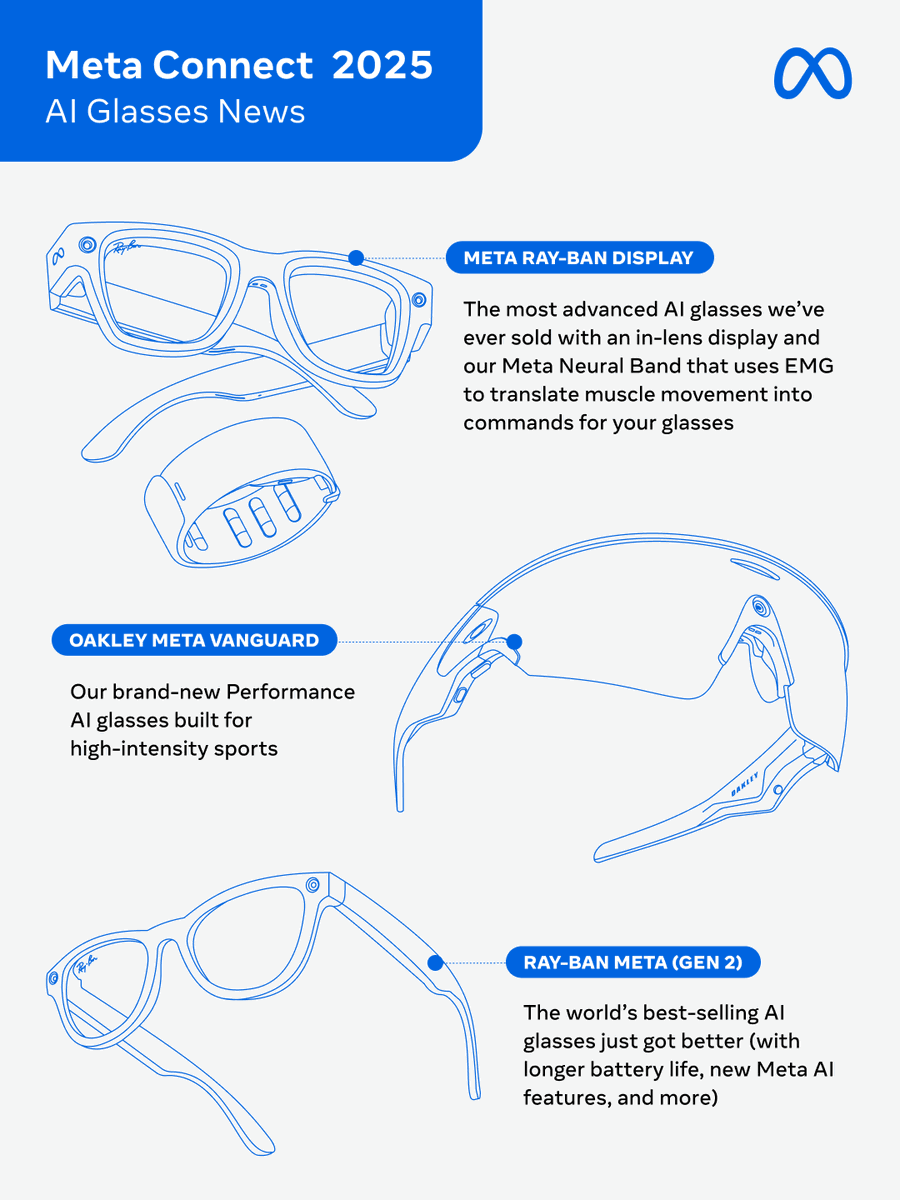

Preparing Developers for the Next Generation of Mobile GPUs

AI is redefining the future of real-time graphics. In this SIGGRAPH 2025 session, experts explore how neural techniques like reconstruction, denoising, and upscaling are driving major efficiency gains, and what it will take to deliver them on mobile devices with tight power budgets. The talk covers the evolving standards shaping neural graphics, strategies for managing heterogeneous workloads and accelerators, and practical ways to train and deploy custom networks.

With insights into the role of new hardware, APIs, and real-time AI, developers will see how to prepare for the next generation of mobile GPUs and push the boundaries of visual fidelity and performance.

How Arm SME2 Powers the Next Generation of AI-Driven Fitness Apps

Personalized fitness is moving closer to reality with AI that can guide and respond in real time. Hamza Arslan, Graduate Engineer, demonstrates how he built an AI-powered Yoga Tutor that uses pose estimation and conversational feedback to improve practice. He highlights how Arm’s Scalable Matrix Extension 2 (SME2) accelerates the entire pipeline, enabling ultra-low-latency feedback on future devices. The result offers a glimpse of how edge AI can deliver accessible, intelligent fitness coaching anywhere.

How Formal Verification Methods Improved Floating-Point Accuracy

Accurate floating-point division is critical, because even small errors can cascade into major problems. In a two-part series on the Arm Community, Principal Software Engineer Simon Tatham explains how he used the Gappa tool to formally verify a floating-point division routine.

Part 1 explores how Gappa and the Rocq prover revealed discrepancies in error bounds, prompting closer analysis. Part 2 details how the machine instructions were mapped into Gappa, the cross-checks made with Python wrappers, and the bug this uncovered in the original approach. Simon Tatham then walks through the fix, which balances performance with accuracy, and revalidates the final implementation.

The result: a faster, formally verified routine that demonstrates how rigorous analysis can strengthen confidence without slowing development.
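
Formal tools such as Gappa prove an error bound for every input, whereas a sampled cross-check can only suggest one. To make that distinction concrete, the hypothetical C sketch below (not Simon Tatham's routine, and not using Gappa) divides by refining a reciprocal estimate with Newton-Raphson iterations, then measures the worst observed error, in units in the last place (ULPs), against the correctly rounded result of `a / b`. It assumes IEEE 754 binary64 doubles and, for brevity, a divisor already reduced to [1, 2); real routines perform that exponent reduction first.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

static_assert(sizeof(double) == sizeof(int64_t), "expects 64-bit doubles");

/* Hypothetical fast division, assuming 1 <= b < 2 (exponent reduction omitted). */
double nr_divide(double a, double b, int iterations)
{
    double d = b * 0.5;                          /* d in [0.5, 1), exact scaling */
    /* Classic linear starting estimate of 1/d; relative error about 1/17 at worst. */
    double r = 48.0 / 17.0 - (32.0 / 17.0) * d;
    for (int i = 0; i < iterations; i++)
        r = r * (2.0 - d * r);                   /* Newton step: relative error roughly squares */
    return a * (r * 0.5);                        /* 1/b = (1/d) / 2, exact scaling */
}

/* Distance in ULPs between two finite doubles of the same sign: consecutive
   binary64 values of one sign have consecutive bit patterns. */
int64_t ulp_distance(double x, double y)
{
    int64_t ix, iy;
    memcpy(&ix, &x, sizeof ix);
    memcpy(&iy, &y, sizeof iy);
    return ix > iy ? ix - iy : iy - ix;
}

/* Sample a and b uniformly in [1, 2) and return the worst ULP error observed
   against the correctly rounded quotient a / b. */
int64_t max_ulp_error(int iterations, int samples)
{
    uint64_t state = 0x243F6A8885A308D3ULL;      /* fixed seed for repeatability */
    int64_t worst = 0;
    for (int i = 0; i < samples; i++) {
        /* 64-bit LCG (Knuth's MMIX constants); the top 53 bits give [0, 1). */
        state = state * 6364136223846793005ULL + 1442695040888963407ULL;
        double a = 1.0 + (double)(state >> 11) * 0x1p-53;
        state = state * 6364136223846793005ULL + 1442695040888963407ULL;
        double b = 1.0 + (double)(state >> 11) * 0x1p-53;
        int64_t err = ulp_distance(nr_divide(a, b, iterations), a / b);
        if (err > worst)
            worst = err;
    }
    return worst;
}
```

With four iterations the sampled error stays within a handful of ULPs, while a single iteration is off by many orders of magnitude. A clean run over random samples still says nothing about the inputs it never drew, which is exactly the gap a formal proof of the error bound closes.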

Uncovering the Hidden Risks of Memory Barriers in Concurrent Systems

Many developers assume barriers enforce strict ordering in concurrent systems, but that assumption can fail in subtle yet serious ways. Wathsala Vithanage, Staff Software Engineer, and Ola Liljedahl, Systems Engineer (both at Arm), explain how certain memory barriers do not guarantee full ordering, leading to unsafe partial orders and hard-to-detect bugs. Using a real-world example from DPDK’s ring buffer logic, they show how assumptions about acquire/release fences can break down and offer practical fixes to ensure correct synchronization. Their analysis highlights where barriers can mislead, and how to design systems that avoid these pitfalls.
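
The acquire/release pairing that ring-buffer code depends on can be sketched with C11 atomics. The example below is a hypothetical single-producer, single-consumer ring. It is much simpler than DPDK's `rte_ring`, which supports multiple producers and consumers, and it is not the code analyzed in the article. Each release store publishes the writes that precede it, and the matching acquire load on the other side makes those writes visible before the slot is touched. The comments also note an ordering that a release store does not provide.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stdint.h>

#define RING_SIZE 8u
static_assert((RING_SIZE & (RING_SIZE - 1)) == 0, "RING_SIZE must be a power of two");

/* Hypothetical single-producer/single-consumer ring (not DPDK's rte_ring). */
struct spsc_ring {
    _Atomic uint32_t head;          /* written only by the producer */
    _Atomic uint32_t tail;          /* written only by the consumer */
    uint32_t slots[RING_SIZE];
};

void ring_init(struct spsc_ring *r)
{
    atomic_init(&r->head, 0);
    atomic_init(&r->tail, 0);
}

/* Producer side. Returns false if the ring is full. */
bool ring_enqueue(struct spsc_ring *r, uint32_t value)
{
    /* Relaxed is enough: only this thread writes head. */
    uint32_t head = atomic_load_explicit(&r->head, memory_order_relaxed);
    /* Acquire pairs with the consumer's release store to tail, so the consumer's
       read of a slot happens-before we overwrite that slot. */
    uint32_t tail = atomic_load_explicit(&r->tail, memory_order_acquire);
    if (head - tail == RING_SIZE)
        return false;
    r->slots[head & (RING_SIZE - 1)] = value;
    /* Release orders the slot write above before the new head value.
       It does NOT stop later loads in this thread from completing before this
       store is visible (store-to-load reordering); code that needs that
       ordering requires a seq_cst fence or seq_cst operations. */
    atomic_store_explicit(&r->head, head + 1, memory_order_release);
    return true;
}

/* Consumer side. Returns false if the ring is empty. */
bool ring_dequeue(struct spsc_ring *r, uint32_t *out)
{
    uint32_t tail = atomic_load_explicit(&r->tail, memory_order_relaxed);
    /* Acquire pairs with the producer's release store to head: the slot
       contents written before that store are visible here. */
    uint32_t head = atomic_load_explicit(&r->head, memory_order_acquire);
    if (head == tail)
        return false;
    *out = r->slots[tail & (RING_SIZE - 1)];
    /* Release orders the slot read above before the freed slot becomes
       visible to the producer. */
    atomic_store_explicit(&r->tail, tail + 1, memory_order_release);
    return true;
}
```

In real use, `ring_enqueue` and `ring_dequeue` run on different threads; calling them from one thread, as a quick check would, only exercises the full and empty logic, not the cross-thread ordering.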

Enabling MPAM on Ubuntu for Predictable Performance Across Mixed Workloads

Effective resource control is critical for delivering predictable performance in multi-tenant and mixed-workload environments, helping to reduce “noisy neighbor” interference on Arm platforms. Howard Zhang, Staff Software Engineer, shares how to enable, configure, and validate MPAM (Memory System Resource Partitioning and Monitoring) on Ubuntu, from kernel setup through workload testing.

He demonstrates how to partition cache and memory bandwidth with the resctrl interface and shares experiments that show workload isolation in practice. This hands-on walkthrough equips developers with the tools to explore and validate resource isolation on Arm-based systems.
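
With resctrl, a control group is a directory under the resctrl mount, and its cache and bandwidth limits are set by writing lines to that group's `schemata` file. The sketch below formats and writes such lines from C. It assumes resctrl is mounted at `/sys/fs/resctrl` and that the platform exposes an `L3` resource (cache bit mask, written in hex) and an `MB` resource (memory bandwidth). The group path, domain IDs, and values are hypothetical; check the `info/` directory on the target system for what the MPAM driver actually exposes.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Format one schemata line, e.g. "L3:0=ff;1=ff" or "MB:0=50;1=50".
   hex != 0 prints values in hex (cache bit masks), otherwise in decimal.
   Returns the line length, or -1 if buf is too small. */
int format_schemata_line(char *buf, size_t len, const char *resource,
                         const unsigned *domain_ids, size_t n,
                         unsigned long value, int hex)
{
    assert(buf != NULL && resource != NULL && strchr(resource, ':') == NULL);
    int w = snprintf(buf, len, "%s:", resource);
    if (w < 0 || (size_t)w >= len)
        return -1;
    size_t used = (size_t)w;
    for (size_t i = 0; i < n; i++) {
        /* Domains are separated by ';', each written as <id>=<value>. */
        w = snprintf(buf + used, len - used, hex ? "%s%u=%lx" : "%s%u=%lu",
                     i ? ";" : "", domain_ids[i], value);
        if (w < 0 || (size_t)w >= len - used)
            return -1;
        used += (size_t)w;
    }
    return (int)used;
}

/* Write one line to <group_dir>/schemata. The kernel validates the line when
   it is written; with stdio buffering, a rejection surfaces when fclose flushes. */
int write_schemata(const char *group_dir, const char *line)
{
    char path[4096];
    int w = snprintf(path, sizeof path, "%s/schemata", group_dir);
    if (w < 0 || (size_t)w >= sizeof path)
        return -1;
    FILE *f = fopen(path, "w");
    if (f == NULL)
        return -1;
    int ok = fprintf(f, "%s\n", line) >= 0;
    if (fclose(f) != 0)
        ok = 0;
    return ok ? 0 : -1;
}
```

For example, formatting resource `L3` with domains 0 and 1 and mask `0xff` yields `L3:0=ff;1=ff`, which `write_schemata` would write into a group such as the hypothetical `/sys/fs/resctrl/batch`. Tasks are then moved into the group by writing their PIDs to its `tasks` file.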

Any re-use permitted for informational and non-commercial or personal use only.