AI for IoT: Opening up the Last Frontier

After a couple of decades working in the tech sector, I’m not easily surprised. But I was astonished to learn that our engineering team had pushed up the artificial intelligence (AI) performance of our newest cores by nearly 500 times.

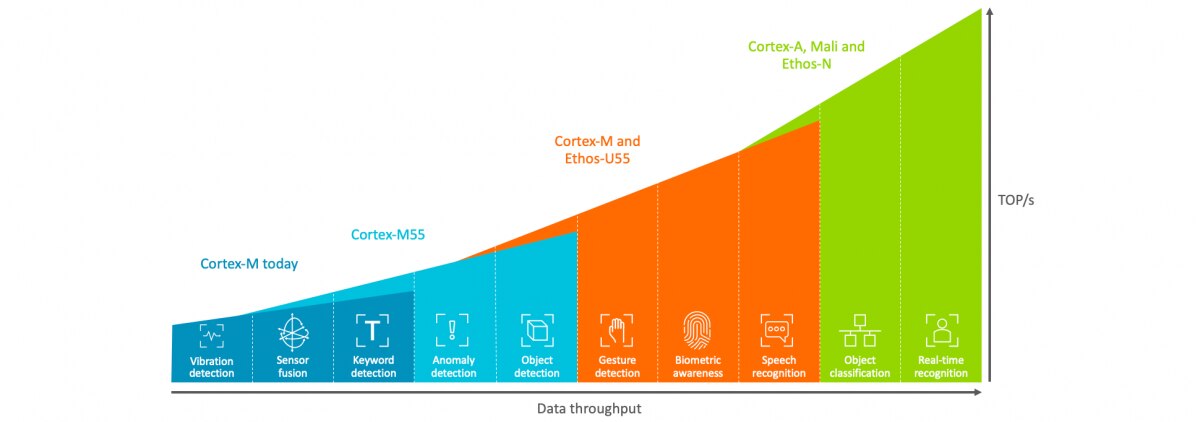

It was the back end of 2019, and the team had just finished final testing of the new microcontroller and neural network processors we launched today. By pairing the Arm Cortex-M55 CPU with the Arm Ethos-U55 micro neural processing unit (microNPU), our engineers had redefined what Arm-based Internet of Things (IoT) solutions can deliver. They’d set the course for what developers might do creatively with AI for IoT in the future.

I quickly realized that our engineers had opened up the last frontier in AI for IoT devices. There were almost no applications I could imagine where it wouldn’t now be possible to layer in machine learning (ML). And given what I was already seeing from the vast AI developer ecosystem, I knew it wouldn’t be long before this new capability turned into real-world products.

Enabling new, advanced use cases

The best technology for me is the kind you wish someone had invented earlier. But the simple truth is that the best ideas must often wait for technology to catch up. Autonomous cars are a great example: they’ll be life-changing, but we have to stretch the realms of what’s possible today to make them real at scale.

Another less top-of-mind example of AI for IoT is something that already exists, but the Cortex-M55 and Ethos-U55

I spoke to the Arm healthcare innovation team about how our new Cortex and Ethos

Our new processors could enable developers to swap out ultrasound sensing for AI-enabled visual sensing using a 360-degree camera. That, alongside wireless communications and navigation, would be powered by a super-slim battery capable of lasting all day. The device would look like a conventional stick but be the ultimate visual aid. And with the AI compute performed locally, it wouldn’t matter if cellular connectivity were lost.

It’s all about team play

As with any solution, though, it’s not just about the star players, whoever they may be; it’s about the whole team. Ultimately, it won’t be Arm or most of our chip partners coming up with the mind-blowing ideas behind the AI for IoT revolution; it will be the massive developer network working with our technology all over the world. So, more than ever, our focus needs to be on the team.

Software development in the AI for IoT space is highly complex, spanning a diverse range of use cases and hardware. Arguably, Arm’s biggest team job is to ensure developers’ lives don’t get any harder as we move through technology iterations. We’ve put a lot of thought into that, in particular the evolution of hardware abstraction layers such as CMSIS-Core, as well as CMS

AI for IoT will ‘just work’

Another developer concern is ensuring their favorite frameworks and toolchains are fully supported by hardware providers. This is at the heart of our CMSIS work and of our collaboration with fellow AI for IoT innovators such as Google to ensure TensorFlow Lite Micro will be fully supported by the Cortex-M55 and Ethos-U55 toolchain.

Check out the thoughts of Ian Nappier, TensorFlow Lite Micro product manager, below. The drivers for these processors will automatically optimize developers’ TensorFlow models to take full advantage of whatever hardware configuration they choose to deploy.

Fast forward to the future

We often talk about the Fifth Wave of Computing, the combination of AI with IoT and 5G, and I already see AI and IoT coming together in huge numbers of devices. Our new Cortex and Ethos processor pairing will take that to a new level of performance.

This latest launch maps exactly to Arm’s grander vision for distributed computing: boosting what’s possible with AI for IoT, efficiently distributing as much compute as we can at the endpoint, building an AI-driven Network Edge and pushing more efficiency and cost-saving in the cloud with new server technologies such as Graviton.

But we learned from the recently published Arm 2020 Global AI Survey that up to three-quarters of consumers prefer their data to be processed on-device, with only crucial data sent to the cloud. By elevating the AI capability of devices so they don’t have to rely on cloud compute, we enable a richer framework for trust: access to sensitive data can be controlled across the compute layers.

Over to you, developers

I’m delighted that we’ve pushed up the performance of AI for IoT endpoints by almost 500x with our Cortex-M55 and Ethos-U55 processors. But it’s what they will enable that really excites me, inspiring possibilities as developers push AI down into the tiniest of applications and scale it up to the grandest of uses, all done more efficiently than ever before.

Finally, coming back to my opening point about how hard it is to surprise me now, I’m already preparing myself for the next joyful moment: when an inventor makes something with our new technology that none of us dreamt of. I’m sure my reaction will be a mixture of ‘wow’ and ‘I wish I’d thought of that’. But most of all, I’ll be celebrating the impact that Arm, our ecosystem and its developers will have on the world.


Any re-use permitted for informational and non-commercial or personal use only.