How AI Inside the Car Is Quietly Redefining Vehicle Design

What was once thought of as a distant promise is now happening inside the car, with artificial intelligence (AI) transforming how people interact with their vehicles.

Many of these changes go unnoticed. A navigation prompt anticipates a stop at a red light, or a voice assistant remembers your preferred commuting route. These subtle moments show how AI has moved inside the vehicle, bringing invisible improvements to the driving experience.

According to the Arm AI Readiness Index, 82% of global business leaders report that they already use AI applications, but only 39% have a clear strategy to scale them – a gap that is now playing out in the automotive industry too. The technology is here; the bigger question is how to make AI work everywhere, safely, efficiently, and at scale.

This is exactly the question Suraj Gajendra, VP Products and Solutions at Arm, set out to answer in a two-part conversation with Junko Yoshida on her new podcast series, AI Toy to Tools. As Suraj says, “Automotive isn’t being left out of the AI revolution. The way AI is changing the world is unbelievable, and inside vehicles, that change is happening faster than many realize.”

The New Intelligence Inside the Car

For years, the conversation around automotive AI was dominated by the promise of self-driving technologies. But the real progress is happening in the car we already know, where the driver is still very much in control.

Suraj describes a new generation of multimodal cabin copilots that merge voice, vision, and gesture into a natural, conversational interface. These systems run on-device, using specialized compute built on the Arm architecture. Running on-device helps reduce latency, protects privacy, and creates an experience that feels intuitive instead of technical.

“The next step is continuity,” Suraj says. “You take the interaction you have with your assistant at home into the cockpit, meaning the conversation continues and it’s seamless.”

AI is also changing how people feel in their cars, and even the standard rituals of car ownership are getting smarter. The thick owner’s manual, for instance, is going digital. Instead of flipping through a book that is usually buried in the glovebox, drivers can ask their vehicles simple questions such as “What does this light mean?” or “What’s the right tire pressure today?” and get clear, instant, contextual answers from an AI model built into the car.

When AI Meets the Open Road

If the cabin is where AI feels most human, the world outside the vehicle is where it becomes most complex. Advanced Driver Assistance Systems (ADAS) and automated driving continue to evolve, and so do the AI model approaches behind them.

Traditional ADAS software breaks the driving task into distinct modules: one system identifies obstacles (perception), another decides how to respond (planning), and a third executes the manoeuvre (control). Newer end-to-end AI models, trained on billions of data points, are starting to merge those layers into a single neural network that translates raw sensor input directly into driving actions.
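To make the contrast concrete, here is a deliberately simplified Python sketch. It is illustrative only: the `SensorFrame` and `Action` types, the `perceive`/`plan`/`act` functions, and the 2-second time-to-collision threshold are all hypothetical, and the "model" passed to the end-to-end path is a stand-in for what would, in practice, be a trained neural network.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class SensorFrame:
    """Hypothetical, heavily simplified sensor input."""
    obstacle_distances_m: List[float]  # distances to detected objects ahead, in metres
    speed_mps: float                   # current vehicle speed, in metres per second


@dataclass
class Action:
    brake: float  # 0.0 = no braking, 1.0 = full braking


# --- Modular approach: three separately built and tested stages ---

def perceive(frame: SensorFrame) -> Optional[float]:
    """Perception: return the distance to the nearest obstacle, or None if there is none."""
    return min(frame.obstacle_distances_m, default=None)


def plan(nearest_m: Optional[float], speed_mps: float) -> str:
    """Planning: brake if time-to-collision falls below an illustrative 2 s threshold."""
    if nearest_m is None or speed_mps <= 0:
        return "cruise"
    time_to_collision_s = nearest_m / speed_mps
    return "brake" if time_to_collision_s < 2.0 else "cruise"


def act(decision: str) -> Action:
    """Control: translate the plan into an actuator command."""
    return Action(brake=1.0 if decision == "brake" else 0.0)


def modular_pipeline(frame: SensorFrame) -> Action:
    # Each stage has its own inputs and outputs, so it can be verified in isolation.
    return act(plan(perceive(frame), frame.speed_mps))


# --- End-to-end approach: one learned mapping from sensors to action ---

def end_to_end_pipeline(frame: SensorFrame,
                        model: Callable[[SensorFrame], Action]) -> Action:
    # In a real system `model` would be a trained network; here it is any
    # callable with the same signature, so there are no intermediate stages to inspect.
    return model(frame)
```

For example, `modular_pipeline(SensorFrame([30.0, 12.0], 10.0))` finds the nearest obstacle at 12 m, computes a 1.2 s time-to-collision, and returns a full-brake action. The modular version exposes every intermediate result for testing and safety verification, while the end-to-end version trades that transparency for a single learned function.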

Both approaches are already on the road today. In many premium vehicles, AI models now manage everything from hands-free lane changes to adaptive highway cruising, interpreting data from radar, LiDAR, and cameras in real time. Each approach demands a different balance of compute power, bandwidth, and safety verification, and together they are redefining the software stack that runs the car.

As Suraj says, “You can’t throw away millions of miles of modular learning overnight. Both modular and end-to-end AI will coexist for some time.”

Learn more about how you can scale automated driving using Arm Zena CSS.

Bridging the Cloud and Car

What drivers experience as convenience depends on something far more complex behind the scenes: cloud-to-car parity. AI training workloads will continue to be processed in the cloud, but inference – which enables real-time decisions – will take place inside the vehicle.

“Parity between the cloud and car is going to be even more important,” says Suraj. “The closer the two environments are in architecture and tools, the faster you can move from development to deployment.”

This parity matters because it lets developers build and test models in the cloud, then run them in the car without rewriting code or compromising performance. Because the Arm compute platform is used both in data centers and at the edge, including in vehicles, engineers can move seamlessly from simulation to silicon, improving performance and efficiency, simplifying porting, and keeping inference results consistent.

Building Trust Into Vehicle Intelligence

Modern vehicles are increasingly AI-defined, juggling dozens of simultaneous tasks. These range from keeping passengers safe by managing steering, braking, and collision avoidance to enhancing the experience with streaming music, fatigue monitoring, and navigation through city traffic. These workloads run side by side, yet each must meet a different level of urgency, safety, and security.

“You have to understand where in the mixed-criticality spectrum your application sits and what it must support,” says Suraj.

That’s where the architecture makes the difference. Arm Automotive Enhanced (AE) processors are designed with functional safety built in, ensuring that systems performing life-critical operations remain predictable even when everything else in the car is multitasking.

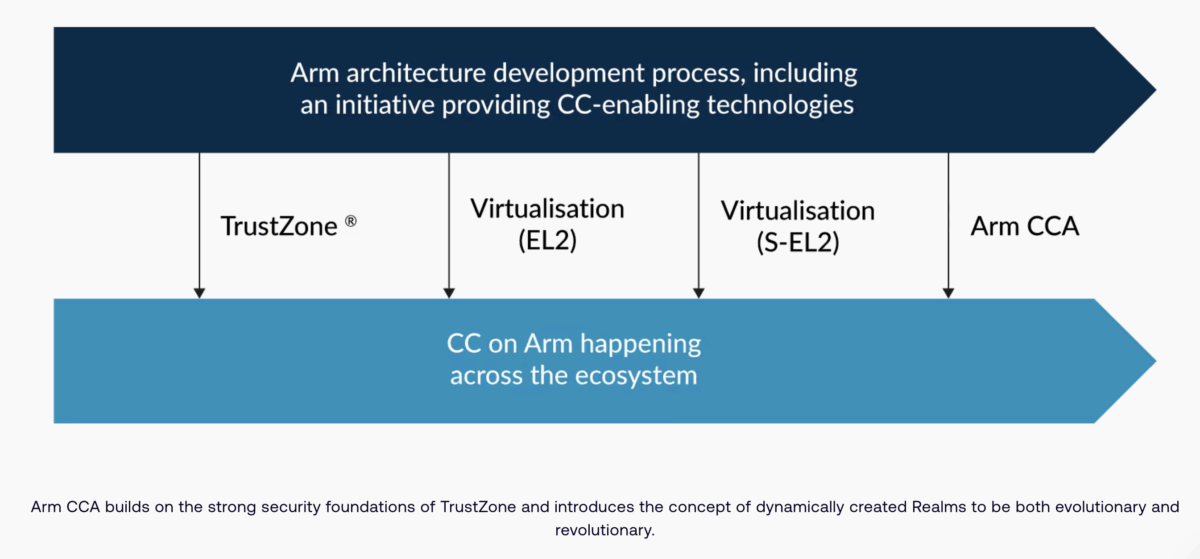

At the same time, the Arm Confidential Compute Architecture (CCA) creates realms: secure, hardware-isolated execution environments that separate sensitive workloads from general applications. A driver monitoring algorithm, for instance, can analyze camera data without ever exposing it to the infotainment system. This helps keep safety and privacy intact, even as compute loads rise.

The challenge is not simply to add more performance, but to maintain trust as vehicles grow more intelligent.

The Power of Common Ground

The next leap in automotive AI is less about inventing new hardware than about making everything work together. Each vehicle platform brings its own chips, middleware, and operating systems, so without common ground, developers would have to rewrite code for every new model.

That’s why collaboration matters. The Scalable Open Architecture for Embedded Edge (SOAFEE) sets shared standards for the foundational software that underpins vehicle platforms. Unifying boot flows, power management, and low-level firmware allows developers to spend their time where it counts – on differentiating user experiences and AI capabilities.

As Suraj says, “The goal of SOAFEE is to standardize the layers of software where there is no differentiation needed, giving developers a consistent foundation to build on across different Arm-powered platforms.”

Inside the Blueprint for Scalable AI Hardware

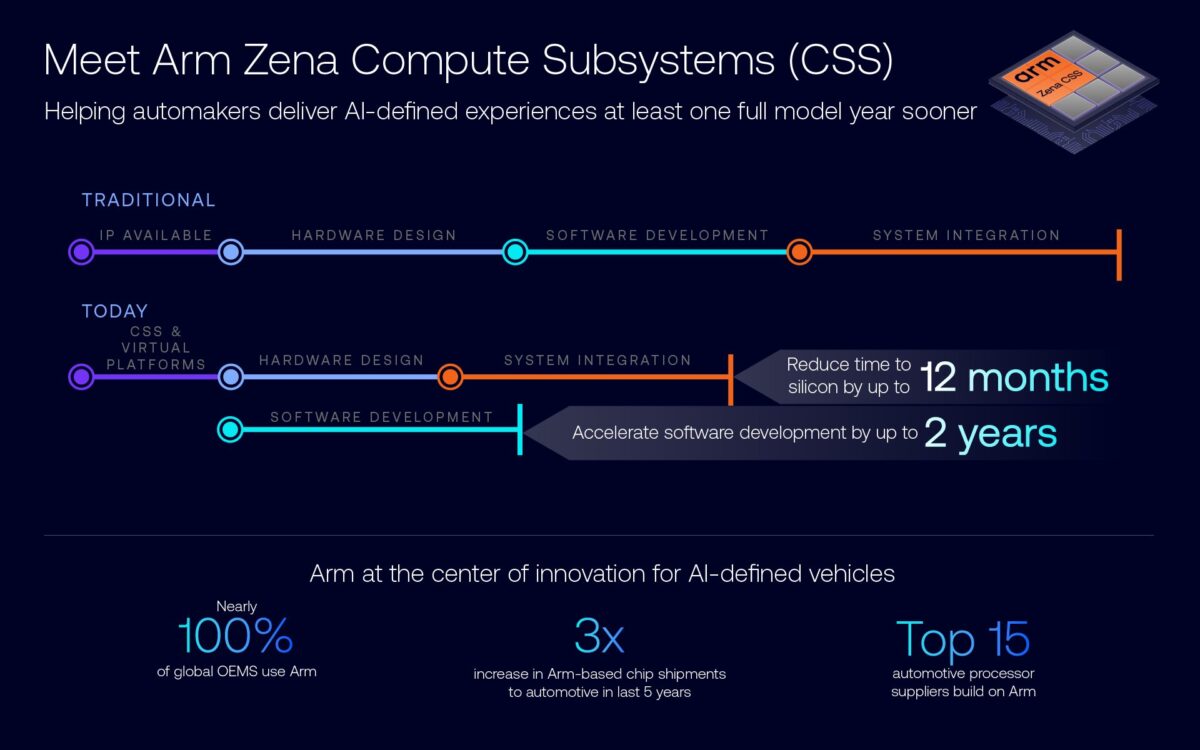

Under the hood, scalability takes physical shape through the Arm Zena Compute Subsystem (CSS), a pre-integrated and pre-validated compute platform for next-generation automotive SoCs. It brings together CPU clusters, a safety island, a security enclave, system IP, and debug tools into one cohesive platform.

“More compute can unlock richer AI, but it also draws more energy. Hence, a subsystem view makes those trade-offs visible,” says Suraj. That visibility helps engineers make smarter design choices, balancing power, safety, and cost in a way that scales across vehicle tiers.

The virtual platforms Arm introduced in 2024 are now being extended to Zena CSS, giving software teams a head start on development and deployment. Developers can build and test code before silicon arrives, using digital twins of the hardware to optimize performance. That means when chips are ready, software is too.

Driving Toward an AI-Defined Future

The journey toward AI-defined vehicles is as much about engineering discipline as it is about imagination and innovation. AI now links the driver experience, the compute in the vehicle, and the cloud, and that whole chain needs to deliver high performance and efficiency alongside a seamless development experience.

As Suraj says, “Cloud will always be in the game, but it’s important to make the cloud-to-car path robust and as seamless as possible.”

As automotive AI matures, its success will depend on the same balance Arm has championed for decades: performance and efficiency that empower innovation, and scalability that allows more people to benefit from new technology experiences.


Any re-use permitted for informational and non-commercial or personal use only.