AI and IoT Are Enabling the Third Wave of Photography

Photography remains one of humanity’s favorite inventions. Its first wave, beginning in the early 1800s, was quickly embraced by artists and journalists alike, who used it to capture everything from the horrors of war to the magnificence of ancient civilizations. In 1888, George Eastman released the first in a series of point-and-shoot cameras, giving non-experts the power to document their own lives.

The second wave began on June 18, 1878, when Eadweard Muybridge captured a series of high-speed images of a horse on a racetrack. The now-famous sequence proved that all four hooves leave the ground at the top of the stride—Muybridge was out to settle a bet—and set off a quest for motion pictures. Hollywood, TV, and the 24-hour news cycle followed.

Together, pictures and video dominate the internet. Humans snapped an estimated 1.4 trillion photos in 2021 (nearly all of them captured on Arm-based smartphones, tablets, and smart cameras). Video, meanwhile, accounts for more than 70 percent of internet traffic.

So what happens in the third wave? Thanks to continual improvements in processing power and computer vision powered by artificial intelligence (AI), the ‘camera’ will become a device for capturing multiple sensory inputs and weaving them into a richer, more comprehensive, and more insightful picture of the surrounding world. You’ll be able to capture what your eyes see as well as what they ordinarily can’t.

Hot shots with thermal imaging

Replicating a sensor as capable as the human eye lets computers perform a wide range of tasks that previously required human sight, so it’s no wonder that computer vision is quickly becoming one of the most important ways to capture and act on real-world data within the Internet of Things (IoT).

But smart cameras don’t just capture what we can see as humans. They’re also becoming ever more adept at capturing what we can’t, using imaging techniques that target other regions of the electromagnetic spectrum.

Consider thermal data. Thermal cameras, invented in the 1920s, were used for decades primarily by the military, lab technicians, and engineers. During the 2000s, falling prices and improving performance let contractors use thermal equipment to pinpoint air gaps in homes. Still, thermal imaging wasn’t exactly what you’d call a must-have app: the closest most of us got to one was watching Ghost Hunters.

Then came COVID-19. Campuses, museums, and other facilities with high foot traffic suddenly needed an accurate, quick, and contactless way to find temperature anomalies. AI-enhanced thermal cameras (with appropriate privacy mechanisms built in) will increasingly be included in smart building platforms to improve health, reduce energy consumption and make complex AI tasks like predictive maintenance and production optimization practical.
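At its simplest, contactless temperature screening means scanning each thermal frame for readings above a cutoff. The sketch below uses a plain threshold rule rather than an AI model. It assumes the camera already delivers a calibrated grid of temperatures in degrees Celsius. The function name `find_hot_spots` and the 38.0 °C cutoff are hypothetical choices made for illustration.

```python
from typing import List, Tuple

# Hypothetical screening cutoff in degrees Celsius (an assumption for
# illustration, not a figure from any product or guideline).
DEFAULT_THRESHOLD_C = 38.0


def find_hot_spots(
    frame: List[List[float]], threshold_c: float = DEFAULT_THRESHOLD_C
) -> List[Tuple[int, int, float]]:
    """Return (row, col, temperature) for every pixel at or above threshold_c.

    `frame` is assumed to be a calibrated thermal image already converted
    to degrees Celsius, one float per pixel.
    """
    hot_spots = []
    for row_idx, row in enumerate(frame):
        for col_idx, temp_c in enumerate(row):
            if temp_c >= threshold_c:
                hot_spots.append((row_idx, col_idx, temp_c))
    return hot_spots


if __name__ == "__main__":
    # A tiny 3x3 frame in which one pixel reads warmer than the cutoff.
    sample_frame = [
        [36.4, 36.6, 36.5],
        [36.7, 38.3, 36.8],
        [36.5, 36.6, 36.4],
    ]
    print(find_hot_spots(sample_frame))
```

Running it prints `[(1, 1, 38.3)]`. A deployed system might layer AI on top of a rule like this, for example to decide which pixels belong to a person. It would also need the calibration and privacy safeguards that this sketch leaves out.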

In the not-too-distant future, thermal imaging (now available through add-on modules) could become more common in smartphones, and not just for life-and-death matters. Imagine a thermal component added to a multiplayer augmented reality (AR) game: thermal-enhanced imaging would let players pinpoint the location of a target or an opponent.

Or think about combining video streams with motion data and analyzing it all with AI. Fusing AI, motion sensing, and visual data streams in web cameras will make possible digital TVs that you navigate by gesture, customized to your own behavior.

Eldercare? Multiple sensors and cameras feeding data will allow the elderly to live independently for longer. At the same time, they will give concerned relatives peace of mind through vital signs such as blood oxygen levels (with or without wearables) and video snippets of grandpa making a sandwich.

The car and camera fusion

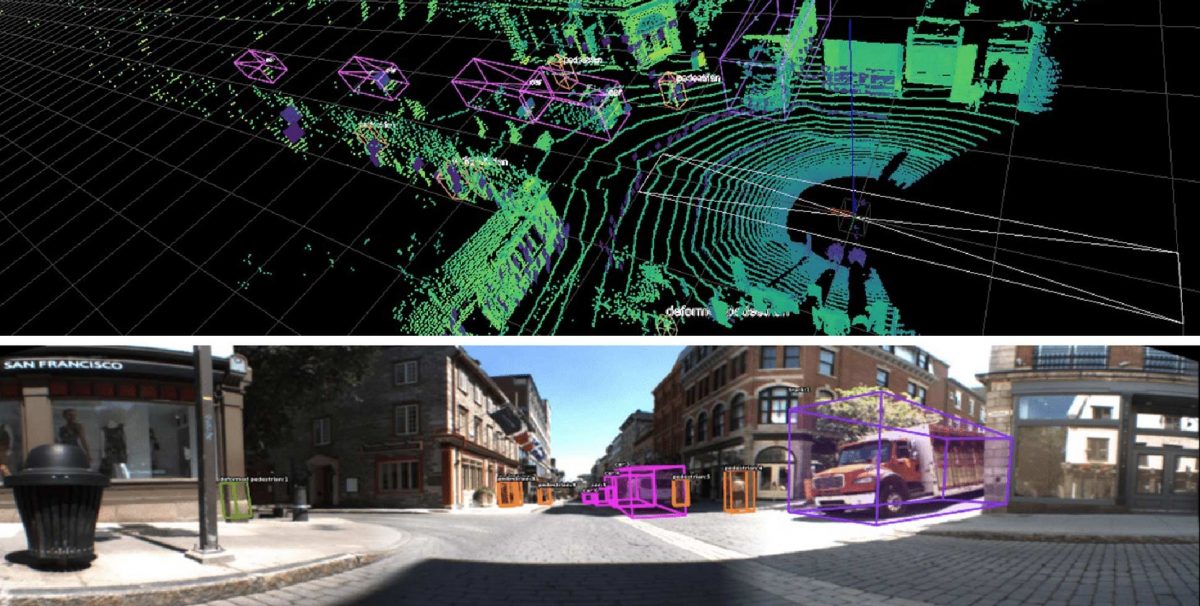

Now we move to cars. LiDAR (light detection and ranging) uses eye-safe lasers to build a dynamic 3D image of its surroundings. Velodyne founder David Hall debuted a LiDAR prototype at the second DARPA Grand Challenge for robotic vehicles in 2005. His car didn’t finish, but five of the six finishers in the next event, the 2007 DARPA Urban Challenge, relied on LiDAR.
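Pulsed LiDAR ranging rests on time of flight. A laser pulse travels to the target and back, so the distance is half the round-trip time multiplied by the speed of light. Some sensors use other techniques, such as frequency-modulated continuous wave (FMCW). The sketch below assumes a scanning unit that reports each return as a round-trip time plus the beam’s azimuth and elevation angles. The function names are hypothetical and are not taken from any vendor SDK.

```python
import math
from typing import Tuple

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact value in vacuum


def range_from_round_trip(round_trip_s: float) -> float:
    """Distance to the target: the pulse covers the path twice, so halve it."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def to_cartesian(
    distance_m: float, azimuth_deg: float, elevation_deg: float
) -> Tuple[float, float, float]:
    """Convert one return (range plus beam angles) into an (x, y, z) point."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = distance_m * math.cos(el)  # projection onto the ground plane
    return (
        horizontal * math.cos(az),
        horizontal * math.sin(az),
        distance_m * math.sin(el),
    )


if __name__ == "__main__":
    # A return that arrives 200 nanoseconds after the pulse left.
    d = range_from_round_trip(200e-9)
    print(f"{d:.2f} m")  # about 29.98 m
    print(to_cartesian(d, azimuth_deg=45.0, elevation_deg=-2.0))
```

Repeating this conversion for every return in a sweep yields the point cloud from which the dynamic 3D image is built.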

Meanwhile, Lucid has said that its sedan, due next year, will include a DreamDrive Pro autonomous driving platform powered by LiDAR, while LeddarTech is working to standardize LiDAR to drive down costs.

Thermal technology will be integrated into car cameras as well. Adasky says its Viper camera, which combines thermal technology, visual imaging, and machine learning (ML), can detect objects at 300 meters and classify living objects at 200 meters. Headlights, by comparison, provide visibility for only about 80 meters.
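To put those ranges in perspective, divide each one by vehicle speed. The result is the time a driver or driving system has to react. The sketch assumes a constant 100 km/h. That speed is an illustrative assumption and does not come from Adasky. The calculation also ignores braking distance and processing latency.

```python
def seconds_of_warning(range_m: float, speed_kmh: float) -> float:
    """Time until the vehicle reaches an object first seen at range_m."""
    speed_m_per_s = speed_kmh / 3.6  # 1 km/h = 1/3.6 m/s
    return range_m / speed_m_per_s


if __name__ == "__main__":
    ASSUMED_SPEED_KMH = 100.0  # illustrative highway speed, an assumption
    for label, range_m in [
        ("headlights", 80.0),
        ("classify living objects", 200.0),
        ("detect objects", 300.0),
    ]:
        t = seconds_of_warning(range_m, ASSUMED_SPEED_KMH)
        print(f"{label:>24}: {t:.1f} s")
```

At 100 km/h, 80 meters of headlight visibility leaves about 2.9 seconds of warning. Detection at 300 meters stretches that to about 10.8 seconds.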

What other inputs could you combine? Some utilities are exploring ways to visualize gas leaks and integrate them into video streams and maps to help repair crews and firefighters. A smartphone that combines an olfactory sensor, a camera, ML, and some clever graphics could help you find the ripest tangerines in a massive grocery display, or steer you away from a package of hot dogs that have seen better days.

And let’s not forget 3D. It was a passing fad in the 1950s and again in the 2010s. But computational photography, AI, new screen technologies, and the fusion of CAD models with multiple images are now producing realistic, immersive images that don’t make viewers queasy.

A technology revolution under the hood

All of this, of course, will take work. Computer vision and other AI applications for fusing sensor streams or enhancing images will largely have to run on the camera itself to reduce latency and energy consumption. For example, suppose image analysis ran on the estimated 770 million surveillance cameras in the world instead of in the cloud. That shift could avoid over 19 million tons of CO2 a year while also enhancing privacy protection. Advanced photography will also require 64-bit processors that pair the traditional CPU with specialized cores for graphics and neural network processing.
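Dividing the fleet-wide estimate by the number of cameras shows what each device contributes. The sketch assumes metric tons, because the text does not specify the unit. It also assumes the savings are spread evenly across all cameras, which is a simplification.

```python
def per_camera_share(total: float, camera_count: float) -> float:
    """Divide a fleet-wide total evenly across cameras."""
    return total / camera_count


if __name__ == "__main__":
    SURVEILLANCE_CAMERAS = 770e6  # estimate cited in the text
    AVOIDED_CO2_TONS_PER_YEAR = 19e6  # estimate cited in the text

    tons_per_camera = per_camera_share(AVOIDED_CO2_TONS_PER_YEAR, SURVEILLANCE_CAMERAS)
    # Assumes metric tons (1 t = 1,000 kg); the text does not say which ton.
    kg_per_camera = tons_per_camera * 1000
    print(f"{kg_per_camera:.1f} kg per camera per year")
    print(f"{kg_per_camera * 1000 / 365:.0f} g per camera per day")
```

That works out to roughly 25 kg per camera per year, or under 70 g a day. The individual saving is small, and the total becomes large only because of the size of the installed base.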

Computational storage, in which many computing tasks run inside the storage drive itself, will also find an early market in advanced photography. Likewise, machine learning algorithms, processors, and other components will have to be meticulously designed for energy efficiency so that battery-powered devices don’t fail at the crucial moment. A software-defined approach would also make it easier for hardware manufacturers and app developers to add new features over time.

Meanwhile, all this engineering work will take place alongside growing demands for higher resolution and more features. Moving from 1080p to 4K will double the data rate in smart cameras, and rates will keep climbing with 8K and the shift from 30 to 60 frames per second. More sophisticated cameras will also mean a larger attack surface for hackers, so expect to see specialized cryptography processors in the mix.
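The growth is easiest to see in uncompressed terms: pixels per frame times frames per second times bits per pixel. This sketch assumes 24 bits per pixel (8-bit RGB) as an illustrative choice. Real smart cameras compress their streams, so their transmitted bitrates are far lower and depend on the codec, settings, and scene.

```python
# Common resolutions as (width, height) in pixels.
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "4K": (3840, 2160),
    "8K": (7680, 4320),
}


def raw_bits_per_second(width: int, height: int, fps: int, bits_per_pixel: int = 24) -> int:
    """Uncompressed video data rate: pixels per frame x frames/s x bits/pixel."""
    return width * height * fps * bits_per_pixel


if __name__ == "__main__":
    for name, (w, h) in RESOLUTIONS.items():
        for fps in (30, 60):
            gbps = raw_bits_per_second(w, h, fps) / 1e9
            print(f"{name:>5} @ {fps} fps: {gbps:6.2f} Gbit/s uncompressed")
```

In raw terms, 4K carries four times the pixels of 1080p. 8K at 60 fps carries 32 times the raw throughput of 1080p at 30 fps. Compression typically narrows that gap in the stream a camera actually sends.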

Drawing with light… and technology

One could argue that this sort of fusion goes beyond photography. Photography, after all, means “drawing with light.” Adding other sensory inputs, or realistic images that technically aren’t real at all, stretches past what a camera is today. But to the public, cameras have always been about capturing reality as accurately as possible, and the scope of that “reality” is about to get a lot bigger.

Fusing sensory inputs into a single, smarter device, in other words, gives people what they really want: the big picture.

Any re-use permitted for informational and non-commercial or personal use only.