AI’s Trillion-Dollar Opportunity

We know that artificial intelligence (AI) promises to transform every aspect of human activity, but realizing this potential requires confronting a fundamental truth: the infrastructure that powered yesterday’s computing won’t scale to meet tomorrow’s AI ambitions.

Consider the magnitude of change already underway:

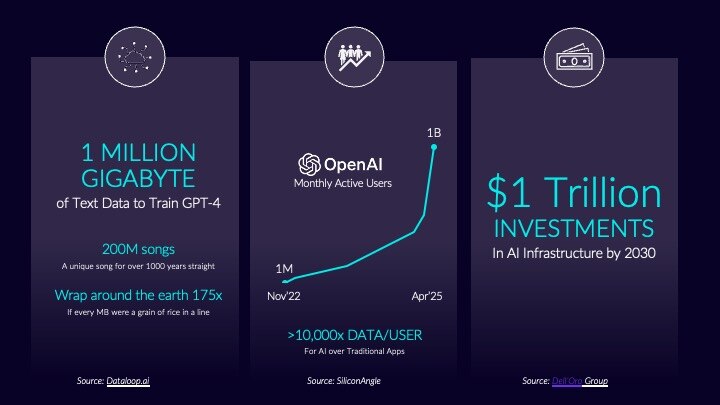

- Training GPT-4 required over a petabyte of data, equivalent to about 200 million songs, or enough music to play continuously for more than 1,000 years.

- OpenAI serves 1 billion monthly active users, each of whom consumes 10,000 times more data than a user of a traditional application.

- By 2030, this AI revolution is expected to drive over $1 trillion in infrastructure investment.
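The song comparison in the first bullet is easiest to check as back-to-back playback time. The back-of-envelope sketch below assumes about 5 MB and 4 minutes per compressed song and uses decimal units (1 PB = 10^15 bytes). These are illustrative assumptions, not figures from the talk, and the helper names are hypothetical.

```python
# Back-of-envelope check of the "petabyte = 200 million songs" comparison.
# ASSUMPTIONS (not from the talk): ~5 MB and ~4 minutes per compressed song,
# decimal units (1 PB = 10**15 bytes).
PETABYTE_BYTES = 10**15
SONG_BYTES = 5 * 10**6            # assumed average size of one compressed song
SONG_MINUTES = 4                  # assumed average song length
MINUTES_PER_YEAR = 365.25 * 24 * 60


def songs_in(bytes_total: int) -> int:
    """Number of whole songs that fit in bytes_total (hypothetical helper)."""
    return bytes_total // SONG_BYTES


def playback_years(num_songs: int) -> float:
    """Years needed to play num_songs back to back (hypothetical helper)."""
    return num_songs * SONG_MINUTES / MINUTES_PER_YEAR


songs = songs_in(PETABYTE_BYTES)
years = playback_years(songs)
print(f"{songs:,} songs, about {years:,.0f} years of continuous playback")
# prints "200,000,000 songs, about 1,521 years of continuous playback"
```

Under these assumptions, a petabyte works out to roughly 200 million songs and about 1,500 years of continuous listening, which is consistent with the "more than 1,000 years" framing. Different per-song sizes or lengths shift the result proportionally.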

This explosive growth is pushing power consumption in data centers from megawatts to gigawatts, creating constraints that can’t be solved by simply adding more commodity servers. The industry must fundamentally rethink how we architect, build, and deploy computing infrastructure. The companies that master this transformation will unlock AI’s full potential—and those that don’t risk being left behind.

That was the message Mohamed Awad, senior vice president and general manager of Arm’s Infrastructure Line of Business, shared Sunday night during a SKYTalk at the 62nd Design Automation Conference (DAC) in San Francisco.

Lessons learned

He said the blueprint for managing such massive technological shifts already exists. Over the past three decades, successful technology revolutions—from mobile computing to automotive transformation to IoT deployment—have followed a consistent pattern. Companies that emerged as leaders shared three common characteristics:

- They pursued technology leadership

- They adopted systems-level thinking

- They fostered strong ecosystems

This pattern offers crucial lessons for the AI transformation. Consider how the mobile revolution wasn’t just about faster processors—it required rethinking everything from power efficiency to software stacks to manufacturing partnerships. Similarly, the automotive industry’s shift toward autonomous and electric vehicles demanded integrated approaches spanning silicon design, system architecture, and collaborative ecosystems.

“What it’s going to take for AI to really reach those lofty goals that we’re all setting for it is really more of the same,” Awad said. “Technology leadership, systems designed from the ground up, and a strong ecosystem.”

The infrastructure evolution imperative

The data center's evolution shows how rapidly the industry is adapting to AI's demands. Before 2020, companies relied on commodity servers with accelerators added via PCIe slots. By 2020, the focus had shifted to integrated servers with direct GPU-to-GPU connections, and 2023 brought tightly coupled CPU-GPU integration. Now the industry is moving toward complete “AI factories”: entire racks architected from the silicon up for specific workloads.

Leading technology companies are abandoning the one-size-fits-all approach. NVIDIA’s Vera Rubin AI Cluster, Amazon’s AI UltraCluster, Google’s Cloud TPU Rack, and Microsoft’s Azure AI Rack represent purpose-built systems optimized for their unique requirements rather than generic solutions.

“All the major hyperscalers are doing exactly the same thing,” Awad told a standing-room-only audience. “They’re building completely integrated systems from the silicon level, taking their system requirements and using that to drive silicon innovation.”

This shift reflects a broader industry recognition: AI’s computational demands require infrastructure designed specifically for AI workloads, not general-purpose systems retrofitted for artificial intelligence.

Proven performance at scale

The results speak volumes. AWS reports that over 50% of the new CPU capacity it has deployed in the last two years is based on its Arm-powered Graviton processors. Major workloads, from Amazon Redshift and Prime Day to Google Search and Microsoft Teams, are running on infrastructure built with technologies such as Arm Neoverse, delivering significant performance and efficiency gains.

These aren’t cost-cutting moves; they’re performance plays, he said. Companies are building custom silicon not because it’s cheaper, but because silicon designed for their specific data centers delivers performance and efficiency levels that are impossible to achieve with off-the-shelf solutions.

Faster innovation through collaboration

Building custom silicon presents significant challenges: high costs, complexity, and lengthy development cycles. The solution lies in collaborative ecosystems that lower these barriers while accelerating innovation. Pre-integrated subsystems such as Arm Compute Subsystems (CSS), along with shared design resources and validated tool flows, can dramatically shorten development timelines.

Industry examples demonstrate the potential. Some partnerships report saving approximately 80 engineering years through collaborative approaches, with development cycles compressed from years to months. One project went from kickoff to functioning silicon running Linux on 128 cores in just 13 months—remarkable speed for cutting-edge silicon development, Awad said.

The emerging chiplet ecosystem represents another collaborative breakthrough. Industry initiatives such as Arm’s Chiplet System Architecture (CSA) are defining common interfaces and protocols. These enable partners across Korea, Taiwan, China, Japan, and the UK to develop standardized compute building blocks that can be mixed and matched for different applications, creating more flexible and cost-effective development paths. Through programs such as Arm Total Design, these collaborative frameworks connect foundries, design services, IP vendors, and firmware partners to streamline the entire development process.

No hardware without software

Hardware innovation alone won’t unlock AI’s potential. Success also requires a robust software ecosystem built on 15 years of accumulated investment: millions of developers, broad support across open-source projects, and thousands of vendors building compatible solutions.

Today’s leading AI infrastructure deployments rely on mature software stacks that cover Linux distributions, cloud-native technologies, enterprise SaaS applications, and AI/ML frameworks. This maturity allows companies to deploy new hardware architectures confident that their entire technology stack will function seamlessly.

“Hardware is nothing without software,” Awad said, “and this is such an important point, because when we think about all of the accelerators that are being built and devices that are being built, all of the silicon that is being built in support of AI, I’m often asked about the software. I have startups come up to me and say, ‘Hey, I built this great hardware thing.’ And then you ask them, ‘how many people develop software for it?’ The story’s not so strong.”

Embracing the infrastructure transformation

As AI continues its exponential growth, infrastructure challenges will intensify. Organizations cannot simply scale by adding traditional servers—they need purpose-built systems optimized for AI workloads while maintaining the efficiency necessary to operate at unprecedented scale.

The companies and technologies that successfully navigate this transformation will share common characteristics: they’ll pursue breakthrough performance through technology leadership, adopt holistic systems approaches rather than component-level thinking, and build collaborative ecosystems that accelerate innovation while reducing individual risk.

This infrastructure transformation represents both challenge and opportunity. Organizations that prepare now—by understanding these principles and building the right technology foundations—will be positioned to capitalize on AI’s trillion-dollar potential. Those that cling to yesterday’s approaches risk missing the largest technology opportunity of our generation.

The future belongs to those ready to build it, Awad said. The infrastructure transformation has begun.
