Accelerating Development Cycles and Scalable, High-Performance On-Device AI with New Arm Lumex CSS Platform

Mobile devices are evolving into AI-powered tools that adapt, anticipate, and enhance how we interact with the world. However, as on-device AI becomes more advanced and sophisticated, the pressure on mobile silicon is intensifying.

Accelerated product cycles – where each new generation of flagship mobile devices arrives faster than the last – mean silicon providers and OEMs need to deliver innovation on tighter timescales with less margin for error. Advanced packaging techniques to sustain AI performance can be challenging to achieve in area and thermally constrained mobile form factors. Finally, the move to shrinking process nodes, like 3nm, introduces steep design complexities.

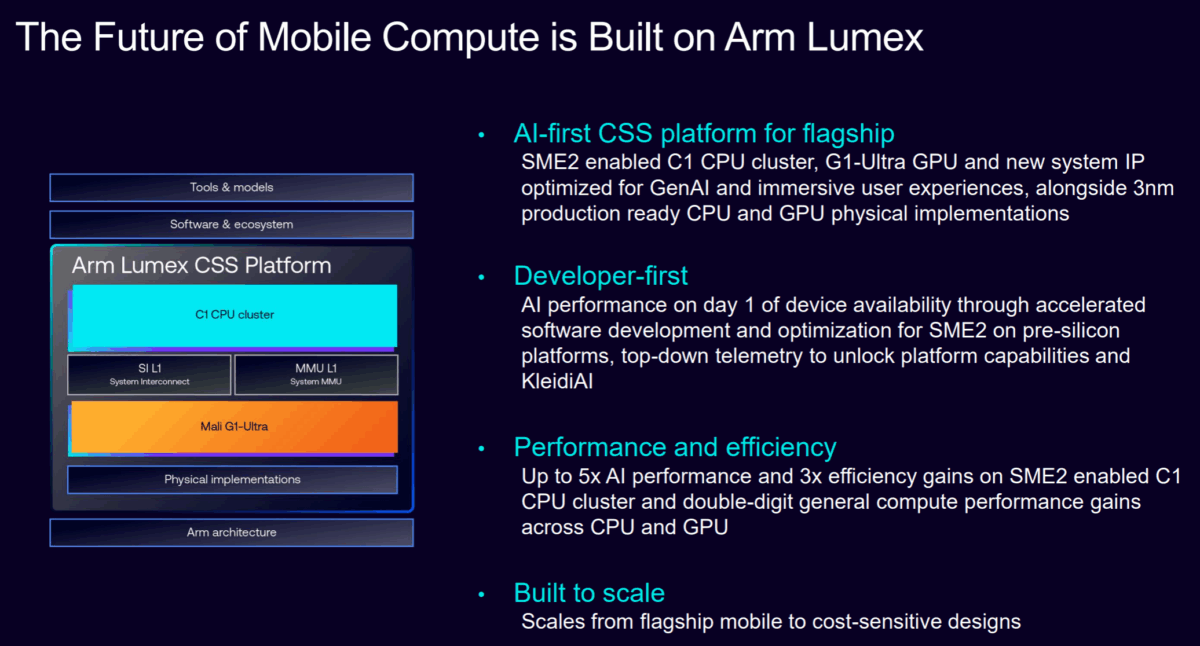

This is exactly why Arm introduced integrated platforms that combine Arm CPU and GPU IP with physical implementations and read-to-deploy software stacks to speed time-to-market and deliver best-in-class performance on the latest cutting-edge process nodes. The next evolution is Arm Lumex: our new purpose-built compute subsystem (CSS) platform to meet the growing demands of on-device AI experiences on flagship mobile and PC devices.

Re-Designed for the AI-first Era

Lumex provides the latest co-designed, co-optimized Arm compute IP with advanced features into a modular and highly configurable platform:

- New Armv9.3 C1 CPU cluster: Delivering IPC performance leadership and built-in Arm Scalable Matrix Extension 2 (SME2) units for more responsive, accelerated AI experiences on the CPU cluster.

- New Arm Mali G1-Ultra: Powering desktop-class visuals and richer gaming experiences with next-generation ray tracing capabilities, alongside faster AI inference.

- New system IP, including Arm SI L1 System Interconnect and Arm MMU L1 System Memory Management Unit: Designed to eliminate system performance bottlenecks and reduce latency across inference-heavy and compute heavy workloads.

- 3nm-ready CPU and GPU physical implementations: Built for industry-leading PPA (power, performance, and area) and a faster path to enabling flagship performance in silicon.

Accelerated Real-World AI Performance Across CPU and GPU Technologies

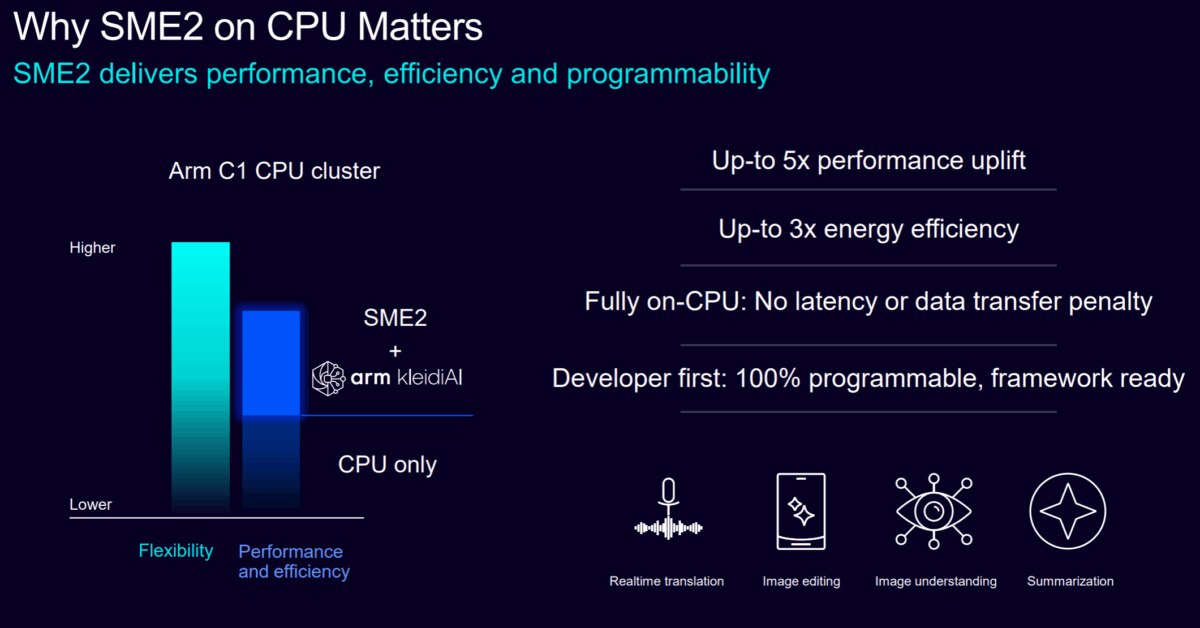

On the CPU side, the SME2-enabled Armv9.3 C1 CPU cluster, combined with Arm KleidiAI providing native support for leading frameworks and runtime libraries, delivers a significant speed-up across a range of AI-based workloads, including classic machine learning (ML) inference, speech and generative AI, compared to the previous generation CPU cluster under the same conditions. This is alongside a 5x AI performance uplift and 3x improved efficiency. These SME2-enabled improvements mean users experience smoother AI-based interactions and longer days of use on their favorite consumer devices.

Moreover, thanks to micro-architectural improvements and tighter integration across cores, the C1 CPU cluster sets a new bar for performance and efficiency, including:

- An average 30 percent performance uplift across six industry-leading performance benchmarks compared to the previous generation CPU cluster under the same conditions;

- An average 15 percent speed-up across leading applications, including gaming and video streaming compared to the previous generation CPU cluster under the same conditions;

- An average 12 percent less power used across daily mobile workloads, like video playback, social media and web browsing compared to previous generation CPU cluster under the same conditions; and,

- Double digit IPC (Instructions per Cycle) performance gains through the Arm C1-Ultra CPU compared to the previous generation Arm Cortex-X925 CPU.

Mali G1-Ultra pushes AI performance and efficiency even further, with 20 percent faster inference across AI and ML networks compared to the previous generation Arm Immortalis-G925 GPU.

For gaming, the Mali G1-Ultra delivers a 2x uplift in ray tracing performance for high-end, desktop-class visuals on mobile thanks to a new Arm Ray Tracing Unit v2 (RTUv2). This is alongside 20 percent higher graphics performance across key industry benchmarks and gaming applications, including Arena Breakout, Fortnite, Genshin Impact, and Honkai Star Rail.

The Scalable System Backbone of Lumex

However, to support AI-first experiences, the mobile system-on-chip (SoC) must evolve across the entire interconnect and memory architecture, and not just the compute IP.

This is why we’re introducing a new scalable System Interconnect which is optimized to meet the bandwidth and latency demands of demanding AI and other compute heavy workloads. This helps ensure leading performance on Lumex without compromising system responsiveness. The new SI L1 System Interconnect features the industry’s most advanced and area efficient system-level cache (SLC), with a 71 percent leakage reduction compared to a standard compiled RAM to minimize idle power consumption.

For our partners, the System Interconnect delivers a highly flexible, scalable solution that can be optimized for different PPA needs across a wide range of mobile and consumer devices. The SI L1 System Interconnect is for flagship mobile devices with a fully integrated optional SLC and support for the Arm Memory Tagging Extension (MTE) feature to deliver best-in-class security. Meanwhile, the Arm NoC S3 Network-on-Chip Interconnect (NoC S3) is targeted for cost-sensitive and non-coherent mobile systems.

Alongside the new Interconnect, we are also introducing the next-gen Arm MMU L1 System Memory Management Unit, which provides secure, cost-efficient virtualization that scales across a broad category of mobile and consumer devices.

Unlocking Industry-Leading PPA with Physical Implementations

Lumex provides production-ready CPU and GPU implementations that are optimized for 3nm and available on multiple foundries, allowing our silicon and OEM partners to:

- Use the implementations as flexible building blocks, so they can focus on differentiation at the CPU and GPU cluster level;

- Achieve compelling frequency and PPA; and,

- Help ensure first-time silicon success when transitioning to the latest 3nm process node.

Immediate Developer Access to Lumex Benefits

To unlock the full potential of Lumex, developers need early access to its capabilities ahead of actual device availability. That’s why we’re introducing a new broad range of software and tools, so developers can prototype and build their AI workloads and utilize the full AI capabilities of the Lumex CSS platform now. These include:

- A complete Android 16 ready software stack, from trusted firmware to the application layer;

- A full, freely available SME2-enabled KleidiAI libraries; and

- New top-down telemetry to analyze app performance, identify bottlenecks and optimize algorithms.

Following a highly successful first year, KleidiAI is now integrated into all major AI frameworks and already shipping across a broad range of applications, devices, and system services, including Android. This work means that everything is ready, so when Lumex-based devices hit the market in the coming months, applications will immediately experience performance and efficiency uplifts for their AI workloads.

On the graphics side, with RenderDoc support coming in future Android releases and unified observability tools like Vulkan counters, Streamline, and Perfetto available through Lumex, developers can analyze workloads in real time, fine-tune for latency, and balance battery and visual quality with precision.

Laying the Foundation for the Next Generation of Mobile Intelligence

Mobile computing is entering a new era that is defined by how intelligence is built, scaled, and delivered. As AI becomes foundational to every experience, platforms must predict, adapt, scale, and accelerate what comes next.

Lumex is designed with that future in mind, with benefits across the entire ecosystem. From OEMs building and scaling innovative devices to developers creating next-gen apps, Lumex makes it easier for our ecosystem to deliver differentiated AI-first platforms and experiences faster at scale, with more intelligent performance.

Any re-use permitted for informational and non-commercial or personal use only.