Unpacking Axion: Google Cloud’s Custom Arm-based Processor Built for the AI Age

Cloud computing demands are skyrocketing, especially in the AI-driven era, pushing developers to seek performance-optimized, energy-efficient solutions that lower total cost of ownership (TCO). We’re dedicated to meeting these evolving needs with Arm Neoverse, which is rapidly becoming the compute platform of choice for developers shaping the future of cloud infrastructure.

Google Cloud, in collaboration with Arm, has designed custom silicon tuned for real-world performance. The result is Axion, Google’s first custom Neoverse-based CPU, built to outperform traditional processors in performance, efficiency, and scale. This collaboration brings greater choice to developers and advances cloud innovation.

Strong Adoption from Google Cloud Customers and Internal Services

Built on the Neoverse V2 platform, Axion processors are engineered to deliver high performance and energy efficiency for a wide range of workloads, including cloud-native applications and demanding AI models. They also power a host of Google Cloud services such as Compute Engine, Google Kubernetes Engine (GKE), Batch, and Dataproc, with Dataflow, AlloyDB, and Cloud SQL currently in preview.

Companies across industries, from content streaming to enterprise-scale data services, are using Arm-based Google Axion processors and discovering substantial improvements in computing efficiency, scalability, and TCO. Google Cloud customers such as ClickHouse, Dailymotion, Databricks, Elastic, loveholidays, MongoDB, Palo Alto Networks, Paramount Global, Redis Labs, and Starburst are already seeing transformative results. Spotify, for example, has observed roughly 250% better performance using Axion-based C4A VMs.

Breaking Down Performance Barriers

Google Axion processors excel in both AI inferencing workloads and general-purpose computing. For AI inferencing, Axion’s specialized optimizations deliver significant performance gains, allowing AI workloads to run faster and more efficiently. This is particularly beneficial for applications such as natural language processing, computer vision, and recommender systems. AI developers can take advantage of Arm Kleidi, a collection of lightweight, highly performant open source libraries. Integrated with leading frameworks, Kleidi significantly improves the performance of AI applications running on Arm with no extra effort from the developer.

Axion processors leverage Arm’s advanced architectural features, enabling developers to deploy complex AI models at scale without sacrificing speed or performance.

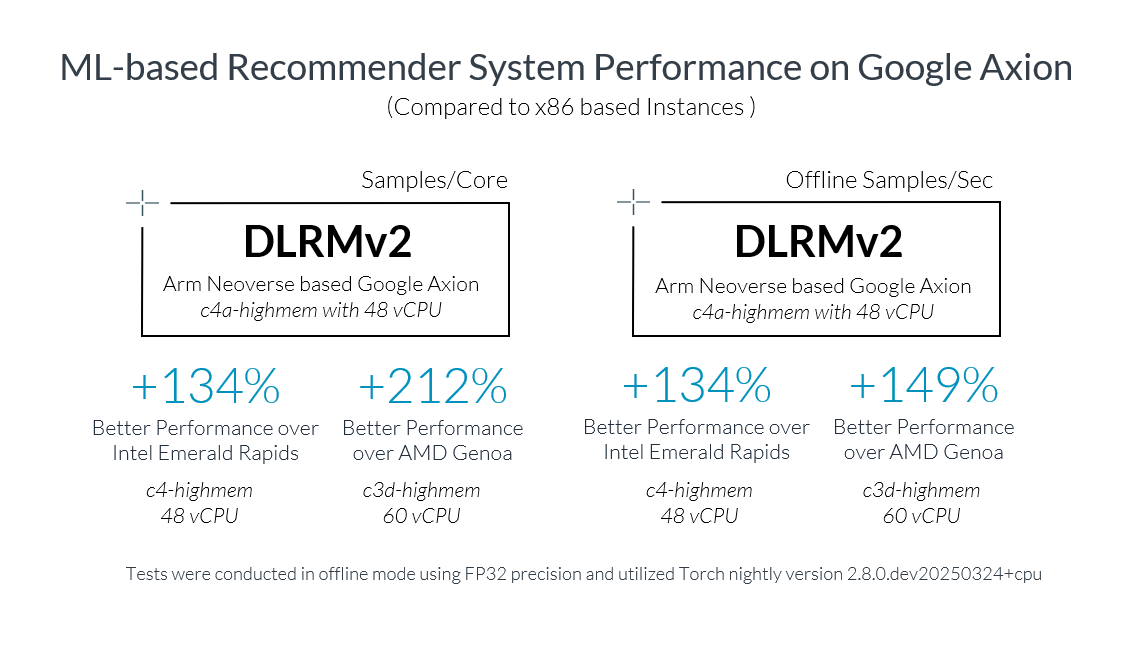

For example, the MLPerf DLRMv2 benchmark for Google Axion demonstrated up to three times better full-precision performance compared to x86-based alternatives, showcasing its advanced capabilities in recommender systems. Many users prefer FP32 precision to avoid the accuracy issues associated with lower-precision formats like INT8, as inaccurate recommendations can lead to lost sales, reduced customer satisfaction, and damage to brand reputation.
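To make the precision trade-off concrete, here is a minimal sketch of what can happen when ranking scores are squeezed into INT8. It assumes a simple symmetric per-tensor quantization scheme and uses made-up scores; it is not how the MLPerf DLRMv2 benchmark or any particular framework quantizes models.

```python
def quantize_int8(values):
    """Symmetric per-tensor INT8 quantization (illustrative scheme only).

    Returns the integer codes and the scale used to map them back to floats.
    """
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Map INT8 codes back to approximate floating-point values."""
    return [c * scale for c in codes]


# Hypothetical recommendation scores; the first two differ only slightly.
scores = [0.9012, 0.9007, 0.1250, -0.4300]

codes, scale = quantize_int8(scores)
restored = dequantize(codes, scale)
max_error = max(abs(a - b) for a, b in zip(scores, restored))

print("codes:", codes)
print("largest rounding error:", max_error)
```

In this toy case the two closest scores land on the same INT8 code, so their relative order is lost after quantization. Full-precision FP32 keeps them distinct, which is the kind of accuracy concern described above.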

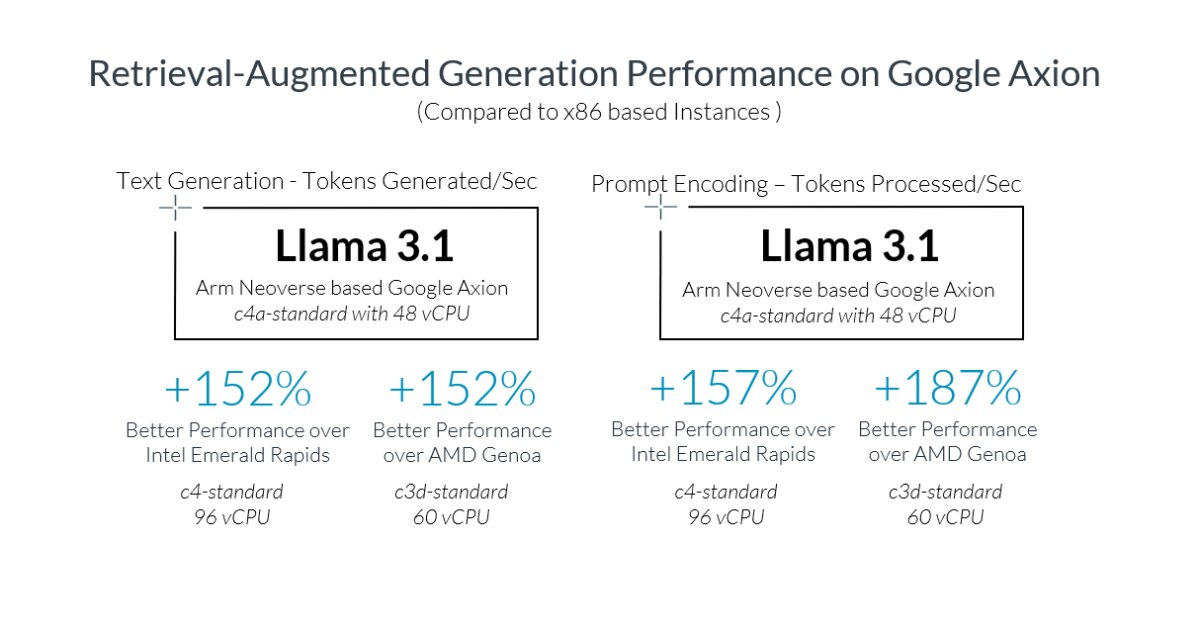

In another example, Retrieval-Augmented Generation (RAG) offers a powerful way to improve the accuracy and relevance of AI chatbots that occasionally provide outdated or inaccurate answers. In our testing, RAG applications running on Google Axion processors delivered up to 2.5x higher performance than x86 alternatives.
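For readers new to RAG, the core idea can be sketched in a few lines: retrieve the most relevant passage for a question, then hand it to the model as context. The example below is a toy illustration only. It uses word-count cosine similarity in place of a real embedding model, the function names (`retrieve`, `build_prompt`) are hypothetical, and it stops at building the prompt rather than calling a language model. It is not the setup used in the testing described above.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, documents, top_k=1):
    """Return the top_k documents most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(
        documents,
        key=lambda d: cosine(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question, documents, top_k=1):
    """Combine retrieved context and the question into one prompt string."""
    context = "\n".join(retrieve(question, documents, top_k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


docs = [
    "C4A VMs are powered by Google Axion processors.",
    "Kleidi is a collection of open source libraries from Arm.",
    "OpenRadioss is a crash and impact simulation application.",
]

print(build_prompt("Which processors power C4A VMs?", docs))
```

A production pipeline would replace the word-count similarity with vector embeddings and a vector store, but the retrieve-then-generate shape stays the same.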

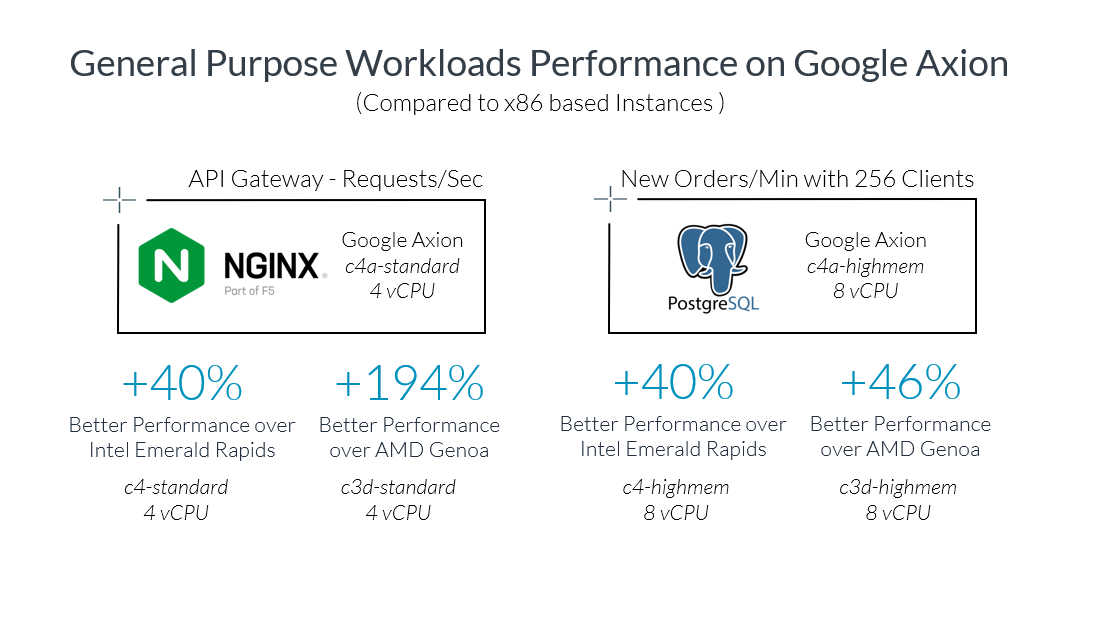

Axion processors also provide a significant performance increase for general-purpose workloads, as the results below show. By optimizing for high throughput and low latency, Axion enables faster application response times, enhanced user experiences, and improved resource utilization, making it ideal for web servers, databases, analytics, and containerized microservices.

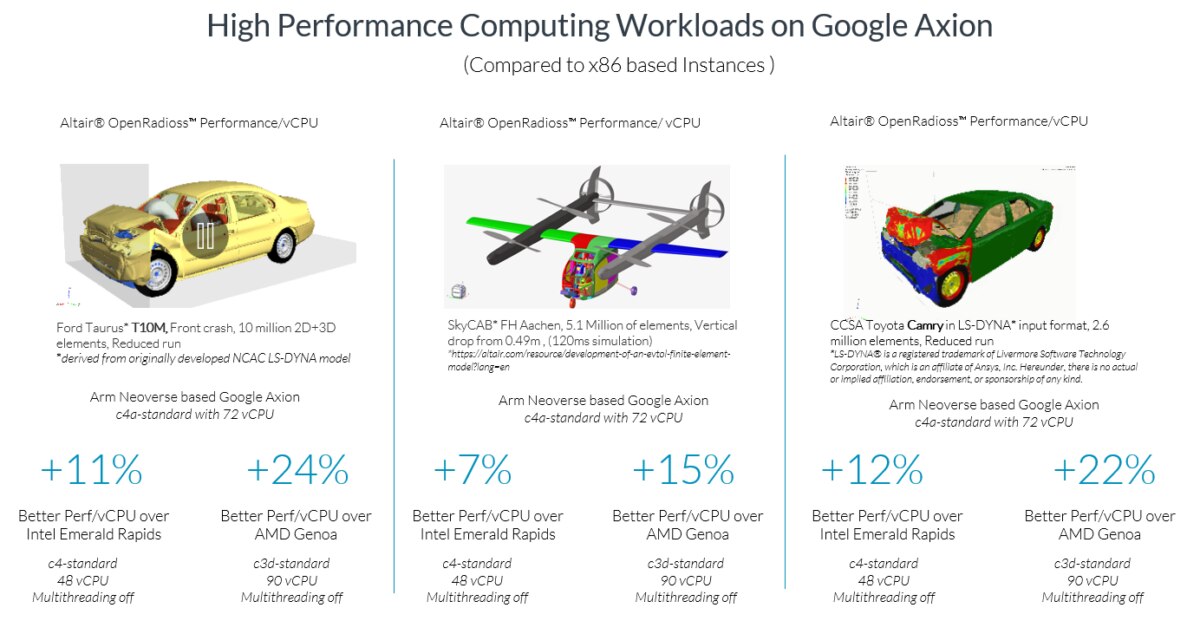

Additionally, Axion-based C4A VMs are particularly well suited for HPC workloads, as they combine the performance of native Neoverse cores with ample memory bandwidth per vCPU. HPC developers can take advantage of a rich ecosystem of open source and commercial scientific computing applications and frameworks available on Neoverse platforms, including Arm Compiler for Linux and Arm Performance Libraries. Our tests on the industry-standard crash and impact simulation application Altair® OpenRadioss™ show significant performance benefits from running on Axion-based C4A VMs.

Accelerating Cloud Migration

To support and accelerate developer adoption of the Arm architecture in the cloud, we recently launched a comprehensive cloud migration initiative. Central to this initiative is our new Cloud Migration Resource Hub, which offers more than 100 detailed Learning Paths designed to guide developers through migrating common workloads across multiple platforms. The list of independent software vendors (ISVs) supporting Axion continues to grow, with prominent players such as Applause, Couchbase, Honeycomb, IBM Instana Observability, Verve, and Viant. The Software Ecosystem Dashboard for Arm keeps developers informed about available and recommended versions of major open-source and commercial software for Neoverse, helping to ensure compatibility and smooth operations from day one.

These resources enable developers interested in adopting or migrating to Google Cloud Axion-based C4A VMs to engage Arm’s active community support channels, including specialized GitHub repositories dedicated to migration. Arm’s cloud migration experts are also available to provide direct engineering assistance and personalized support, particularly for enterprise-scale migrations, helping to ensure a smooth and successful transition to Axion-based solutions.
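As a small illustration of the kind of check a migration often starts with, the sketch below reports whether the current machine is 64-bit Arm. It relies only on Python's standard `platform.machine()` call; the helper name `is_arm64` and the set of accepted names are assumptions made for this example, not part of any Arm or Google Cloud tooling.

```python
import platform

# Names commonly reported for 64-bit Arm: "aarch64" on Linux,
# "arm64" on macOS, and "ARM64" on Windows (compared case-insensitively).
ARM64_NAMES = {"aarch64", "arm64"}


def is_arm64(machine=None):
    """Return True if the given (or current) machine name is 64-bit Arm."""
    name = (machine or platform.machine()).lower()
    return name in ARM64_NAMES


if is_arm64():
    print("Running on a 64-bit Arm machine.")
else:
    print("Not running on 64-bit Arm; detected:", platform.machine())
```

A check like this can gate architecture-specific steps in build or deployment scripts, such as choosing which prebuilt binaries or container images to pull.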

In summary, Google Cloud’s introduction of Axion processors signals a strategic move toward offering more diverse and higher-performing computing options for its customers. By combining Arm’s architecture with Google’s custom silicon design, Axion delivers strong performance and efficiency across workloads, from demanding AI inferencing and HPC applications to general-purpose and cloud-native services. Combined with our cloud migration initiative and a robust software ecosystem, this empowers developers to build the future of computing on Arm.

