LeddarTech Addresses Standardization, Component Integration in Automotive

Consider the challenges today of designing systems to support autonomous functionality in vehicles: Sensors must capture immense amounts of complex data from the real world around them, and compute must be able to comprehend and render that data into useful information for the vehicle and driver. All in an instant.

It’s nothing short of magic.

Companies like LeddarTech are at the forefront of confronting these design challenges. This 14-year-old Canadian company, packed with industry veterans from the automotive, telecom, healthcare and other sectors, is tackling some of the biggest challenges around sensor fusion design today.

LeddarTech’s innovation targets three main pillars: software and data, LiDAR components, and sensors and modules. It also partners with the world’s leading vendors of Arm-powered technologies to serve its OEM and Tier-1 automotive customers.

But when LeddarTech’s engineers emerge from their heads-down design work, they have an important message to share with the rest of us: the industry won’t be able to bring autonomous technology to scale and volume unless it embraces openness, standardization and technological integration.

“I come from the telecom sector where open standards were the law of the land,” says Pierre Olivier, LeddarTech CTO. “You start with the standard and if it doesn’t fulfill what you need, you work with other companies and define the new standard. Of course, you try and push your technology, but you do it with the understanding that it’s going to be multi-platform and multi-vendor.”

Making sense of sensing

LeddarTech, a self-described “old startup” founded in 2007 and headquartered in Quebec City, has evolved into an environmental-sensing solutions company. It currently focuses on the automotive and mobility sectors, although its technologies apply more broadly.

LeddarTech delivers a cost-effective, scalable, and versatile LiDAR development solution to Tier-1 and Tier-2 automotive suppliers and system integrators, enabling them to develop automotive-grade solid-state LiDARs based on the company’s LeddarEngine technology. LeddarTech technology has been deployed in autonomous shuttles, trucks, buses, delivery vehicles, robotaxis, and smart city/factory applications.

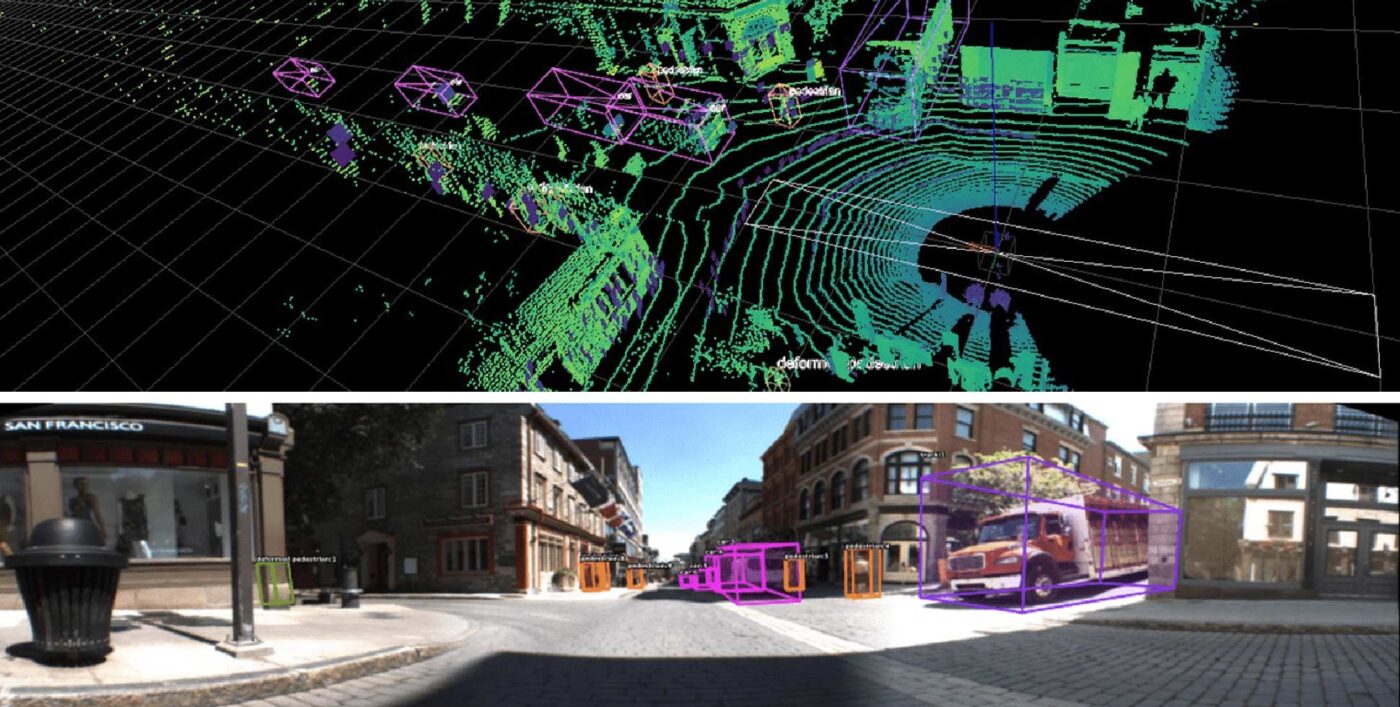

The company’s LeddarVision solution tackles one of the toughest problems in mobility today: fusing data from ultrasonic, radar, LiDAR and camera sensors into a 3D representation of the world around the vehicle. The software stack supports all Society of Automotive Engineers (SAE) autonomy levels, applying artificial intelligence (AI) and computer vision algorithms to fuse raw data for Level 2 applications (using camera and radar data) and Level 3 applications (using camera, radar and LiDAR data).

With 14 years of design experience in the segment, the company is well-positioned to describe the broader challenges on the road to autonomy. It’s a journey that, while promising, is currently marked by issues of scalability, cost and siloed engineering.

Balancing cost, functionality

LeddarTech’s goal is to let its customers use low-cost sensors on the front end and rely on LeddarTech technology to take their autonomous functions to new levels. That goal runs into a cost challenge every team confronts: each design team would like to deploy as many multi-functional sensors as possible to ensure the best results and complete safety. But LiDAR sensors don’t follow Moore’s Law, and a LiDAR sensor can cost 100x as much as a camera sensor. So design teams need to choose their cost battles wisely on the front end.

“The industry’s done a fantastic job industrializing things like parking sensors. They ship hundreds of millions a year, and they’re like $7 a piece,” Olivier says. “There are typically 11 or 12 on every car, so why can’t I use them for smart parking-assist for example? Why do I have to put a high-cost LiDAR to achieve that?”

This is where one of the major barriers to scalability lies at the moment, according to Olivier. Camera sensors are standardized today. Serializer and training formats are standard, “so I can pretty much mix and match this camera for the application, take them into the same vision processing system, and they are compatible,” he says.

LiDAR standards, however, are far less mature. LiDAR vendors tend to be “protective of their turf,” which contributes to siloed sensor systems in vehicle design that can limit both performance and cost savings. Here, the solution isn’t smarter LiDAR but “dumber” LiDAR: treat those sensors just like cameras, taking in their data and handling it in a standardized way, Olivier says.

“We’re trying to get the industry to stop building islands of functionality and start building integrated systems that can be multi-functional,” he says. “Today you have the adaptive cruise control, lane-keep assist, you have the parking functionality, and they’re all a hodgepodge of different sensors, different processors, and so on.”

That’s one of the driving forces behind the company’s LeddarCore LCA2 SoC, a customizable, integrated LiDAR solution for high-volume manufacturing.

This emphasis on standardization and integration can also advance functional safety.

“So far, the industry has said of safety, ‘OK, we’ll address this when we address it.’ But, as you guys know very well, you can’t retrofit safety into a design. It needs to be designed from the beginning. That’s what we do with our SoCs, and that’s what we do with our software,” Olivier says.

The need for openness

When it comes to supporting an open approach to design, LeddarTech walks the walk in other ways, too. In February, for example, it released its PixSet data set for researchers and academics to use in their ADAS and autonomous-driving work. The set contains data from a full sensor suite, including cameras, LiDAR, radar and an inertial measurement unit (IMU), and includes full-waveform data from Leddar Pixell, a 3D solid-state flash LiDAR sensor.

Recorded in densely populated Canadian areas such as Quebec City and Montreal, the scenes take place in urban and suburban environments as well as on the highway, in various weather (e.g., sunny, cloudy, rainy) and illumination (e.g., day, night, twilight) conditions. The result is a wide variety of real-world driving situations for autonomous-driving research.

Why would a company in such a highly competitive sector give away such data? To enable the industry to move more quickly to full autonomy.

“We believe that one of the reasons why the industry is late in delivering fully autonomous vehicles is that everybody tries to do it on their own,” Olivier said. Today, more than 60 companies collect data in San Francisco, driving over the same streets and collecting the same data over and over, he said.

“Maybe if some of them pooled their data and started to collect elsewhere then it would further the position of the industry,” he added. “As companies, there’s so much we can do, but there’s much more all the academics and researchers can do in improving the basics like neural network architectures and so on, and we want to enable that.”

A second motivation is that LeddarTech wants to unlock LiDAR as a technology. Before February, no LiDAR vendor had released their data. “And thirdly,” he added, “we feel a debt to some of these people who built this, and we want to pay back some of that.”

Going forward, there is enormous opportunity for environmental sensing in software and hardware outside the automotive sector. LeddarTech technology, for example, is already used in a broad array of applications, including free-flow tolling systems, auto-focus functions in 4K cinema cameras, and milk storage containers in New Zealand.

Olivier says LeddarTech has shipped more LiDAR technology than all other vendors combined, and that some of its systems have been operating 24 hours a day for 10 years.

“There are huge opportunities for these applications,” he said.

Meanwhile in automotive, LeddarTech continues to evolve its technology and beat the drum for integration, openness and standardization.

“This is what the autonomous driving industry needs to succeed,” he added.

Arm in Automotive

As we move into a new era of transportation, Arm is driving innovation by architecting energy-efficient intelligence for tomorrow’s vehicles, with new levels of connectivity, efficiency and autonomy. Together, automotive and Arm are building the future of mobility.

Any re-use permitted for informational and non-commercial or personal use only.