NexOptic Enables Endpoint AI Imaging with Arm

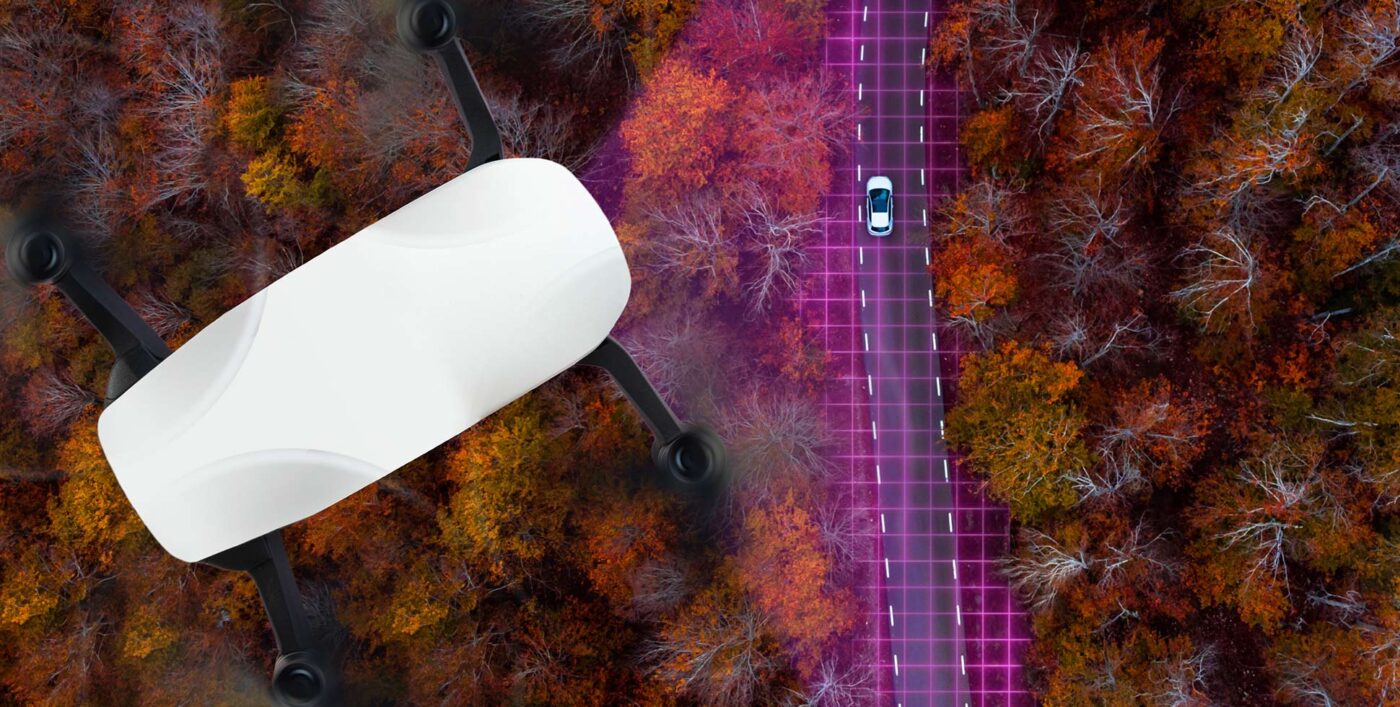

The way we see the world and everything around us shapes our experience. Cameras are in our smartphones, vehicles, cities and homes. And as these smart cameras become more advanced, so do our expectations of how and where they can be used.

Yet one of the fastest-growing segments of the industry involves images that no human will ever see. Every day, new algorithms and techniques are being developed that enable computers to make sense of image data without human involvement. We call this ‘intelligent imaging’, and it’s now found in a wide range of applications, from Internet of Things (IoT) devices to medical imaging, robotics and vehicle autonomy.

At NexOptic Technologies, we’ve developed a suite of intelligent imaging solutions called Aliis. Aliis gives OEMs, system integrators, and software developers access to the rapidly evolving field of intelligent imaging through patent-pending algorithms engineered to deliver a sharper, clearer view for products and machines.

Aliis enables us to address a wide range of consumer and industry problems and demands. Central to this is deep learning.

Aliis: Intelligent imaging, powered by deep learning

For decades, deep learning technology lay dormant due to limitations in hardware and data availability. Recent advances in computation, coupled with the availability of high-quality data, have led to a resurgence of this machine learning (ML) technique.

Deep learning is already disrupting numerous disciplines of computer science, achieving state-of-the-art results in areas ranging from imaging and natural language processing to physical modelling, protein folding, game-playing and robotics.

Meanwhile, the proliferation of image sensors has led to us interacting with cameras and imaging products almost constantly in our daily lives. At the intersection of these two technologies lies an opportunity to create a new paradigm of intelligent machines, one where sensing and computation are unified.

Already we’ve seen an explosion of research and commercial activity as academics and entrepreneurs alike set out to stake claims in the frontier of this budding field. For the adventurous, intelligent imaging could be the modern-day gold rush.

Deep learning algorithms are so well-suited to imaging tasks that they have delivered state-of-the-art performance in areas such as classification, object detection, segmentation and error detection. The versatility of deep learning cannot be overstated.

Yet there’s a catch: increasingly, we’re finding that the greatest potential to solve consumer and industry problems lies in enabling intelligent imaging at the edge and endpoint, where compute and power budgets are tightest.

Intelligence at the edge and endpoint

Performing complex deep learning in endpoint devices, however, comes at significant computational cost. That’s where much of NexOptic’s optimization work is happening: through software techniques such as model compression and distillation, network quantization and mixed precision, and large-scale architecture searches to find efficient and accurate deep learning models.
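Of the software techniques listed above, quantization is the easiest to illustrate. The following is a minimal sketch of symmetric, per-tensor post-training quantization of float32 weights to int8, written in plain Python for readability. It is a generic textbook version, not NexOptic's pipeline, and the function names are hypothetical.

```python
from typing import List, Tuple


def quantize_symmetric_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map float weights to int8 values in [-127, 127] with one shared scale.

    Hypothetical helper: symmetric per-tensor quantization, the simplest scheme.
    """
    max_abs = max(abs(w) for w in weights)
    # One scale for the whole tensor; fall back to 1.0 for an all-zero tensor.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # round() returns an int; clamp guards against tiny floating-point overshoot.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights; error per weight is at most scale / 2."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.42, -1.37, 0.05, 0.88, -0.61, 1.27]
    q, scale = quantize_symmetric_int8(weights)
    restored = dequantize(q, scale)
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    print("int8 values:", q)
    print(f"scale={scale:.5f}, max abs error={max_err:.5f}")
    # float32 uses 4 bytes per weight; int8 uses 1 byte plus a single shared scale.
    print("storage:", 4 * len(weights), "bytes (float32) ->", len(weights), "bytes (int8) + scale")
```

The trade-off is a 4x cut in weight storage and cheaper integer arithmetic in exchange for a bounded rounding error per weight. Production toolchains add refinements such as per-channel scales, activation calibration and quantization-aware training, and mixed precision keeps sensitive layers at higher precision.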

On the hardware side, we’re exploring everything from specialized instruction sets to purpose-built chips, sometimes referred to as Neural Processing Units (NPUs), such as the Arm Ethos-N range.

These chips exploit the homogeneous nature of deep learning workloads through parallelism and optimized data flows to achieve greater power efficiency.
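To make the "homogeneous workload" point concrete: in a convolutional layer, every output value is produced by the same multiply-accumulate (MAC) pattern applied to a different window of the input, and no output depends on any other. The sketch below uses plain Python, hypothetical function names, and a single-channel "valid" convolution with no padding or stride. It computes the same layer serially and through a thread pool and checks that the results match. It illustrates the property NPUs exploit; it says nothing about how Ethos-N hardware actually schedules work.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List

Matrix = List[List[float]]


def mac_window(image: Matrix, kernel: Matrix, row: int, col: int) -> float:
    """One output pixel: the identical MAC pattern, applied at (row, col).

    Like most deep learning frameworks, this computes cross-correlation
    (the kernel is not flipped).
    """
    k = len(kernel)
    acc = 0.0
    for i in range(k):
        for j in range(k):
            acc += image[row + i][col + j] * kernel[i][j]
    return acc


def conv2d_serial(image: Matrix, kernel: Matrix) -> Matrix:
    k = len(kernel)
    out_h, out_w = len(image) - k + 1, len(image[0]) - k + 1
    return [[mac_window(image, kernel, r, c) for c in range(out_w)] for r in range(out_h)]


def conv2d_parallel(image: Matrix, kernel: Matrix, workers: int = 4) -> Matrix:
    k = len(kernel)
    out_h, out_w = len(image) - k + 1, len(image[0]) - k + 1
    coords = [(r, c) for r in range(out_h) for c in range(out_w)]
    # Each output is independent, so the windows can be handed out in any order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        values = list(pool.map(lambda rc: mac_window(image, kernel, rc[0], rc[1]), coords))
    # pool.map preserves input order, so the flat list can be reshaped row by row.
    return [values[r * out_w:(r + 1) * out_w] for r in range(out_h)]


if __name__ == "__main__":
    image = [[float(5 * r + c) for c in range(5)] for r in range(5)]
    kernel = [[1.0, 0.0, -1.0] for _ in range(3)]  # simple horizontal-gradient filter
    serial = conv2d_serial(image, kernel)
    parallel = conv2d_parallel(image, kernel)
    print(serial)
    print("identical:", serial == parallel)
```

Python threads will not make this faster because of the global interpreter lock; the point is that the per-pixel work is uniform and independent, which is what lets dedicated hardware run many MAC units side by side and reuse the same kernel weights across windows.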

Together, these software and hardware techniques have propelled deep learning to the edge and endpoint in a very short amount of time, presenting an opportunity for low-power, high-performance intelligent imaging solutions in devices without the need for cloud-based processing.

Aliis: an intelligent imaging neural network

The Aliis neural network processes raw images and video in real time, working pixel by pixel to improve characteristics such as resolution, lighting, sharpness and contrast. Using Aliis, you can expect dramatic reductions in image noise, glare and motion blur.

Additionally, it enhances long-range image stabilization by enabling shutter speeds up to 600x faster, while reducing file size and bandwidth requirements by upwards of 10x for storage and streaming applications.

Central to Aliis’ design is its ability to be coupled with other deep learning algorithms for enhanced performance. As an example, Aliis boosted the performance of a commercially available image classifier by over 400 percent in low-light environments. Applications such as segmentation, visual SLAM, object detection and collision avoidance will also benefit from the transformed vision stream Aliis provides.

Catching the deep learning wave

Manoeuvring in the fast-paced and unfamiliar environment of deep learning technology can be difficult, not to mention out of reach for many groups. The NexOptic AI team draws on the numerous advances and techniques developed in the field to deliver a simplified intelligent imaging entry point at the edge and endpoint. Importantly, NexOptic has partnered with Arm to ensure customers can get the most from their hardware of choice.

For example, specialized NPU technology like that found in Arm’s Ethos processors will help deliver the highest throughput and most efficient processing for ML inference in endpoint devices. Coupled with the robust and adaptable Arm “AE” image signal processing IP, end customers can build the AI-powered imaging systems of the future.

What was once relegated to the lab is now a rapidly growing field that shows no signs of slowing. A modern tech gold rush? To put it in perspective, the AI computer vision industry is projected to reach USD 25 billion by 2023, growing by 47 percent each year.

The technology has the potential to augment the experiences that shape our lives and change the way we interact with the world. It’s powering our factories, driving our cars, keeping our homes and cities safe, and it’s enabling us to better capture and share life’s milestones and experiences.

Whereas yesterday’s digital image sensors allowed machines to see for the first time, today’s hardware and software are imbuing our machines with vision and perception.

As this technology is pushed to the edge and endpoint it will open new and exciting applications, offering designers an opportunity to breathe new life into their product offerings. Integrating intelligence into your product can immediately benefit your imaging application, differentiating your products so they remain relevant in this rapidly evolving field.

Arm AI Ecosystem Partner Program

The AI Ecosystem Partner Program works with best-of-breed companies delivering AI solutions and technologies for every market vertical. Whether it’s IIoT, smart home, wearables, medical or green tech, together with Arm they are shaping the AI industry for years to come.
