Arm Enables Secure Computer Vision for Smart Cameras

Computer vision leverages artificial intelligence (AI) to enable devices such as smart cameras to interpret and understand what is happening in an image. Recreating a sensor as powerful as the human eye with technology opens up a wide and varied range of use cases for computers to perform tasks that previously required human sight – so it’s no wonder that computer vision is quickly becoming one of the most important ways to capture and act on real-world data within the Internet of Things (IoT).

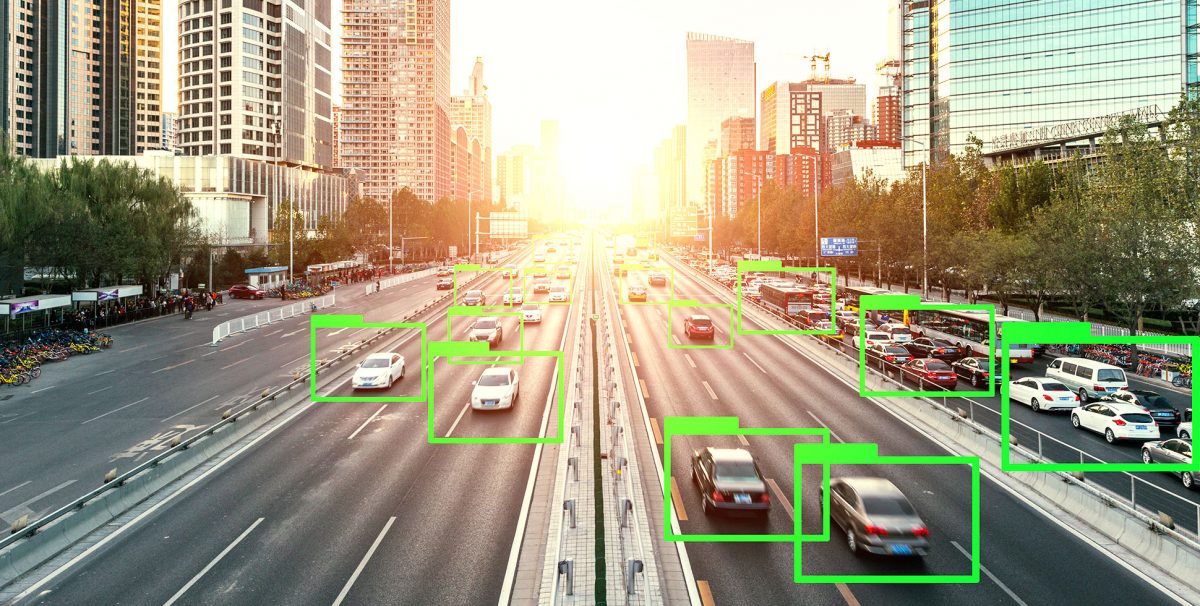

Smart cameras now use computer vision in a range of business and industrial applications, from counting cars in parking lots to monitoring footfall in retail stores or spotting defects on a production line. And in the home, smart cameras can tell us when a package has been delivered, whether the dog escaped from the back yard or when our baby is awake.

Across the business and consumer worlds, the adoption of smart camera technology is growing exponentially. In its 2020 report “Cameras and Computing for Surveillance and Security”, market research and strategy consulting company Yole Développement estimates that for surveillance alone, there are approximately one billion cameras across the world. That number of installations is expected to double by 2024.

This technology features key advancements in security, heterogeneous computing, image processing and cloud services – enabling future computer vision products that are more capable than ever.

Smart camera security is top priority for computer vision

IoT security is a key priority and challenge for the technology industry. It’s important that all IoT devices are secure from exploitation by malicious actors, but it’s even more critical when that device captures and stores image data about people, places and high-value assets.

Unauthorized access to smart cameras tasked with watching over factories, hospitals, schools or homes would not only be a significant breach of privacy, it could also lead to untold harm – from plotting crimes to the leaking of confidential information. Compromising a smart camera could also provide a gateway, giving a malicious actor access to other devices within the network – from door, heating and lighting controls to control over an entire smart factory floor.

We need to be able to trust smart cameras to maintain security for us all, not open up new avenues for exploitation. Arm has embraced the importance of security in IoT devices for many years through products such as Arm TrustZone, which is available for both Cortex-A and Cortex-M processors.

In the future, smart camera chips based on the Armv9 architecture will add further security enhancements for computer vision products through the Arm Confidential Compute Architecture (CCA).

Beyond this, Arm promotes common security standards and best practices such as PSA Certified and PARSEC. These are designed to ensure that all future smart camera deployments have security built in, using advanced security and data encryption techniques to protect data from the moment the image sensor first records the scene through to storage, whether that data is stored locally or in the cloud.

Endpoint AI powers computer vision in smart camera devices

The combination of image sensor technology and endpoint AI is enabling smart cameras to infer increasingly complex insights from the vast amounts of computer vision data they capture. New machine learning capabilities within smart camera devices meet a diverse range of use cases – such as detecting individual people or animals, recognizing specific objects and reading license plates. All of these applications for computer vision require ML algorithms running on the endpoint device itself, rather than sending data to the cloud for inference. It’s all about moving compute closer to data.

For example, a smart camera deployed at a busy intersection could use computer vision to determine the number and type of vehicles waiting at a red signal at various hours throughout the day. By processing its own data and inferring meaning using ML, the smart camera could adjust the signal timings to reduce congestion and limit the build-up of emissions, without human involvement.
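As a rough illustration of the idea, the sketch below splits a fixed signal cycle between approaches in proportion to the vehicle counts a camera might report. The function name `allocate_green_times`, the 90-second cycle and the 10-second minimum green are all assumptions made for this example; they do not describe any Arm product or real traffic controller.

```python
def allocate_green_times(vehicle_counts, cycle_s=90.0, min_green_s=10.0):
    """Split a signal cycle between approaches in proportion to queue length.

    vehicle_counts: dict mapping approach name -> vehicles counted by the camera.
    Every approach gets at least min_green_s; the remaining time is shared
    in proportion to the counts. All values here are hypothetical.
    """
    if not vehicle_counts:
        raise ValueError("at least one approach is required")
    spare = cycle_s - min_green_s * len(vehicle_counts)
    if spare < 0:
        raise ValueError("cycle too short for the minimum green times")
    total = sum(vehicle_counts.values())
    greens = {}
    for approach, count in vehicle_counts.items():
        # With no vehicles anywhere, share the spare time equally.
        share = count / total if total else 1 / len(vehicle_counts)
        greens[approach] = min_green_s + spare * share
    return greens


# Hypothetical counts produced by on-device inference.
counts = {"north": 12, "south": 4, "east": 0, "west": 4}
timings = allocate_green_times(counts)
```

With these made-up counts, the busiest approach receives the longest green phase while the empty one falls back to the minimum. A real deployment would also need safety interlocks and pedestrian phases, which this sketch ignores.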

Arm’s investment in AI for applications in endpoints and beyond is demonstrated through its range of Ethos machine learning processors: highly scalable and efficient NPUs capable of supporting a range of 0.1 to 10 TOP/s through many-core technologies. Software also plays a vital role in ML and this is why Arm continues to support the open-source community through the Arm NN SDK and TensorFlow Lite for Microcontrollers (TFLM) open-source frameworks.

These machine learning frameworks build on existing neural networks and on power-efficient Arm Cortex-A CPUs, Mali GPUs and Ethos NPUs, as well as on Arm Compute Library and CMSIS-NN – collections of low-level machine learning functions optimized for Cortex-A CPU, Cortex-M CPU and Mali GPU architectures.

The Armv9 architecture supports enhanced AI capabilities, too, by providing accessible vector arithmetic (individual arrays of data that can be computed in parallel) via Scalable Vector Extension 2 (SVE2). This enables scaling of the hardware vector length without having to rewrite or recompile code. In the future, extensions for matrix multiplication (a key element in enhancing ML) will push the AI envelope further.
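The vector-length-agnostic idea can be illustrated with a toy model in plain Python. This is not SVE2 code and uses no Arm intrinsics; `vla_add` and its `vector_len` parameter are hypothetical names standing in for a loop whose chunk size is discovered at run time, with a predicate masking off the unused lanes in the final partial chunk.

```python
def vla_add(a, b, vector_len):
    """Add two equal-length lists in chunks of `vector_len` lanes.

    Toy model of a vector-length-agnostic loop: the loop body never
    hard-codes the lane count, so the same code gives the same result
    whatever vector length the simulated hardware reports.
    """
    if len(a) != len(b):
        raise ValueError("inputs must have the same length")
    if vector_len < 1:
        raise ValueError("vector_len must be at least 1")
    n = len(a)
    out = [0] * n
    for base in range(0, n, vector_len):
        # Predicate: True for lanes that still map to valid elements.
        predicate = [base + lane < n for lane in range(vector_len)]
        for lane, active in enumerate(predicate):
            if active:
                out[base + lane] = a[base + lane] + b[base + lane]
    return out


a = list(range(10))
b = [10 * x for x in a]
# Same source, different simulated vector lengths (in lanes).
results = [vla_add(a, b, lanes) for lanes in (4, 8, 16)]
```

All three calls return the same list, which is the point: only the number of loop iterations changes with the vector length, not the code or its result.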

Smart cameras connected in the cloud

Cloud and edge computing are also helping to expedite the adoption of smart cameras. Traditional CCTV architectures saw camera data stored on-premises via a Network Video Recorder (NVR) or a Digital Video Recorder (DVR). This model had numerous limitations, from the vast amount of storage required to the limited number of physical connections on each NVR.

Moving to a cloud-native model simplifies the rollout of smart cameras enormously: any number of cameras can be provisioned and managed via a configuration file downloaded to the device. There’s also a virtuous cycle at play: data from smart cameras can now be used to train models in the cloud for specific use cases, so that cameras become even smarter. And the smarter they become, the less data they need to send upstream.

The use of cloud computing also enables automation of processes via AI sensor fusion by combining computer vision data from multiple smart cameras. Taking our earlier example of the smart camera placed at a road intersection, cloud AI algorithms could combine data from multiple cameras to constantly adjust traffic light timings holistically across an entire city, keeping traffic moving.

Arm enables the required processing continuum from cloud to endpoint. Cortex-M microcontrollers and Cortex-A processors power smart cameras, with Cortex-A processors also powering edge gateways. Cloud and edge servers harness the capabilities of the Neoverse platform.

New hardware and software demands on smart cameras

The compute needs for computer vision devices continue to grow year over year, with ultra-high resolution video capture (8K 60fps) and 64-bit (Armv8-A) processing marking the current standard for high-end smart camera products.

As a result, the system-on-chip (SoC) within next-generation smart cameras will need to embrace heterogeneous architectures, combining CPUs, GPUs and NPUs alongside dedicated hardware for functions like computer vision, image processing, video encoding and decoding.

Storage, too, is a key concern: While endpoint AI can reduce storage requirements by processing images locally on the camera, many use cases will require that data be retained somewhere for safety and security – whether on the device, in edge servers or in the cloud.

To store high-resolution computer vision data efficiently, newer video codecs such as H.265 and AV1 are becoming the de facto choice.
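A back-of-the-envelope calculation shows why encoding matters at these resolutions. The sketch below estimates the uncompressed data rate of 8K 60fps video and the storage needed per day. The 12 bits per pixel figure assumes 8-bit 4:2:0 chroma subsampling, and the 50 Mbit/s encoded bitrate is a hypothetical value chosen purely for illustration, not a property of H.265 or AV1.

```python
def raw_video_rate_bps(width, height, fps, bits_per_pixel):
    """Uncompressed video data rate in bits per second."""
    return width * height * fps * bits_per_pixel


def storage_per_day_bytes(bitrate_bps):
    """Bytes needed to store 24 hours of video at a constant bitrate."""
    seconds_per_day = 24 * 60 * 60
    return bitrate_bps * seconds_per_day / 8


# 8K (7680x4320) at 60 fps; 12 bits per pixel assumes 8-bit 4:2:0 video.
raw_bps = raw_video_rate_bps(7680, 4320, 60, 12)
raw_tb_per_day = storage_per_day_bytes(raw_bps) / 1e12

# Hypothetical encoded bitrate, chosen only for illustration.
encoded_bps = 50_000_000
encoded_gb_per_day = storage_per_day_bytes(encoded_bps) / 1e9

print(f"raw: {raw_bps / 1e9:.1f} Gbit/s, {raw_tb_per_day:.0f} TB/day")
print(f"encoded: {encoded_gb_per_day:.0f} GB/day")
```

Under these assumptions a single uncompressed stream comes to roughly 24 Gbit/s, or about 258 TB per day, while the assumed encoded stream needs 540 GB per day. Actual encoded bitrates depend heavily on the codec settings and scene content.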

New use cases driving continuous innovation

Overall, the demands of these new use cases are driving the need for continuous improvement in computing and imaging technologies across the board.

When we think about image-capturing devices such as CCTV cameras today, we should no longer imagine grainy images of barely recognizable faces passing by a camera. Advancements in computer vision – more efficient and powerful compute coupled with the intelligence of AI and machine learning – are making smart cameras not just image sensors but image interpreters. This bridge between the analog and digital worlds is opening up new classes of applications and use cases that were unimaginable a few years ago.

Arm enables smart cameras and computer vision

Across security, computing, imaging, and artificial intelligence, Arm technology enables a new future of powerful, secure and smart vision products.

Any re-use permitted for informational and non-commercial or personal use only.