Computational Storage: A New Way to Boost Performance

Computational storage, the ability to perform selected computing tasks within or adjacent to a storage device rather than on the central processor of a server or computer, will move toward mainstream acceptance over the next few years.

What is computational storage?

Computational storage, also known as in-situ processing or in-storage compute, brings high-performance compute to traditional storage devices. Data can be processed and analyzed where it is generated and stored, empowering users and organizations to extract valuable, actionable insights at the device level.

At a high level, computational storage involves putting a full-fledged computing system, with a central processing unit (CPU), memory (DRAM) and input/output (I/O), inside a solid-state drive (SSD) or next to an SSD to perform tasks on behalf of the system’s main processor.

Computational storage tasks typically include highly parallel functions such as encryption and video encoding. In addition, some solutions can run full OS instances on the device, allowing an even greater set of applications and tasks to run without host involvement.

Computational storage processors (CSPs) function more like co-processors charged with handling defined tasks than like offload engines, which typically receive workloads from the CPU.

Some organizations are also considering computational drives for AI/ML facial recognition. Computational storage drives (CSDs) could look for approximate matches by comparing imagery from live video streams against a database loaded onto the drive. Those approximate matches are then sent to the more powerful host CPU for final confirmation. (One demonstration loaded an entire Greenplum database into a computational drive.)
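The two-stage flow described above can be sketched in Python. This is a minimal illustration under stated assumptions, not any vendor's API: faces are assumed to be already reduced to fixed-length embedding vectors, the drive stage is assumed to approximate by comparing only a short prefix of each vector, and the function names, thresholds and sample data are all hypothetical.

```python
import math
from typing import Dict, List, Sequence

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def drive_stage(query: Vector, database: Dict[str, Vector],
                prefix_dims: int = 2, loose: float = 0.8) -> Dict[str, Vector]:
    # Stage 1, imagined as running on the CSD (hypothetical): compare only
    # the first `prefix_dims` components (cheap but approximate) and keep
    # every entry that clears a permissive threshold.
    q = query[:prefix_dims]
    return {name: vec for name, vec in database.items()
            if cosine(q, vec[:prefix_dims]) >= loose}


def host_stage(query: Vector, candidates: Dict[str, Vector],
               strict: float = 0.95) -> List[str]:
    # Stage 2, on the host CPU: full-length comparison applied only to
    # the short candidate list forwarded by the drive.
    return sorted(name for name, vec in candidates.items()
                  if cosine(query, vec) >= strict)


# Hypothetical 4-dimensional embeddings.
database = {
    "alice": [1.0, 0.0, 1.0, 0.0],
    "bob":   [1.0, 0.0, 0.0, 1.0],
    "carol": [0.0, 1.0, 1.0, 0.0],
}
query = [1.0, 0.0, 1.0, 0.0]

candidates = drive_stage(query, database)  # alice and bob pass the coarse filter
print(host_stage(query, candidates))       # ['alice']
```

A real deployment would use a trained face-embedding model and an index rather than a linear scan; the sketch only shows the division of labor, in which the drive forwards a short candidate list instead of the whole database.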

Why adopt computational storage?

Where do you think most of the energy is spent in computing? Is it in crunching the numbers? Would you be surprised to learn that, according to some estimates, 62 percent of the energy consumed in computing is spent moving data: from storage to DRAM, from DRAM to the CPU, or from the CPU to I/O devices? Computational storage reduces these transfers, and the energy and data traffic associated with them, by keeping many operations confined to the drive.
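A toy model makes the data-movement point concrete. The Python sketch below counts the bytes that cross the storage interface when the same filter runs on the host versus on the drive. The record size, match rate and function names are hypothetical, and the model tracks transfer volume only, not energy per byte.

```python
from typing import Callable, List, Tuple

Record = bytes


def host_side_filter(records: List[Record],
                     keep: Callable[[Record], bool]) -> Tuple[List[Record], int]:
    # Conventional path: every record is read across the interface,
    # then the host CPU discards the ones it does not need.
    moved = sum(len(r) for r in records)
    return [r for r in records if keep(r)], moved


def drive_side_filter(records: List[Record],
                      keep: Callable[[Record], bool]) -> Tuple[List[Record], int]:
    # Computational-storage path: the drive applies the predicate
    # locally and ships only the matching records to the host.
    result = [r for r in records if keep(r)]
    moved = sum(len(r) for r in result)
    return result, moved


def is_hit(record: Record) -> bool:
    return record.startswith(b"HIT")


# 10,000 hypothetical 512-byte records; 1 in 100 matches the filter.
records = [(b"HIT" if i % 100 == 0 else b"MISS").ljust(512, b"\0")
           for i in range(10_000)]

host_result, host_bytes = host_side_filter(records, is_hit)
drive_result, drive_bytes = drive_side_filter(records, is_hit)
print(host_bytes, drive_bytes)  # 5120000 51200
```

Both paths return the same records; only the volume moved differs, here by the filter's selectivity (100x).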

Computational storage can also boost performance, reduce latency, and shrink equipment footprint. Think of a two-socket server with 16 drives. An ordinary server might have access to 64 computing cores (two sockets filled with 32-core processors). With computational storage, the server would gain 64 or more cores in the CSDs (assuming 4 or more cores per drive) running in parallel, for 128 or more in all, with the primary CPUs freed up to take on application workloads.
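The core-count arithmetic can be checked with a small Python helper. The function name is illustrative, and cores per drive is a parameter because the text gives only a lower bound of four.

```python
from typing import Dict


def total_cores(sockets: int, cores_per_socket: int,
                drives: int, cores_per_drive: int) -> Dict[str, int]:
    # Host cores come from the CPU sockets; CSD cores scale with the
    # number of computational drives installed.
    host = sockets * cores_per_socket
    csd = drives * cores_per_drive
    return {"host": host, "csd": csd, "total": host + csd}


print(total_cores(sockets=2, cores_per_socket=32, drives=16, cores_per_drive=4))
# {'host': 64, 'csd': 64, 'total': 128}
```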

Likewise, IoT devices can become smaller, smarter, and more energy-efficient all at the same time with drives that can combine memory, storage, and computing within the same small enclosure.

What does the underlying architecture look like?

First-generation computational storage devices were based around field-programmable gate arrays (FPGAs). While effective, FPGAs are typically more expensive and consume more energy than a CPU. FPGAs also need to be programmed to run specific applications.

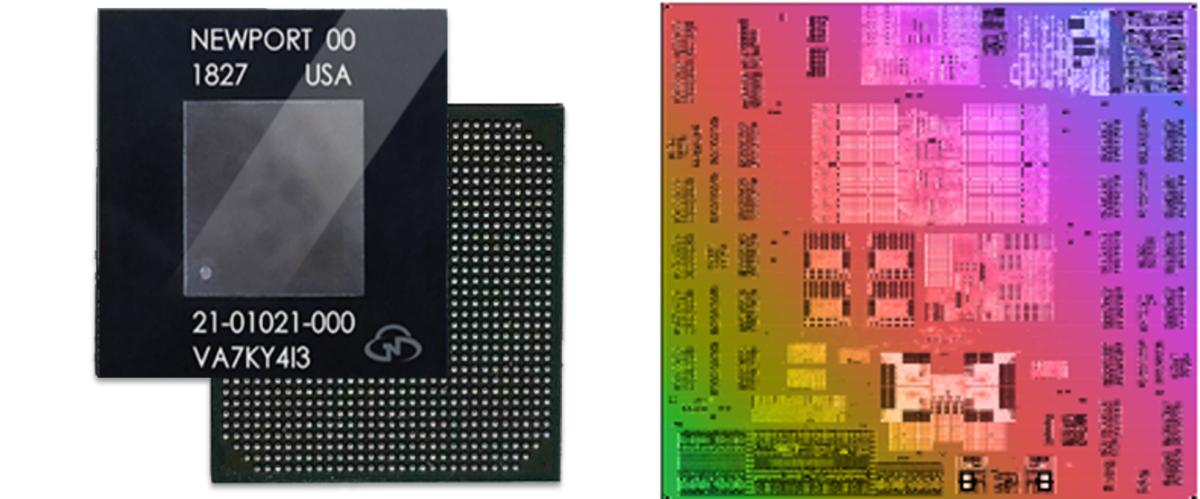

Systems-on-chip (SoCs) built with general-purpose CPUs, by contrast, can run standard, existing application programs, lowering the hurdles to adoption. NGD Systems, for instance, uses a quad-core Arm Cortex-A53 processor in its Newport computational storage system and plans to use higher-performance Cortex-A processors in future systems. ScaleFlux, meanwhile, has announced that it will use two quad-core Arm Cortex-A53 processors in its next-generation product.

Specialized processor cores, such as neural processing units (NPUs) for AI tasks or GPUs, will also be added to computational storage drives over time. As we’ve seen with smartphones and servers, specialized processors deliver gains in both raw performance and performance per watt.

How do computational storage drives differ from data processing units (DPUs)?

Data processing units (DPUs) perform networking, storage and security functions inside datacenters, so some overlap with computational storage exists. The difference comes in speed, performance, and application, explains Scott Shadley, vice president of marketing at NGD. A DPU might be deployed to handle all of the networking protocols for a set of cloud servers in real time. A single DPU might contain several Arm Neoverse processors along with server-class GPUs and other devices.

CSDs, by contrast, put greater emphasis on efficiency because they have to fit within the power and volumetric budget allotted to a storage slot in a server. If the whole drive must work within an 8-watt ceiling, like that of an M.2 form factor, the computational portion may be limited to two watts or less, depending on the design.

Where will computational storage be used?

Data centers will be among the first markets where computational storage drives perform compression, deduplication, coding and other tasks.
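Deduplication and compression are typical of the drive-side tasks listed above. The following is a minimal Python sketch, assuming fixed-size 4 KiB chunks, SHA-256 content fingerprints and zlib compression; real CSDs may use different chunking, hashing and codecs, and the `DedupStore` class is hypothetical.

```python
import hashlib
import zlib
from typing import Dict, List

CHUNK_SIZE = 4096  # assumed fixed chunk size


class DedupStore:
    """Toy content-addressed store: each unique chunk is compressed and kept once."""

    def __init__(self) -> None:
        self.chunks: Dict[str, bytes] = {}  # fingerprint -> compressed chunk
        self.bytes_in = 0                   # logical bytes written

    def write(self, data: bytes) -> List[str]:
        # Split into fixed-size chunks, fingerprint each, and store only
        # chunks not seen before. Returns the recipe needed to rebuild `data`.
        recipe = []
        for off in range(0, len(data), CHUNK_SIZE):
            chunk = data[off:off + CHUNK_SIZE]
            fp = hashlib.sha256(chunk).hexdigest()
            if fp not in self.chunks:
                self.chunks[fp] = zlib.compress(chunk)
            recipe.append(fp)
        self.bytes_in += len(data)
        return recipe

    def read(self, recipe: List[str]) -> bytes:
        # Decompress and concatenate chunks in recipe order.
        return b"".join(zlib.decompress(self.chunks[fp]) for fp in recipe)

    def stored_bytes(self) -> int:
        # Physical bytes held after deduplication and compression.
        return sum(len(c) for c in self.chunks.values())


store = DedupStore()
block = bytes(range(256)) * 16  # one 4 KiB chunk
payload = block * 8             # 32 KiB made of eight identical chunks
recipe = store.write(payload)
assert store.read(recipe) == payload
print(len(store.chunks), store.bytes_in, store.stored_bytes())  # 1 unique chunk for 32768 logical bytes
```

Doing this on the drive means only the unique, compressed chunks are ever written to flash, and the host never has to read the data back to deduplicate it.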

Expect broad adoption at the edge where minimal power, space and cost are absolutely essential. Consider a content delivery network. An edge ‘datacenter’ loaded with the most frequently watched shows or a highly anticipated new show would ordinarily consist of one or more primary servers linked to several local SSDs. With computational storage, most, if not all, of the discrete servers could be eliminated: the local datacenter becomes a stack of CSDs that fits into a mailbox-sized container, a shift that lowers capex, opex, power consumption and space without compromising performance.

Smart security cameras are another potentially explosive market. An estimated 1 billion public security and surveillance cameras have already been deployed, and the market is expected to grow by 18 percent per year. At 30 frames per second, a single 1080p camera can generate 2GB per hour, or 17.5TB per year. Across 1 billion cameras, that’s over 17 zettabytes per year, more than 8,000x the data posted to Facebook in a year. Running ML tasks locally on computational storage can dramatically reduce network traffic.
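The per-camera and fleet-wide figures follow from simple unit conversion. This Python sketch assumes decimal units (1 TB = 1,000 GB, 1 ZB = 10^9 TB) and a camera recording around the clock; the 2GB-per-hour rate and the 1 billion camera count are taken from the text.

```python
GB_PER_HOUR = 2                  # one 1080p camera at 30 fps (figure from the text)
HOURS_PER_YEAR = 24 * 365        # continuous recording, ignoring leap years
CAMERAS = 1_000_000_000          # deployed camera estimate (figure from the text)

# Decimal units: 1 TB = 1,000 GB and 1 ZB = 1,000,000,000 TB.
tb_per_camera_year = GB_PER_HOUR * HOURS_PER_YEAR / 1_000
zb_fleet_year = tb_per_camera_year * CAMERAS / 1_000_000_000

print(f"{tb_per_camera_year:.2f} TB per camera per year")   # 17.52 TB per camera per year
print(f"{zb_fleet_year:.2f} ZB per year across the fleet")  # 17.52 ZB per year across the fleet
```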

Bandwidth-constrained platforms such as aircraft or subterranean mining vehicles are also prime targets. Over 8,000 aircraft carrying over 1.2 million people are in the air at any given time. Machine learning applications for predictive maintenance or overall aircraft health monitoring can run efficiently during the flight on computational storage, increasing safety, reducing turnaround time and ultimately cutting costly and irritating delays.
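As a hedged illustration of the kind of lightweight in-flight analysis that could run on the drive, the sketch below flags sensor readings that deviate sharply from a rolling baseline, so only the flagged readings need to cross a constrained link. The rolling z-score method, window size, threshold and sample trace are assumptions chosen for simplicity, not a description of any deployed predictive-maintenance system.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Deque, Iterable, List, Tuple


def flag_anomalies(readings: Iterable[float], window: int = 20,
                   z_limit: float = 4.0) -> List[Tuple[int, float]]:
    # Keep a sliding window of recent readings; a new reading is flagged
    # when it lies more than `z_limit` standard deviations from the
    # window mean. Only the flagged (index, value) pairs are returned.
    recent: Deque[float] = deque(maxlen=window)
    flagged = []
    for i, x in enumerate(readings):
        if len(recent) == window:
            snapshot = list(recent)
            mu = mean(snapshot)
            sigma = pstdev(snapshot)
            if sigma > 0 and abs(x - mu) / sigma > z_limit:
                flagged.append((i, x))
        recent.append(x)
    return flagged


# Hypothetical vibration trace: small periodic oscillation with one spike at index 50.
trace = [1.0 + 0.01 * (i % 5) for i in range(100)]
trace[50] = 5.0
print(flag_anomalies(trace))  # [(50, 5.0)]
```

Instead of streaming 100 readings off the aircraft, the drive would report a single flagged event.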

What are the challenges?

At this stage, the main challenge is finalizing standards for compatibility and scalability. The Storage Networking Industry Association (SNIA) recently released its 0.8 specification, with a full specification likely coming in 2022. Cloud providers, among others, have been experimenting with computational drives and will soon start to shift to commercial deployment.

If history is any guide, cloud providers will roll out computational storage as an option for specific services or instances with adoption spreading over time.

Will we see computational storage inside physical hard drives?

Computational storage is currently focused on SSDs. However, the benefits of lower latency and lower energy consumption apply equally to hard disk drive (HDD)-based systems, where the advantages could be even greater. Hybrid drives that combine HDD and SSD technology could also benefit.


Any re-use permitted for informational and non-commercial or personal use only.