Techniques, Features and Trends to Optimize the Mobile Gaming Experience

From more realistic gaming scenes and AI-based characters to power savings for longer gameplay, developers are delivering truly immersive experiences that are transforming mobile gaming across all tiers of devices, from entry-level to flagship. However, there are physical limits to these levels of performance. Clever graphics techniques, technologies, tools and features are needed to reduce power and energy consumption and avoid overheating, so that both the mobile device and the overall gaming experience perform well.

In the run-up to the 2024 Game Developers Conference (GDC), we explore the latest and greatest mobile graphics techniques, technologies, tools and features that are redefining the mobile gaming landscape and helping developers deliver optimum gaming experiences.

How does ray tracing create more immersive mobile games?

Ray tracing is a computer graphics technique that generates realistic lighting and shadows by modeling the paths that individual rays of light take around the scene. This helps to deliver more realistic, more immersive gaming experiences.

Ray tracing can be broken down into two different types: ray query and ray pipeline. Ray query is an explicit model, which means that all the rays are handled within the GPU shader. Ray pipeline differs in that the ray tracing steps are split into discrete stages. Across the current mobile ecosystem, ray query has greater adoption than ray pipeline and this is likely to remain the same for the next few years.
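The difference between the two models can be sketched in a few lines of Python. This is a conceptual illustration only, not GPU shader code: the sphere scene, the `hit_distance` helper and the callback names are all assumptions made for the example. In the ray query style, one function owns the traversal and decides what to do with the result inline. In the ray pipeline style, traversal is handled for you and the outcome is routed to separate hit and miss stages.

```python
import math

def hit_distance(origin, direction, sphere):
    """Distance along a normalised ray to the near surface of a sphere, or None.

    Simplified for illustration: rays starting inside a sphere are treated as misses.
    """
    (cx, cy, cz), radius = sphere
    ox, oy, oz = origin[0] - cx, origin[1] - cy, origin[2] - cz
    dx, dy, dz = direction
    b = ox * dx + oy * dy + oz * dz
    c = ox * ox + oy * oy + oz * oz - radius * radius
    disc = b * b - c
    if disc < 0:
        return None
    t = -b - math.sqrt(disc)
    return t if t > 1e-4 else None

def closest_hit_distance(origin, direction, spheres):
    """Nearest intersection distance across the whole scene, or None."""
    hits = [hit_distance(origin, direction, s) for s in spheres]
    hits = [t for t in hits if t is not None]
    return min(hits) if hits else None

def shade_ray_query(origin, direction, spheres):
    """Ray query style: the shader traces the ray and handles the result inline."""
    t = closest_hit_distance(origin, direction, spheres)
    return "shadow" if t is not None else "lit"

def trace_ray_pipeline(origin, direction, spheres, closest_hit, miss):
    """Ray pipeline style: traversal is separate; results go to discrete stages."""
    t = closest_hit_distance(origin, direction, spheres)
    return closest_hit(t) if t is not None else miss()

# Hypothetical scene: one unit sphere five units along the z axis.
scene = [((0.0, 0.0, 5.0), 1.0)]
start = (0.0, 0.0, 0.0)

print(shade_ray_query(start, (0.0, 0.0, 1.0), scene))
print(trace_ray_pipeline(start, (0.0, 1.0, 0.0), scene,
                         closest_hit=lambda t: "shadow",
                         miss=lambda: "lit"))
```

Both styles produce the same answer for the same ray. What changes is where the decision logic lives, which is why the ray query model is often simpler to drop into an existing shader.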

The challenge with ray tracing is that it can use significant power, energy, and area across the mobile system-on-chip (SoC). However, ray tracing on the Immortalis-G715 GPU (the first Arm GPU to offer hardware-accelerated ray tracing support) only uses four percent of the GPU shader core area, while delivering a performance improvement of more than 300 percent through the built-in hardware acceleration.

The launch of Immortalis-G715 in 2022 provided the foundation for the developer ecosystem to start exploring and integrating ray tracing techniques into their gaming content.

Developers can learn more about how to achieve successful mobile games with ray tracing in this blog. Also at GDC 2024, Iago Calvo Lista, a Graphics Software Engineer at Arm, will be presenting a deep-dive talk on how game developers can create more realistic mobile graphics with optimized ray tracing.

Can Variable Rate Shading enhance gaming performance and power efficiency?

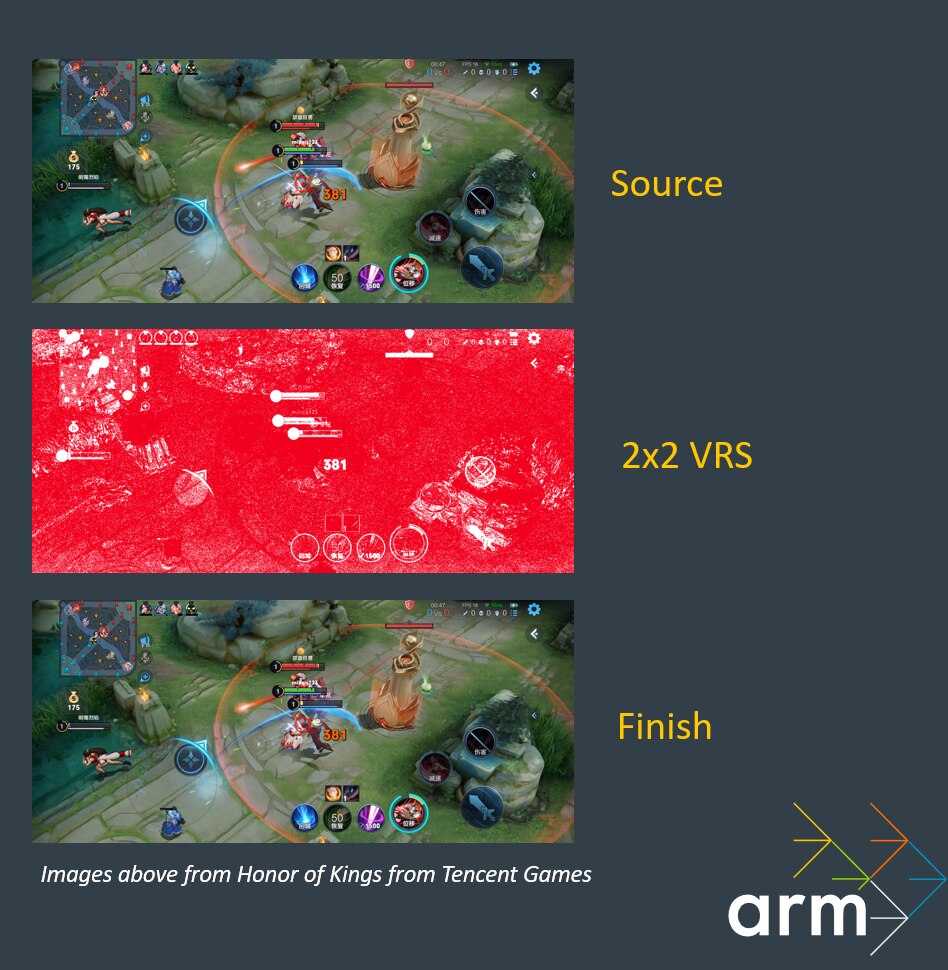

Variable Rate Shading (VRS) takes a scene and focuses the rendering on the parts that need it at a fine pixel granularity. In a game, this would typically be where the game action takes place. Then, areas of the scene that require less focus (such as background scenery) are rendered with a coarser pixel granularity.

The gaming scene still maintains its perceived visual quality, but as less rendering is being applied across the full scene, there are significant energy savings. This means longer game-time for players and better overall performance, with a better sustained framerate and less heat generated. When enabling VRS on gaming content, we have seen improvements of up to 40 percent on frames per second (FPS).
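To get a feel for where the savings come from, the sketch below counts fragment shader invocations for a frame split into tiles, with a coarser shading rate applied further from the screen centre. Everything here is an assumption for illustration: the 16-pixel tile size, the distance thresholds and the centre-weighted policy are hypothetical, and real games choose rates from their own content.

```python
from math import ceil

TILE = 16  # hypothetical tile size in pixels

def tile_invocations(rate, tile=TILE):
    """Fragment shader invocations for one tile at a given (x, y) shading rate."""
    rate_x, rate_y = rate
    return ceil(tile / rate_x) * ceil(tile / rate_y)

def pick_rate(tx, ty, tiles_x, tiles_y):
    """Hypothetical policy: full rate near the screen centre, coarser toward the edges."""
    dx = abs((tx + 0.5) / tiles_x - 0.5) * 2
    dy = abs((ty + 0.5) / tiles_y - 0.5) * 2
    distance = max(dx, dy)  # 0 at the centre, approaching 1 at the edge
    if distance < 0.5:
        return (1, 1)  # one invocation per pixel
    if distance < 0.8:
        return (2, 2)  # one invocation per 2x2 pixel block
    return (4, 4)      # one invocation per 4x4 pixel block

def frame_invocations(tiles_x, tiles_y, use_vrs):
    """Total fragment shader invocations for a frame of tiles_x by tiles_y tiles."""
    total = 0
    for ty in range(tiles_y):
        for tx in range(tiles_x):
            rate = pick_rate(tx, ty, tiles_x, tiles_y) if use_vrs else (1, 1)
            total += tile_invocations(rate)
    return total

# 120 x 68 tiles of 16 pixels is roughly a 1080p frame.
full = frame_invocations(120, 68, use_vrs=False)
vrs = frame_invocations(120, 68, use_vrs=True)
print(f"fragment invocations with VRS: {vrs / full:.0%} of full rate")
```

Fewer fragment invocations do not translate one-to-one into frame rate, since geometry, bandwidth and CPU work are unaffected, so treat this as an estimate of shading work saved rather than a prediction of FPS.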

Arm first integrated VRS into Immortalis-G715 and Mali-G715 GPUs, with this continuing through to the latest generation of GPUs – Immortalis-G720 and Mali-G720 GPUs – where we added greater flexibility and higher performing shading rates for VRS.

More detailed information about VRS and how to apply this technique can be found in this blog.

What is the role of deferred vertex shading in complex mobile gaming scenes?

More complex mobile gaming scenes require work on the graphics pipeline, with Arm introducing deferred vertex shading (DVS) to improve geometry dataflow in Arm’s latest GPUs built on the 5th Gen GPU architecture. Through DVS, performance can be scaled to larger core counts, enabling the mobile ecosystem to reach higher performance points. DVS also helps to maintain a consistent framerate across the most complex gaming scenes, while future-proofing for next-generation geometry content.

The introduction of DVS has led to performance benefits across selected scenes for popular gaming content. These include 33 percent less bandwidth used on Genshin Impact and 26 percent less bandwidth used on Fortnite, both hugely popular games worldwide that feature across different platforms: PC, console and mobile.

What does Arm Performance Studio offer to game developers?

Arm maintains consistent ecosystem support for game developers through a range of GPU tools and resources. The newly branded Arm Performance Studio (previously known as Arm Mobile Studio), which is free to download, is one such tool. It provides game developers with a range of profiling, performance analysis and debugging tools, so they can optimize the performance and efficiency of their gaming applications.

Learn more about the name change to Arm Performance Studio and a host of new features in this blog.

How can Arm Frame Advisor improve game development?

Arm Frame Advisor is a frame-based profiler for games supporting OpenGL ES 3.2 and Vulkan 1.1, which is now publicly available for game developers. It uses a layer driver to capture all API calls in a frame, with the analysis engine providing contextual feedback to game developers. This feedback identifies opportunities for developers to increase the performance of their applications by providing:

- Visualizations of the render graph and frame data flow;

- Information about best practice violations; and

- Information about budget violations, such as exceeding a GPU cycle count or GPU power budget.

More detailed information about Arm Frame Advisor following its first release in December 2023 can be found in this blog. Also, at GDC 2024, we will be highlighting how game developers can “achieve mobile GPU mastery” through a Frame Advisor demo on the Arm booth.

What is adaptive performance and how does it benefit game developers?

Adaptive performance covers various performance frameworks, including those from Samsung and Android. Unity has created integrations for both frameworks, so developers can use them to fine-tune their games and improve the overall performance.

Developers can work with these frameworks to simulate events that replicate changes in the device state that relate to real-world performance on mobile devices. For example, there will be simulated events around thermal throttling to identify any potential performance issues that can then be improved upon by the developer.

When a developer adopts a performance framework, they are empowered to take steps within their game that can respond to a change in device state, such as thermal throttling, with minimal degradation of the player experience on the affected device. Because it is practically impossible to test all the devices in the diverse Android ecosystem, this gives the developer the means to react to conditions as they arise. Arm is therefore partnering with Google and Unity on adaptive performance to ensure platforms provide trustworthy and meaningful feedback which the game engine can then use to optimize game performance.

From a developer perspective, adaptive performance frameworks offer powerful tools to maintain the overall player experience. Developers can design and implement features in a way that gracefully degrades and test them with simulated events. Room for improvement remains, as these simulated events do not trigger the operating system changes that occur in the real world. This means that it remains difficult for developers to accurately evaluate the impact of a performance framework until their games are deployed to end users.
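The pattern of graceful degradation tested with simulated events can be sketched as follows. This is a minimal illustration and does not use the Unity, Samsung or Android APIs: the `QualityController` class, the quality tier names and the event strings are all hypothetical.

```python
QUALITY_LEVELS = ["low", "medium", "high"]  # hypothetical quality tiers

class QualityController:
    """Steps rendering quality down on thermal warnings and back up when nominal."""

    def __init__(self):
        self.level = len(QUALITY_LEVELS) - 1  # start at the highest tier

    def on_thermal_event(self, status):
        # Hypothetical event names; a real framework defines its own signals.
        if status == "throttling_imminent" and self.level > 0:
            self.level -= 1
        elif status == "nominal" and self.level < len(QUALITY_LEVELS) - 1:
            self.level += 1
        return QUALITY_LEVELS[self.level]

def simulate(events):
    """Feed a scripted sequence of events to a fresh controller and record each tier."""
    controller = QualityController()
    return [controller.on_thermal_event(event) for event in events]

# Simulated session: the device heats up three times, then recovers.
timeline = simulate([
    "throttling_imminent",
    "throttling_imminent",
    "throttling_imminent",
    "nominal",
])
print(timeline)  # ['medium', 'low', 'low', 'medium']
```

A scripted sequence like this checks that the game's response logic behaves as designed, but as noted above it cannot show how a real device and operating system will behave under load.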

In a GDC 2024 talk at the Google Developer Summit, Arm’s Syed Farhan Hassan will be presenting alongside Google and Samsung on enhancing game performance through Vulkan and Android adaptability technology.

What advantages does the Unreal Engine 5 desktop renderer bring to gaming?

The Unreal Engine 5 desktop renderer is designed to provide high-quality, real-time rendering for PC games and applications, including advanced lighting and texture features. This pushes the boundaries of graphical fidelity and realism in real-time rendering for games.

Arm has been working with Epic Games to enable the Unreal Engine 5 desktop renderer on Android. For developers, this ensures that desktop quality rendering and graphics are delivered by Immortalis GPUs on mobile devices. Showcasing this work, Arm has created the Steel Arms demo – which will be presented on the Arm booths at Mobile World Congress (MWC) 2024 and GDC 2024 – to test the developer experience with our GPU products and demonstrate how the renderer can enable high-quality graphics. These include rich bloom effects, high-quality physically based shading, vivid blur effects and real-time reflections. We are also currently working on an Unreal Engine integration of an adaptive performance framework in Steel Arms.

Also, at GDC 2024, Patrick Wang, a Game Developer at Arm, will be speaking about how Arm seamlessly integrated Lumen, a high-level lighting pipeline, to unlock lighting optimizations via ray tracing for Steel Arms.

How is Sentis driving AI innovation in game development?

Unity’s Sentis is a framework that aims to bring rapidly evolving AI model innovation into game development. It allows game developers to import and then run third-party AI models – which can be customized – across all Unity-supported mobile devices. Sentis focuses on the following AI-based use cases: object identification; customizable AI opponents; handwriting detection; depth estimation; speech recognition; and smart non-player characters (NPCs).

Unity’s ML-Agents is among the AI-based use cases supported by Sentis. It allows game developers to train intelligent agents within games and simulations through a combination of deep reinforcement learning and imitation learning. This enables developers to create more compelling gameplay and an enhanced game experience. Arm is working with Unity to ensure that power across our CPUs and GPUs is sufficiently balanced during gameplay that uses ML-Agents to deliver a consistent gaming experience.

At GDC 2024, Yusuf Duman, a Software Engineer at Arm, will explain how Unity Sentis supports INT8 quantized networks for AI and ML optimizations in games.
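For readers unfamiliar with the idea, INT8 quantization stores network weights as 8-bit integers plus a scale factor instead of 32-bit floats, trading a small amount of precision for lower memory and bandwidth use. The sketch below shows generic symmetric per-tensor quantization in plain Python. It is an assumption-laden illustration of the concept, not a description of how Sentis implements it, and the function names are hypothetical.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float values from the integers and the scale."""
    return [q * scale for q in quantized]

# Hypothetical weights from a small layer.
weights = [0.5, -1.0, 0.25, 0.0]
q_weights, scale = quantize_int8(weights)
restored = dequantize(q_weights, scale)

# Each restored value is within half a quantization step of the original.
worst_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q_weights, worst_error <= scale / 2 + 1e-9)
```

Each weight now occupies one byte instead of four, and the rounding error per weight is bounded by half the scale factor.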

Build better games, faster on Arm

With this broad range of mobile graphics techniques, technologies and features, developers can optimize their games for more stunning and immersive visual experiences, more captivating graphics and lower power consumption for longer gameplay. This is all happening on Arm-based mobile devices, with game developers able to leverage the power and efficiency of Arm-based processors to create better games that run smoothly on any device. Moreover, the wide range of tools, software and developer resources allows developers to bring their mobile games to market faster.

We are providing the technologies, tips, resources and insights, so the world’s developers can continue to run their games on Arm, now and in the future.

Learn more about how AI is supercharging mobile graphics and gaming in the guide below.

Click to read AI on Mobile