Seeing the Potential of Smart Vision

Collapsing bee populations, urban traffic headaches and automobile safety issues are three very different problems. Yet a single technology can help address all of them: computer vision.

Beekeepers are on the front lines of one of the most worrisome environmental problems of our time: the death of a third of the bee population every year, due to factors such as pesticides, climate change and disease. Checking and nurturing hives is a painstaking, manual practice that is centuries old. But Beewise, an Israeli startup, has created the world’s first autonomous beehive with a simple yet vital mission: save the bees and keep their population stable over time.

The BeeHome relies on robotic brood-box management and computer-vision-based monitoring. These capabilities aren’t meant to replace beekeepers but to give them tools to help their bees survive.

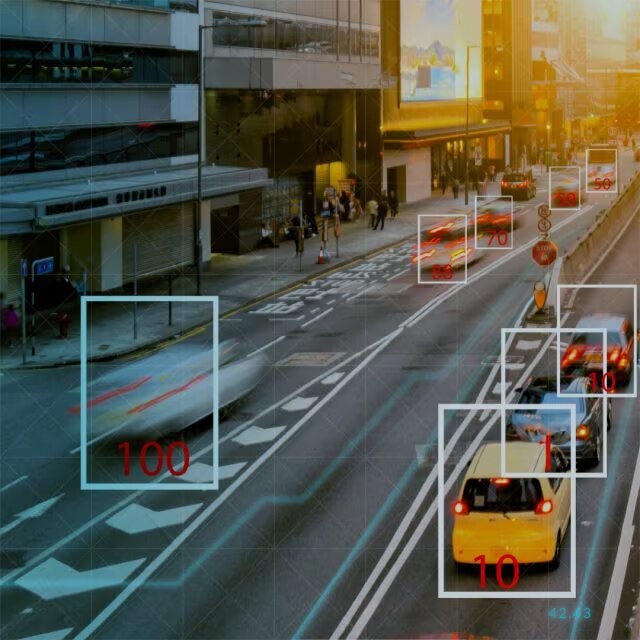

In South Korea, Pyeongtaek City, home to about half a million people, grapples with traffic congestion and pedestrian fatalities. As part of a citywide “smart city” overhaul, experts have deployed Nota.ai’s NetsPresso platform, an automatic AI model compression solution, in vision devices to create an intelligent transportation system.

By implementing real-time traffic signal control, city planners tripled average traffic speeds during rush hours and raised them by 25% during normal hours. That reduced the city’s congestion-related costs (and likely its pollution) and cut the time drivers spend stuck in traffic.

Austrian startup emotion3D is pursuing “Vision Zero,” a world with no fatalities or severe injuries in road accidents. Its technology has two key components: Driver Monitoring (DMS) and Occupant Monitoring (OMS). The software detects driver drowsiness, distraction and medical emergencies, capabilities that are crucial to intelligent vehicle safety. Its ML algorithms run efficiently on low-power devices to respond in real time, providing a foundation for safer cars and better in-vehicle experiences.

Eyes: Windows to … transformative technologies

Why are these and countless other innovative companies looking to vision to transform the world around us? It’s simple: vision is the most important and complex sensory modality humans possess. By a commonly cited estimate, about 80% of what we sense comes in through our eyes, and we rely on sight for information about our environment more than on hearing or smell.

Interest in vision technology is accelerating rapidly, in part because it can be deployed in a seemingly limitless number of settings, from transportation, homes and businesses to hospitals, factory floors and sporting arenas.

Among the applications:

- Cameras for image recognition and object detection.

- Augmented reality (AR) and virtual reality (VR) solutions that leverage computer vision to overlay digital information on the real world or create immersive virtual environments.

- Medical imaging capture and analysis, where artificial intelligence can help make diagnoses more accurate.

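- Autonomous systems, such as self-driving vehicles, drones and robots, that rely on vision to perceive and navigate their surroundings.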

- Gesture and emotion recognition: Computer vision can interpret human gestures and emotions by analyzing facial expressions and body movements.

- Security and surveillance enhancements with computer vision systems that can detect and track suspicious activities or individuals in real time.

- Quality control and manufacturing, leveraging machine vision to inspect products for defects, sort items, and automate quality control processes.

Blurred visions?

The sky’s the limit, right? Not quite. As with any exciting, emerging technology, there are challenges.

Consider bandwidth and data management. The more smart-vision devices in the field, the more data is captured. Combined with increasingly capable sensors, this creates a growing bandwidth crunch, because sending edge data to the cloud for analysis and returning the results is time-consuming and expensive.
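To put the bandwidth crunch in perspective, the sketch below computes the uncompressed data rate of a single video stream at common resolutions. It assumes 24-bit color (3 bytes per pixel) and 30 frames per second; real cameras compress their output, so deployed streams are far smaller, but the raw figures show how quickly data piles up before any analysis happens. The function name is illustrative only.

```python
def raw_bitrate_gbps(width: int, height: int, bytes_per_pixel: int, fps: int) -> float:
    """Return the uncompressed video data rate in gigabits per second."""
    bits_per_second = width * height * bytes_per_pixel * 8 * fps
    return bits_per_second / 1e9


if __name__ == "__main__":
    # Assumed: 3 bytes per pixel (24-bit RGB), 30 fps, no compression.
    resolutions = {"1080p": (1920, 1080), "4K": (3840, 2160), "8K": (7680, 4320)}
    for name, (w, h) in resolutions.items():
        rate = raw_bitrate_gbps(w, h, bytes_per_pixel=3, fps=30)
        print(f"{name}: {rate:.2f} Gbit/s uncompressed at 30 fps")
```

Under these assumptions the script reports about 1.49, 5.97 and 23.89 Gbit/s respectively, per camera, which is why analyzing frames on the device and sending only results upstream is attractive.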

Another consideration is real-time processing and latency. A sensor-equipped vehicle needs to determine immediately (and therefore locally) whether the object bouncing on the road in front of it is a child’s ball or a tumbleweed. Achieving real-time performance while running complex vision algorithms in resource-constrained environments can be a challenge.
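Why must that decision be made locally? A quick calculation shows how far a car travels while it waits for an answer. The latency values below (20 ms for on-device inference, 200 ms for a cloud round trip) are hypothetical figures chosen only for illustration, not measurements of any particular system.

```python
def distance_during_latency_m(speed_kmh: float, latency_ms: float) -> float:
    """Return how many metres a vehicle travels while waiting latency_ms for a result."""
    speed_m_per_s = speed_kmh * 1000 / 3600  # km/h -> m/s
    return speed_m_per_s * latency_ms / 1000  # ms -> s


if __name__ == "__main__":
    # Hypothetical latency budgets, for illustration only.
    scenarios = {"on-device inference": 20, "cloud round trip": 200}
    for label, latency_ms in scenarios.items():
        d = distance_during_latency_m(speed_kmh=50, latency_ms=latency_ms)
        print(f"{label}: {d:.2f} m travelled at 50 km/h before a decision")
```

At 50 km/h, the assumed cloud round trip costs about 2.78 m of travel versus about 0.28 m for local inference, before the vehicle has even begun to brake.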

Vision systems that rely on network connectivity can be vulnerable to security breaches. Unauthorized access to visual data or manipulation of computer vision algorithms can lead to privacy breaches, identity theft, or other security risks. In one demonstration of such manipulation, MIT students tricked Google’s computer vision into seeing a pair of human skiers as a dog.

There are social and legal issues as well. Computer vision systems that collect and analyze visual data raise privacy concerns, especially when the application is facial recognition. Retail stores are careful to anonymize surveillance of in-store customers as they study floor-traffic patterns to optimize their displays and store layouts. But city surveillance cameras, facial-recognition technology and tracking systems are another matter entirely, one that requires serious policy conversations. Bias and discrimination in computer vision algorithms are also real concerns that can lead to unfair outcomes.

A decade or more ago, the gold rush of innovation around vision that we’re experiencing now would have been inconceivable. Too many vital technologies sat in silos, each difficult or impossible to integrate with the other puzzle pieces needed for a frictionless innovation ecosystem. What’s different today?

Seeing clearly

Arm. More than 30 years after its founding, the company that helped transform the mobile-telephony business offers technologies that power all corners of compute, from smartphones and the IoT to automotive, infrastructure, data centers and much more. The company has expanded into these areas because it has scaled and honed its technology offerings in recent years around a simple premise: innovation will happen everywhere if developers have a choice of technologies, access to open standards and frameworks, and a vibrant ecosystem in which to build their breakthrough products.

Arm sits at the intersection of software and hardware, with a computational footprint in vision alone that is already huge: 90% of smart cameras are powered by Arm-based SoCs.

Arm meets the increasing demand for AI and ML in advanced systems with its 64-bit processor architecture. Smart cameras are adopting higher resolutions such as 4K and 8K to meet growing imaging requirements, and the move to 64-bit processors lets developers design for multiple high-resolution streams while enabling faster frame rates for better object detection and recognition.

Arm helps accelerate system design with tools and software that make developers’ lives easier and their route to market faster. These include Arm IP Explorer, Arm Virtual Hardware, the Arm Smart Vision Configuration Kit and more.

Standards and common tools such as these allow the ecosystem to reuse common parts of the software stack while focusing resources and energy on product differentiation.

Arm’s presence in AI and ML is broad and deep: most AI workloads today touch at least some aspect of Arm technology, and Arm offers a broad array of processing technologies and solutions designed to support the fast-growing number of vision applications at the edge, where low latency and efficient computing resources are crucial to product success.

Security in every application area has also been a vital concern for Arm, since its processor technologies form the foundation of most digital applications. For example, Arm helped found PSA Certified, an open security certification scheme for IoT hardware, software and devices. The certification is implementation- and architecture-agnostic, so it can be applied to any chip, software or device.

A similar industry initiative is Arm SystemReady, a compliance certification program and set of standards that aims to ensure interoperability and compatibility between generic off-the-shelf operating systems and Arm-based devices. With more than 100 certifications, SystemReady is enabling faster development at scale.

If a picture is worth a thousand words, then digital vision technologies hint at countless applications.

Leading companies are embracing an approach to innovation rooted in a broad array of specialized IP that enables heterogeneous systems (with CPUs, GPUs, NPUs and ISPs), as well as standardized, secure software, and innovative approaches to tooling and development for their IoT vision products.

This approach is already accelerating innovation. Not long ago, advanced parking lots mounted a camera above each parking spot to capture occupancy information and tell drivers, as they entered, how many spaces were open on each floor. Today, advanced parking lots achieve a similar outcome with just two cameras, one at the entrance and one at the exit, that use AI- and ML-based number plate recognition, a far more compute-efficient approach.
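The entrance-and-exit approach can be sketched as a simple occupancy counter fed by plate-recognition events. This is a minimal illustration under stated assumptions: the recognition step itself is not shown, each read arrives as a plate string, and the lot is tracked as a whole rather than floor by floor. The class and method names (`ParkingLot`, `on_entry`, `on_exit`) are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ParkingLot:
    """Hypothetical occupancy tracker fed by entrance/exit plate-recognition events."""
    capacity: int
    parked: set[str] = field(default_factory=set)

    def on_entry(self, plate: str) -> None:
        # A set makes a duplicate read of the same plate harmless.
        self.parked.add(plate)

    def on_exit(self, plate: str) -> None:
        # discard() ignores plates that were never recorded (e.g., a missed entry read).
        self.parked.discard(plate)

    @property
    def free_spaces(self) -> int:
        return max(self.capacity - len(self.parked), 0)


if __name__ == "__main__":
    lot = ParkingLot(capacity=3)
    lot.on_entry("AB-123")
    lot.on_entry("CD-456")
    lot.on_entry("CD-456")  # same car recognized twice at the entrance
    lot.on_exit("AB-123")
    print(lot.free_spaces)  # 2
```

Keying on plates rather than incrementing a bare counter is a deliberate choice in this sketch: repeated reads of the same car do not inflate occupancy, although a misread plate would still cause the count to drift.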

Entirely new business models are popping up. For example, video surveillance-as-a-service (VSaaS) provides video recording, storage, remote management and cybersecurity across a mix of on-premises cameras and cloud-based video-management systems. It allows retailers, for instance, to unlock value from the video data they capture so they can better serve customers and optimize business processes.

The future of vision is here and it’s starting to transform the world. That future is built on Arm.

Any re-use permitted for informational and non-commercial or personal use only.