20 Arm tech predictions for 2026 and beyond

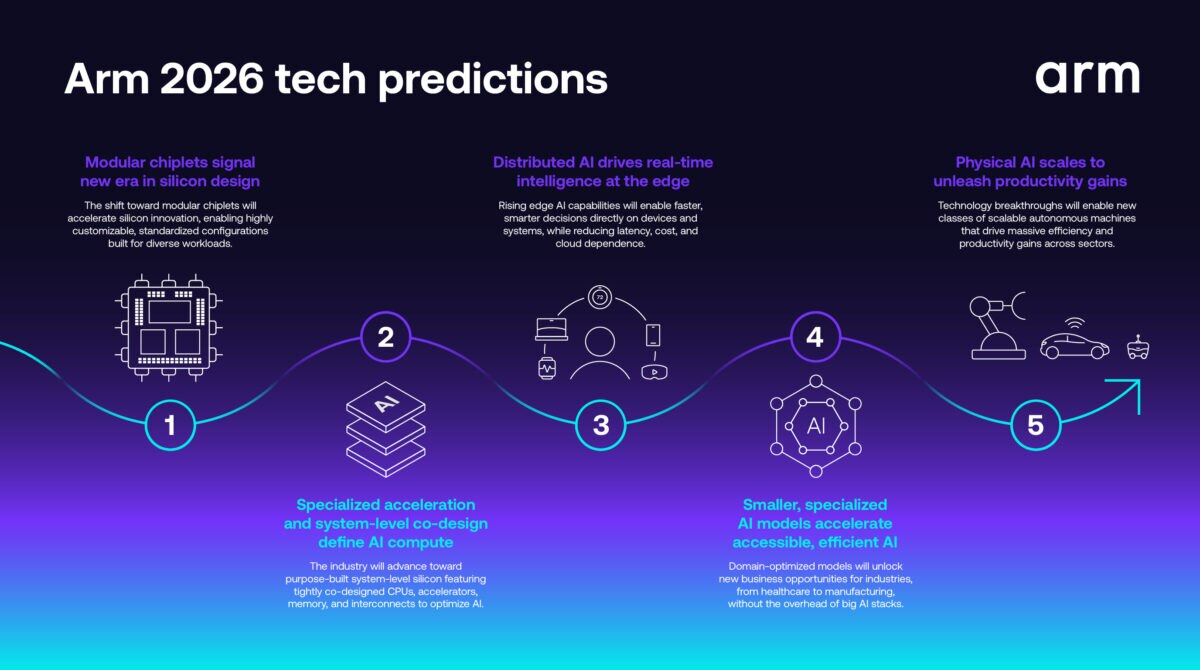

The world’s relationship with compute is changing — from centralized clouds to distributed intelligence that spans every device, surface, and system. In 2026, we will enter a new era of intelligent computing — where compute is increasingly modular, power-efficient, and seamlessly connected across cloud, physical and edge AI environments.

With this in mind, here are 20 Arm tech predictions that we believe could shape the next wave of innovation in 2026.

Silicon innovation

1. Modular chiplets redefine silicon design

As the industry pushes the limits of what’s possible in silicon, the shift from monolithic chips to modular, chiplet-based designs will accelerate. By separating compute, memory, and I/O into reusable building blocks, designers can mix process nodes, reduce cost, and scale faster. The increasing focus on modularity will mark a shift from “bigger chips” to “smarter systems,” giving silicon teams the freedom to rapidly tailor systems-on-chip (SoCs) for diverse workloads. This will fuel the ongoing rise of customizable chiplets: highly configurable blocks that combine general-purpose compute with domain-specific accelerators, memory tiles, or specialized AI engines. With these blocks, silicon teams can build differentiated products without starting from scratch, which dramatically shortens design cycles and lowers barriers to innovation. Also expect industry-wide standardization to evolve, with emerging open standards enabling chiplets from different vendors to be combined reliably and securely. This will lower integration risk, expand the supply base, and unlock a marketplace of interoperable components rather than tightly coupled, single-vendor systems.

2. Smarter scaling through advanced materials and 3D integration

Silicon innovation in 2026 is likely to come from new materials and advanced integration techniques, such as 3D stacking, chiplet integration, and advanced packaging, rather than from smaller transistors. These techniques enable higher density and efficiency in high-performance chips. This “More-than-Moore” evolution is about vertical innovation (layering functionality, improving heat dissipation, and boosting compute per watt) rather than lateral scaling. The approach will become essential to sustaining progress in high-performance, energy-efficient computing, while laying the foundation for more capable AI systems, denser data center infrastructure, and increasingly intelligent edge devices.

3. Secure-by-design silicon becomes non-negotiable

As AI systems become more autonomous and deeply embedded in critical infrastructure, secure-by-design silicon will shift from a commercial differentiator to a universal requirement. Adversaries are already probing AI systems for exploitable patterns and targeting the hardware itself, and this rising threat makes built-in, hardware-level trust essential. Technologies like Arm Memory Tagging Extension (MTE), hardware root-of-trust, and confidential compute enclaves will be baseline expectations, not optional add-ons. Moreover, people and enterprises increasingly store their most valuable digital assets inside AI systems, from proprietary datasets and business logic to user credentials, personal histories, and financial information. Protecting these assets will require a variety of security measures at the silicon level, including cryptographically enforced isolation, memory integrity, and runtime verification.
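To make the idea of hardware-level memory integrity more concrete, the following is a toy software model of the tag-checking principle behind memory tagging. It assumes the commonly described MTE scheme of a 4-bit tag carried in the upper bits of a pointer and a matching tag on each 16-byte granule of memory. The `TaggedMemory` class and its methods are hypothetical names for illustration only; this is a sketch of the concept, not how the hardware or any Arm API is implemented.

```python
GRANULE = 16      # assumption: tags apply to 16-byte granules of memory
TAG_SHIFT = 56    # assumption: the 4-bit tag sits in pointer bits 59:56
TAG_MASK = 0xF


class TaggedMemory:
    """Toy model of tag-checked memory (illustrative only)."""

    def __init__(self, size: int) -> None:
        self.data = bytearray(size)
        self.tags = [0] * (size // GRANULE)  # one tag per granule

    def allocate(self, addr: int, length: int, tag: int) -> int:
        """Tag the granules covering [addr, addr + length) and return a tagged pointer."""
        first = addr // GRANULE
        last = (addr + length - 1) // GRANULE
        for granule in range(first, last + 1):
            self.tags[granule] = tag & TAG_MASK
        return ((tag & TAG_MASK) << TAG_SHIFT) | addr

    def load(self, pointer: int) -> int:
        """Read one byte; raise if the pointer tag and the memory tag differ."""
        addr = pointer & ((1 << TAG_SHIFT) - 1)
        pointer_tag = (pointer >> TAG_SHIFT) & TAG_MASK
        if pointer_tag != self.tags[addr // GRANULE]:
            raise MemoryError(f"tag mismatch at address {addr:#x}")
        return self.data[addr]
```

In this model, a pointer returned by `allocate(0, 16, 0x3)` can read bytes 0 to 15, but reading one granule past the allocation, or reading after the region has been re-tagged for another owner, raises an error. That is the software analogue of catching an out-of-bounds access or a use-after-free at the moment it happens.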

4. Specialized acceleration and system-level co-design will define AI compute and drive the rise of converged AI data centers

The rise of domain-specific acceleration is redefining silicon performance, but not through a split between general-purpose compute and accelerators. Instead, the industry is moving towards purpose-built silicon that is co-designed with its software stack at the system level and then optimized for particular AI frameworks, data types, and workloads. Major cloud providers like AWS (Graviton), Google Cloud (Axion), and Microsoft Azure (Cobalt) are leading this shift. They demonstrate that tightly integrated platforms, where purpose-built CPUs, accelerators, memory, and interconnects are engineered together from the ground up, are central to scalable, efficient AI that is accessible to developers. These developments are accelerating the next phase of infrastructure, the converged AI data center, which maximizes AI compute per unit of area to reduce the power consumption and related costs of running AI.

AI everywhere, from cloud to physical to edge

5. Distributed AI compute pushes more intelligence to the edge

While the cloud will remain vital for large-scale models, AI inference processing will continue to migrate out of the cloud and into devices, leading to quicker responses and decision-making. In 2026, edge AI will accelerate from basic analytics to real-time inference and adaptation on edge devices and systems, incorporating more complex models thanks to algorithmic advancements, model quantization, and specialized silicon. On-site inference and local learning will become standard, reducing latency, cost, and cloud dependency, while redefining these edge devices and systems as self-sufficient compute nodes.

6. Cloud, edge, and physical AI begin to converge

In 2026, the long-standing debate between cloud vs. edge will start to fade as AI systems increasingly operate as a coordinated continuum focused on collaborative intelligence. Rather than treating cloud, edge, and physical intelligence as separate domains, companies will start designing AI tasks and workloads that target the layer they’re best suited for. For example, the cloud provides large-scale training and model refinement, the edge delivers low-latency perception and short-loop decisions close to the data, and physical systems – robots, vehicles, and machines – execute those decisions in real-world environments. This emerging pattern of distributed AI will help to support the deployment of reliable, efficient physical AI systems at scale.

7. World models to transform physical AI development

World models will emerge as a foundational tool for building and validating physical AI systems – from robotics and autonomous machines to molecular discovery engines. Advances in video generation, diffusion-transformer hybrids, and high-fidelity simulation will allow developers and engineers to construct rich virtual environments that accurately mirror real-world physics. These sandboxed “AI testbeds” will let teams train, stress-test, and iterate on physical AI systems before deployment, reducing risk and accelerating development cycles. For sectors like manufacturing, logistics, autonomous mobility, and drug discovery, world-model-driven simulation could become a competitive necessity, and a catalyst for the next wave of physical AI breakthroughs.

8. The ongoing rise of agentic and autonomous AI in physical and edge environments

AI will evolve from assistant to autonomous agent, with systems that perceive, reason, and act with limited oversight. Multi-agent orchestration will emerge more widely across robotics, vehicles, and logistics, while consumer devices will integrate agentic AI natively. In the automotive supply chain, systems will emerge that act as agents, not just tools: logistics optimization systems will continuously monitor supply flows and proactively reorder, re-route, or alert human supervisors rather than waiting for triggers. Meanwhile, factory automation could move toward “supervisory AI” that monitors production, detects anomalies, predicts throughput issues, and initiates corrective action autonomously.

9. Contextual AI will power the next range of user experiences

While generative AI at the edge across text, image, video, and audio will continue to expand, the real breakthrough for on-device AI will be contextual. Contextual AI will enable devices to understand and interpret environments, user intent, and local data to unlock new layers of the user experience, from enhanced displays to proactive safety. Rather than responding to prompts, contextual AI systems will anticipate what users need, tailoring experiences with a level of precision and personalization not previously possible. And because the AI runs on-device, it aligns with the need for greater privacy, lower latency, and better power efficiency.

10. The growth of many purpose-built models rather than one large model

While large language models (LLMs) will continue to be important for training and inference in the cloud, the era of “one giant model” will start giving way to many smaller, specialized ones. These purpose-built models will be optimized for specific domains and will run at the edge. This is already taking shape across multiple vertical sectors, from defect detection and quality inspection in manufacturing to diagnostic assistants and patient monitoring models in healthcare. For smaller enterprises, this offers new opportunities: they don’t need to build bespoke “big AI” stacks, and can instead leverage accessible, domain-specific smaller models and focus on how to deploy these in particular contexts.

11. Small Language Models (SLMs) will become increasingly capable and accessible to enterprises

Breakthroughs in compression, distillation, and architecture design will shrink today’s complex reasoning models by orders of magnitude into small language models (SLMs) without sacrificing their core capabilities. These compact models will deliver near-frontier reasoning performance with dramatically smaller parameter counts, making them easier to deploy at the edge, cheaper to fine-tune, and efficient enough for power-constrained environments. This will be supported by the increasing adoption of ultra-efficient model training and compression techniques, like distillation and quantization, which will become standard across the industry. In fact, expect training efficiency to become the central benchmark for AI models, with metrics like “reasoning per joule” already appearing in product literature and research papers.
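As a rough illustration of how quantization shrinks a model, the sketch below applies symmetric per-tensor quantization to a list of floating-point weights, storing each one as an 8-bit integer plus a single shared scale factor. The function names `quantize_int8` and `dequantize` are hypothetical, and production toolchains use more sophisticated schemes (per-channel scales, calibration data, quantization-aware training), so treat this as a minimal sketch of the principle only.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map float weights onto the integer range [-127, 127] with one shared scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the integers and the scale."""
    return [q * scale for q in quantized]
```

Each weight now needs one byte instead of four (for 32-bit floats), at the cost of a rounding error of at most half the scale per weight. Whether that error is acceptable depends on the model and the task, which is why quantized models are normally re-evaluated before deployment.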

12. Physical AI scales to deliver productivity gains across industries

The next multi-trillion-dollar AI platform will be physical, with intelligence built into a new generation of autonomous machines and robots. Driven by breakthroughs in multimodal models and more efficient training and inference pipelines, physical AI systems will begin to scale. They will deliver new classes of autonomous machines that help to reshape industries, including healthcare, manufacturing, transport, and mining, by delivering massive productivity gains and operating in environments considered too dangerous for humans. Moreover, expect to see compute platforms that serve automation in both vehicles and robotics, with chips built for cars likely to be re-used and adapted for humanoids or factory robots. This will further improve economies of scale and speed up the development of physical AI systems.

Technology markets and devices

13. Hybrid cloud maturity ushers in the next phase of multi-cloud intelligence

In 2026, enterprises won’t just be adopting multi-cloud architectures; they will be advancing into a more mature, intelligence-driven phase of hybrid cloud computing. This will be defined by:

- Greater autonomy in workload placement, with systems dynamically choosing the most efficient or secure execution environment;

- Standardized interoperability that allows data and AI models to move seamlessly between platforms;

- Energy-aware scheduling, where performance-per-watt becomes a first-class driver of deployment decisions; and

- Distributed AI coordination, enabling training, fine-tuning, and inference to happen wherever it makes the most sense across a heterogeneous infrastructure.

This requires a coordinated approach, supported by open standards and power-efficient compute, in which AI models, data pipelines, and applications operate fluidly across multiple clouds, data centers, and edge environments.
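The combination of autonomous workload placement and energy-aware scheduling described above can be sketched as a simple selection rule: filter out execution environments that violate a workload’s hard constraints, then pick the most power-efficient of those that remain. The `Site` and `Workload` types, their fields, and the `place` function are all hypothetical names invented for this illustration; real schedulers weigh many more factors, such as cost, data gravity, and capacity.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Site:
    name: str
    latency_ms: float      # expected round-trip latency to the data source
    perf_per_watt: float   # useful work per watt (arbitrary units, higher is better)
    confidential: bool     # whether the site offers confidential-compute isolation


@dataclass
class Workload:
    name: str
    max_latency_ms: float
    needs_confidential: bool


def place(workload: Workload, sites: List[Site]) -> Optional[Site]:
    """Return the most power-efficient site that meets the hard constraints, if any."""
    eligible = [
        site for site in sites
        if site.latency_ms <= workload.max_latency_ms
        and (site.confidential or not workload.needs_confidential)
    ]
    return max(eligible, key=lambda site: site.perf_per_watt, default=None)
```

Under this rule, a latency-sensitive vision workload would land on a nearby edge site even if a distant cloud region is more efficient, while a latency-tolerant fine-tuning job would go wherever performance-per-watt is highest among sites that satisfy its security requirement. Treating latency and security as filters and efficiency as the ranking key is one possible design choice, not the only one.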

14. From chip to factory floor, AI rewrites the automotive playbook

As AI-enhanced automotive functions become must-haves for the industry, AI will be deeply embedded across the entire automotive supply chain, from the chip in the vehicle to the industrial robots in the factories. In the AI-defined vehicle, expect advanced on-board AI for perception, prediction, driver-assistance and greater autonomy, particularly for advanced driver assistance systems (ADAS) and in-vehicle infotainment (IVI), with silicon being reshaped around these demands. Meanwhile, manufacturing in the automotive industry will be transformed, with factories becoming smarter and more automated with industrial robotics, digital twins and connected systems.

15. Smartphones get smarter as on-device AI becomes the standard

Smartphones in 2026 will continue to lean heavily on AI features, including camera and image recognition, real-time translation, and assistants, all processed entirely on-device. Smartphones will essentially become a digital assistant, camera, and personal manager combined into one. Arm neural technology, which adds dedicated neural accelerators to Arm’s 2026 Mali GPUs, signals a big leap in mobile on-device graphics and AI. By the end of 2026, the latest flagship smartphones will have neural GPU pipelines, enabling features such as 4K gaming at higher frame rates, real-time visual compute, and more advanced on-device AI assistants, all without needing cloud connectivity.

16. Compute boundaries across all edge devices begin to disappear

The long-standing divisions between PC, mobile, IoT, and edge AI will begin to dissolve, giving way to a unified era of device-agnostic on-device intelligence. Rather than thinking in terms of product categories, users and developers will increasingly interact with a consistent computing fabric, one where experiences, performance, and AI capabilities flow seamlessly across different edge device form factors. A driver of this shift will be a new wave of cross-operating system (OS) compatibility and application portability. As OSs evolve to share more underlying frameworks, runtimes, and developer tools, software will increasingly be built once and deployed everywhere, from PCs and smartphones to edge AI and IoT devices.

17. AI personal fabric connects every device

The AI experience will transcend devices to form a cohesive “personal fabric” with intelligence that moves fluidly with users across their digital lives. All edge devices, including phones, wearables, PCs, vehicles, and smart home devices like thermostats, speakers, and security systems, will run AI workloads natively. This will enable them to share context and learnings in real time, anticipate user needs across every screen and sensor, and deliver seamless, personalized experiences. Then, as smaller AI models and heterogeneous compute continue to mature, everyday connected devices in the home will contribute to this intelligent ecosystem. Essentially, personal devices will evolve to become part of a collective, adaptive framework that understands the user and continuously learns from their interactions across environments.

18. AR and VR wearable growth across enterprise settings

AR and VR wearables, including headsets and smart glasses, will find their footing across a wider range of work environments – from logistics and maintenance to healthcare and retail. This will be largely due to advances in lightweight designs and extended battery life, making hands-free computing practical in more settings than ever before. These enterprise deployments will demonstrate the value of subtle, task-specific wearables that deliver information contextually to enhance productivity and safety. As form factors continue to shrink, AI capabilities grow, and connectivity becomes increasingly seamless, AR and VR wearable compute will evolve from novelty to necessity, a quiet but significant step toward a more ambient, assistive future for the workforce.

19. Sensemaking infrastructure reimagines IoT

The Internet of Things will become the “Internet of Intelligence.” Edge IoT devices will move beyond data collection and sensing to “sensemaking” – interpreting, predicting, and acting autonomously. This shift redefines IoT as a living infrastructure for context-aware decision-making, one where localized, low-power compute delivers real-time insights with minimal human intervention, driving a new era of autonomy and energy-efficient innovation.

20. Healthcare wearables go clinical

Next-generation health wearables will evolve from fitness companions into medical-grade diagnostic tools. These wearables will host AI models capable of analyzing biometric data, from heart rate variability to respiratory patterns, locally and in real time. Remote patient monitoring (RPM) is just one example of this wider transformation: a growing ecosystem of connected clinical-grade sensors that will enable continuous care, early detection, and personalized treatment insights.

The bottom line

Across cloud, edge and physical AI, every Arm prediction for 2026 shares a common thread: advanced intelligence-per-watt, everywhere. As the world enters a new era of computing, Arm’s position as the foundational compute platform powering the next wave of efficient, intelligent, scalable, and secure innovation has never been more vital. We cannot wait to see what happens next!

Any re-use permitted for informational and non-commercial or personal use only.