The Arm Ecosystem: Powering AI Everywhere – From Cloud to Edge

AI is reshaping technology faster than anyone imagined, becoming an integral part of everyday life. The Arm compute platform sits at the center of this transformation, with more than 310 billion Arm-based chips shipped to date in everything from consumer devices to AI-enabled vehicles and AI-first datacenters. This rapid AI evolution was brought to life today (May 19) in Taipei, Taiwan, during the Arm COMPUTEX keynote by Chris Bergey, SVP and GM of Arm’s Client Line of Business, who was joined on stage by senior leaders from two of our key partners, MediaTek and NVIDIA.

As Bergey explained, AI is changing everything at an unprecedented pace. Arm and our world-leading ecosystem are committed to relentless innovation and continuous investment across hardware and software to enable the next wave of AI that will transform billions of lives.

The expanding Arm compute platform: At the heart of innovation at COMPUTEX 2025

Over the years, we have evolved our product offering to match the needs of the markets we serve. Today, Arm is a compute platform company focused on a system-level approach that allows our partners to integrate technology and scale faster to meet the demands of AI. From cloud to edge, Arm is demonstrating leadership in compute performance and efficiency.

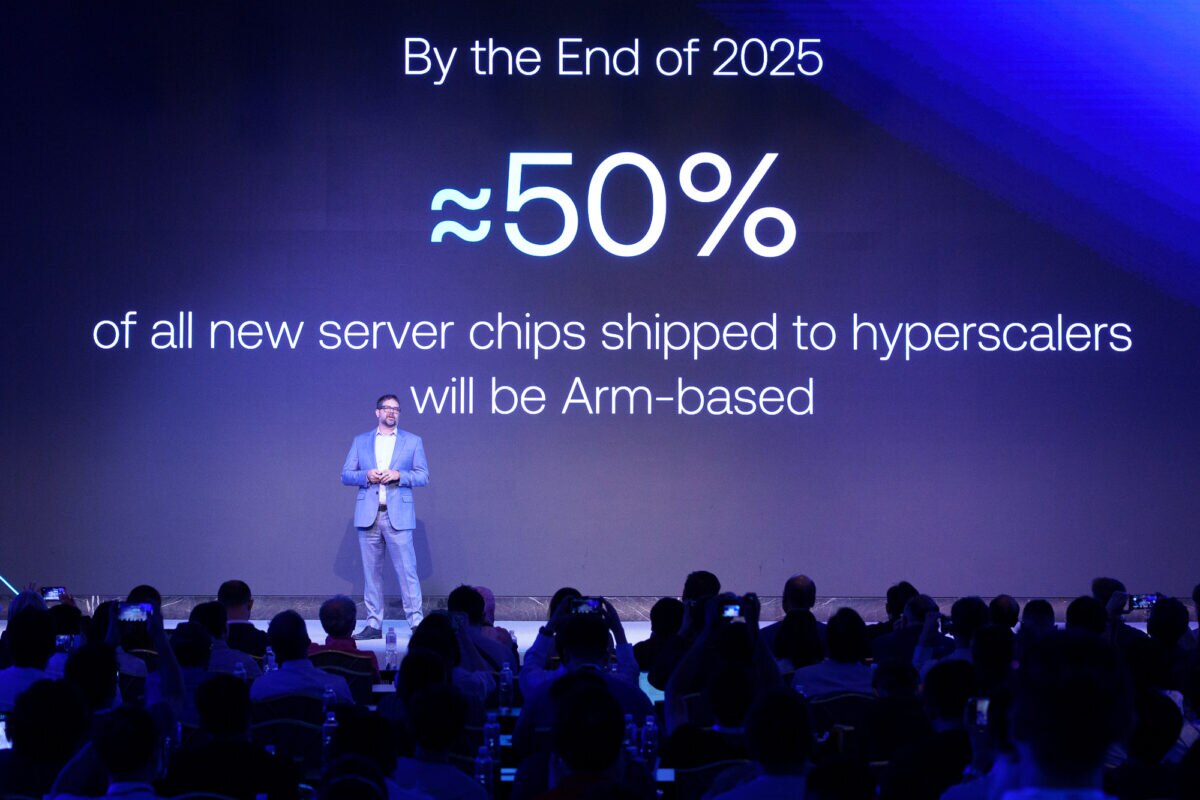

In the cloud and datacenter, close to 50 percent of all new server chips shipped to top hyperscalers in 2025 will be Arm-based, with AWS, Google and Microsoft expanding their own Arm-based datacenter chips. This growth is driven by insatiable demand for Arm’s power-efficient compute to handle complex AI inference and training workloads. Thanks to our unwavering commitment to improving performance per watt, Arm-powered chips from leading hyperscalers are up to 40 percent more energy-efficient than other platforms. In fact, at COMPUTEX, NVIDIA shared the latest momentum around its Arm-based NVIDIA Grace CPU, which is delivering performance and efficiency gains for demanding AI workloads across a range of real-world deployments, including ExxonMobil, Meta and high-performance computing centers in Texas and Taiwan.

Performance per watt is just as critical in edge devices. At last year’s COMPUTEX, we announced Arm Compute Subsystems (CSS) for Client as the compute platform for consumer devices, including the latest flagship AI smartphones and next-generation AI PCs. Alongside double-digit performance gains, CSS for Client delivers tangible benefits for users: faster app launches and smoother, longer-lasting AI-based experiences on their favorite devices.

With 99 percent of all smartphones running on Arm, we are driving demand in other consumer device markets for more performance, longer battery life and always-on efficiency. In fact, today’s AI PCs increasingly resemble modern smartphones: thin and light form factors, fanless designs, all-day battery life, always-on efficiency and leading-edge multimedia experiences, from video conferencing to streaming.

In recent years, demand for Arm-based PCs and tablets has surged, with the Arm compute platform set to power 40 percent of all shipments in 2025. The majority of the world’s most widely used applications now have Arm-native versions for Windows, bringing faster, more powerful PC experiences, including key AI PC use cases like chatbots and productivity enhancements. MediaTek recently raised the bar in the Chromebook market with the launch of the Arm-powered Kompanio Ultra SoC to deliver advanced AI and multimedia experiences to next-generation Chromebook Plus devices.

We are also seeing great momentum with NVIDIA’s DGX Spark, an AI desktop powered by the Grace Blackwell superchip with Armv9 CPUs, which puts high-performance AI in the hands of millions, with enough compute to run models of up to 200 billion parameters. DGX Spark is already gaining traction among developers and researchers building powerful next-generation AI models. And, at COMPUTEX, NVIDIA announced that some of the world’s leading system manufacturers, including Acer, ASUS, Dell Technologies, GIGABYTE, HP, Lenovo and MSI, are set to build NVIDIA DGX Spark and DGX Station systems.

Pushing the boundaries of what’s possible with Armv9

Arm’s reach across all these markets is made possible by the ubiquitous Armv9 architecture, the foundation of modern computing. Since its introduction four years ago, Armv9 has come to power many of the world’s latest mobile handsets and PCs, bringing a range of AI performance benefits to these devices. Later this year, the new flagship Armv9 CPU, Travis, will bring double-digit performance gains, with further acceleration of AI workloads through our latest architecture feature, the Scalable Matrix Extension (SME). It will be paired with Arm’s next-generation GPU, Drage, which unlocks sustained performance for longer gaming sessions and richer multimedia content, to deliver Arm Lumex CSS for the future of edge AI on consumer devices.

Supercharging AI workloads on Arm from cloud to edge

Alongside innovative hardware, Bergey reflected on Arm’s world-leading software ecosystem. Today, more than 22 million developers are building on Arm. The strength and breadth of this developer ecosystem is why Arm continues to make significant investments in software, so developers can move faster with less complexity.

With Arm Kleidi, our suite of AI software libraries announced at COMPUTEX last year, we’re giving developers instant access to performance optimizations for all Arm markets (automotive, cloud and datacenter, IoT, mobile and PC) across any AI model or workload, whether that’s audio, image, text or video. In just one year, we’ve integrated Arm KleidiAI with leading frameworks, including ExecuTorch, Hunyuan, llama.cpp, MediaPipe, MNN, ONNX Runtime and PyTorch. To date, Kleidi has achieved over 8 billion cumulative installs across Arm-based devices, with more planned for the future.

The ongoing AI transformation offers a once-in-a-lifetime opportunity

Looking ahead, AI’s relentless evolution and innovation continue. AI models are getting smarter, with 150 new foundation models covering audio, image, text and video generation released in the past 18 months alone. AI assistants that used to live in the cloud are now being developed for the edge first, as AI inference shifts massively to the edge. While billions are spent on training, it’s inference, where AI actually runs and adds value, that is driving future innovation and monetization opportunities. AI agents are also here and growing fast, enabling systems to independently execute complex tasks, collaborate with other agents and operate autonomously at scale. This is laying the foundation for physical AI that goes beyond digital-only applications and brings AI into real-world, physical environments, such as robotics. Across all of these shifts, scalable, efficient computing will matter more than ever.

Bergey closed the COMPUTEX keynote with a powerful message: the AI era presents a once-in-a-lifetime opportunity to redefine how technology impacts the world. However, realizing this potential will take ongoing innovation and deep collaboration across Arm’s world-leading ecosystem. With the Arm compute platform at the heart of this transformation from cloud to edge, we’re not just enabling AI everywhere today; we’re shaping its future. A future that is built on Arm.

Any re-use permitted for informational and non-commercial or personal use only.