Arm-native Google Chrome Enhances Windows on Arm Performance

Microsoft Windows 10 and Windows 11 incorporate Arm-native support, warranting the development of even more Arm-native apps for Windows. This support features additional tools to simplify app porting, enhance app performance, and reduce power consumption. As a result, many companies are now investing in Arm-native apps for Windows.

Previously, Arm talked about the excellent momentum behind the ecosystem of Windows on Arm applications, with Google Chrome being one of the prime examples. We wanted to put this to the test by exploring the range of improvements that native Arm support delivers for Google Chrome.

AArch64 Support for Google Chrome

The recent Google Chrome release that adds native AArch64 support for Windows provides a range of benefits for users, including:

- Enhanced performance: Arm-native support makes web browsing on Google Chrome faster and more efficient, with a significant performance boost compared to the emulated x86 version.

- Quicker web page loading: Slow-loading websites now load much faster in Arm-native Chrome. This improvement is due to optimized scripting, system tasks, and rendering processes.

- Improved JavaScript execution: JavaScript execution becomes notably faster when running Arm-native, enhancing the responsiveness of web applications and interactive elements.

- Better battery life: The lower power consumption of Arm-native code lets users spend more time on their devices between charges.

- Superior rendering speeds: Rendering times are drastically reduced, making web pages appear faster and smoother.

Performance comparison: Emulated x86 vs. Arm-native

To illustrate these benefits, we installed two builds of Google Chrome and used each to analyze the performance of a popular news website. The first is the release for x86_64 Windows, designated “Win64” (Version 125.0.6422.61 (Official Build)), which runs emulated on Windows on Arm. The second is the native release for AArch64 Windows, designated “Arm64.”

Using the “Performance” tab in Google Chrome’s Developer Tools, we quantified load and rendering speeds.

Emulated x86 Version: The website took nearly 16 seconds to load, with significant time spent on scripting (4.4 seconds), system tasks (1.7 seconds), and rendering (0.9 seconds).

Arm-native Version: Scripting time was reduced to 1.5 seconds (almost 3x shorter), system time to 0.4 seconds (4.25x shorter) and rendering to 0.18 seconds (5x shorter), indicating markedly faster loading and rendering due to native Arm execution.

Performance tests on other news websites showed similar results.
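The speedup factors quoted for the news website can be reproduced from the reported timings. The snippet below is a minimal sketch of that arithmetic; the dictionary and function names are chosen for illustration, and only the timing values come from the measurements above.

```python
# Timings (in seconds) reported above for the news-site test.
emulated_x86 = {"scripting": 4.4, "system": 1.7, "rendering": 0.9}
arm_native = {"scripting": 1.5, "system": 0.4, "rendering": 0.18}


def speedup(before: float, after: float) -> float:
    """Return how many times shorter `after` is than `before`."""
    return before / after


# One speedup factor per phase of the page load.
speedups = {
    phase: speedup(emulated_x86[phase], arm_native[phase])
    for phase in emulated_x86
}

for phase, factor in speedups.items():
    print(f"{phase}: {factor:.2f}x shorter")
```

Dividing the emulated time by the native time expresses each result as "N times shorter", which is the form used in the comparison above.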

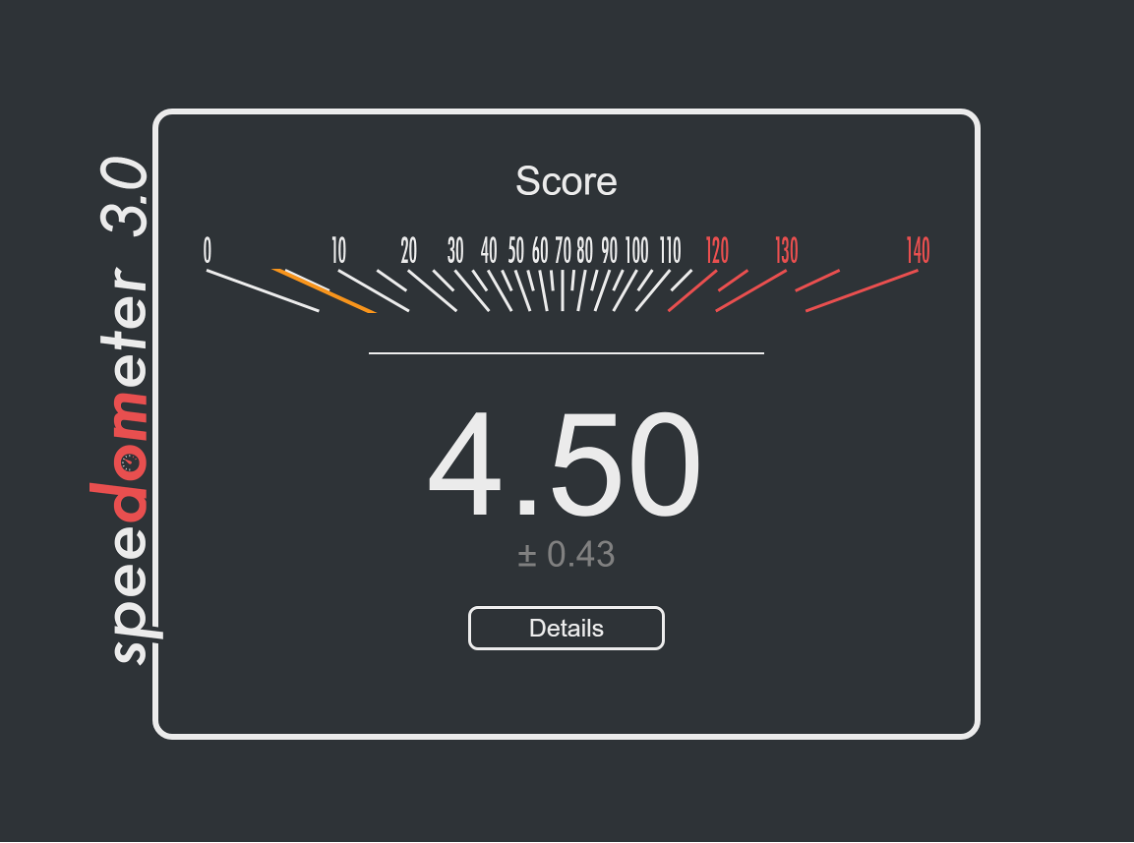

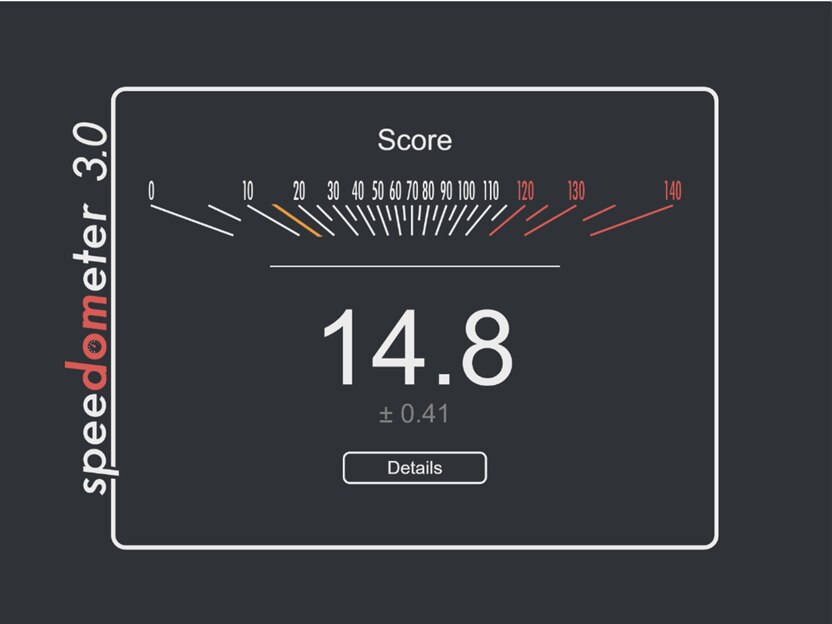

Speedometer 3.0 Benchmark

To further highlight the performance benefits of the Arm-native version of Google Chrome, we utilized the Speedometer 3.0 web browser benchmark. This is an open-source benchmark that measures the responsiveness of web applications by timing simulated user interactions across various workloads.

The benchmark tasks are designed to reflect practical web use cases, though some specifics pertain to Speedometer and should not be treated as general app development practices. The benchmark was created by the teams behind the major browser engines (Blink, Gecko, and WebKit) and has received significant input from companies including Google, Intel, Microsoft, and Mozilla.

We ran the Speedometer 3.0 benchmark on the emulated x86 and Arm-native versions of Google Chrome (tested on Windows Dev Kit 2023) and found that Arm-native support significantly enhances the responsiveness of web applications. As the results above show, the Arm-native performance score is more than three times better than the emulated x86 score. This further underscores the superior efficiency and performance of native Arm applications on Windows on Arm.

Running Inference with TensorFlow.js and MobileNet

TensorFlow.js is a JavaScript implementation of Google’s widely acclaimed TensorFlow library. It allows developers to use AI and machine learning (ML) when building interactive and dynamic browser-based applications. With TensorFlow.js, users can train and deploy AI models directly in the client-side environment, facilitating real-time data processing and analysis without the need for extensive server-side computation.

MobileNet is a class of efficient architectures designed specifically for mobile and embedded vision applications. It stands out due to its lightweight structure, enabling fast and efficient performance on devices with limited computational power and memory resources.

In Python applications that use TensorFlow, using MobileNet is straightforward:

Python:

    # MobileNet and its label decoder both ship with Keras.
    from tensorflow.keras.applications.mobilenet import MobileNet, decode_predictions

    model = MobileNet(weights='imagenet')

Next, you perform predictions on your input image:

    # input_image is assumed to be an already preprocessed batch of images.
    predictions = model.predict(input_image)

Please refer to the tutorial for a better example of training and inference.

These predictions can then be converted to actual labels:

    print('Predicted:', decode_predictions(predictions, top=3)[0])

Here, decode_predictions is a helper function that converts the model scores (probabilities) into labels describing the image content.

TensorFlow.js provides a similar interface:

    // MOBILENET_MODEL_PATH points to the converted MobileNet graph model.
    const model_tfjs = await tf.loadGraphModel(MOBILENET_MODEL_PATH);

Predictions can run after any necessary image pre-processing:

    const predictions = model_tfjs.predict(image);

Afterward, you convert them to labels or classes:

    // getTopKClasses is an application-level helper, not part of the TensorFlow.js API.
    const labels = await getTopKClasses(predictions, 3);

For a better sample web application, please refer to this example.
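In both snippets, the label-conversion step amounts to picking the highest-scoring classes. The following is a minimal sketch of that idea in plain Python, without TensorFlow. The function name top_k_labels, the class names, and the scores are all hypothetical and serve only to illustrate the step. This is not the implementation used by decode_predictions or getTopKClasses.

```python
from typing import Sequence


def top_k_labels(
    scores: Sequence[float], labels: Sequence[str], k: int = 3
) -> list[tuple[str, float]]:
    """Return the k highest-scoring (label, score) pairs, best first."""
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    # Pair each label with its score, then sort by score in descending order.
    ranked = sorted(zip(labels, scores), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


# Hypothetical class names and scores, for illustration only.
example_labels = ["tabby cat", "golden retriever", "sports car", "espresso"]
example_scores = [0.71, 0.04, 0.02, 0.23]

top3 = top_k_labels(example_scores, example_labels, k=3)
print("Predicted:", top3)
```

A real model such as MobileNet trained on ImageNet produces one score per class across a thousand classes, but the selection logic stays the same.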

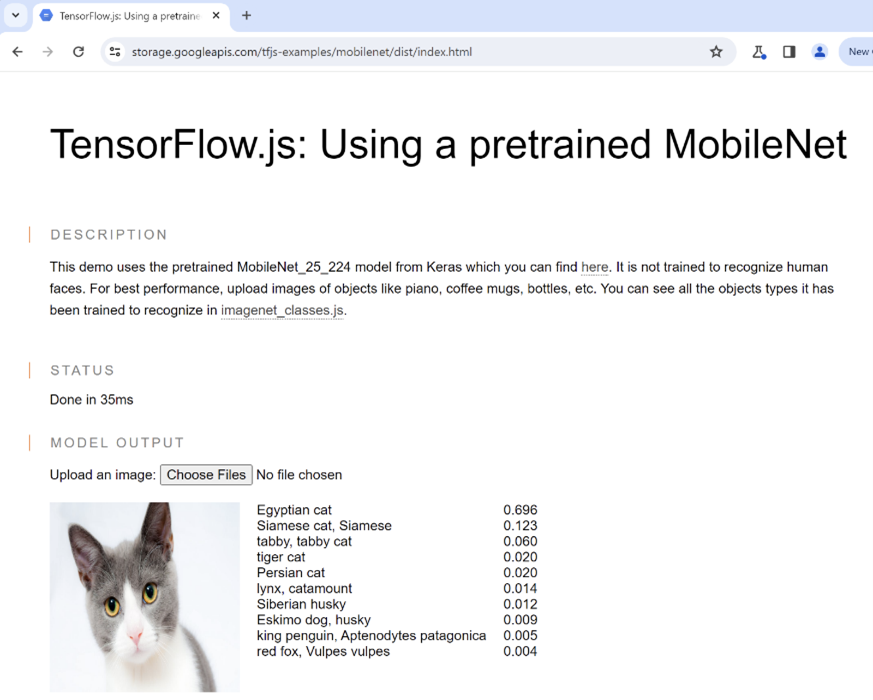

We ran the above web application in the emulated x86 Chrome web browser, as well as in the Arm-native version. Arm-native Chrome for Windows is available for anyone to download here.

The image above demonstrates the web app running in the Chrome web browser. The user interface of this application contains three core elements: a description section, a status indicator, and a model output display. The description section explains how the application was created. Upon uploading an image, the application springs into action, with the status component updating in real time to show the computation time. Once the image processing concludes, the model output takes center stage, revealing the recognized labels along with their corresponding scores.

The total processing time, including image preprocessing and AI inference, was almost 100 ms on the emulated x86 Chrome. The same operations took only 35 ms (roughly 35 percent as long, or close to 3x faster) on the Arm-native version of Google Chrome. The inference results (recognized labels and scores) were identical, as the same image was used as input.
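A processing time like the one shown by the status indicator can be obtained by wrapping the work in a timer. The sketch below assumes a hypothetical classify function standing in for preprocessing plus inference, with time_ms as an illustrative helper name. It then compares the two reported figures, taking 100 ms as an approximate upper bound for the emulated run.

```python
import time
from typing import Any, Callable


def time_ms(fn: Callable[..., Any], *args: Any) -> tuple[Any, float]:
    """Run fn(*args) once and return (result, elapsed milliseconds)."""
    start = time.perf_counter()
    result = fn(*args)
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    return result, elapsed_ms


def classify(image: list[float]) -> float:
    # Hypothetical stand-in for image preprocessing plus model inference.
    return sum(pixel * pixel for pixel in image)


result, elapsed = time_ms(classify, [0.5] * 10_000)
print(f"processing took {elapsed:.2f} ms")

# Approximate figures reported above, in milliseconds.
emulated_ms, native_ms = 100.0, 35.0
relative_time = native_ms / emulated_ms
speedup = emulated_ms / native_ms
print(f"native takes {relative_time:.0%} of the emulated time ({speedup:.1f}x faster)")
```

A single run is noisy, so repeated measurements with an average or median would give a steadier figure than one timed call.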

Delivering real performance for real needs

The integration of native Arm support in Google Chrome for Windows has led to significant performance enhancements, making web browsing faster, more efficient, and more responsive. These improvements are evident in both general web browsing and specific applications like TensorFlow.js with MobileNet, highlighting the growing importance of Arm-native support in the broader computing landscape. As more companies invest in Arm-native applications for Windows, users can anticipate continued advancements in efficiency and performance across a wide range of devices and applications.

At Arm, we are dedicated to driving innovation and delivering cutting-edge technology that empowers developers and enhances user experiences. The success of Arm-native support in Google Chrome exemplifies the transformative potential of Arm architecture in shaping the future of computing.

Check out Arm Learn for a series of step-by-step guides on Windows on Arm Development.

Any re-use permitted for informational and non-commercial or personal use only.