Arm Ethos-U85: Addressing the High Performance Demands of IoT in the Age of AI

As artificial intelligence (AI) has more influence on our day-to-day lives, AI inferencing is migrating from the cloud to the edge and to endpoint devices. Edge-based inferencing brings intelligence to a broad range of IoT devices, so that data can be processed locally and decisions made in real time, with improved data privacy and security.

How do Arm’s Ethos NPUs enhance AI performance at the edge and endpoints?

Arm has been developing edge AI accelerators for several years to support the growing demand for edge and endpoint inferencing workloads. With the Ethos-U55 and Ethos-U65 NPUs, we have two very successful products that bring high-performance, energy-efficient solutions to AI applications at the edge and endpoints.

Ethos-U55 is deployed in many Cortex-M based heterogeneous systems. Ethos-U65 extends the applicability of the Ethos-U family to Cortex-A-based systems while delivering twice the on-device machine learning (ML) performance. Both products offer a unified toolchain for easy development, and both support common ML network operations, including those used in convolutional neural networks (CNNs) and recurrent neural networks (RNNs).

What is the impact of transformer architecture on AI development?

Introduced in 2017, the transformer architecture has revolutionised generative AI and become the architecture of choice for many new neural networks. Transformer-based models process sequential data using attention mechanisms and have achieved state-of-the-art results in many AI tasks, such as machine translation, natural language understanding, speech recognition, segmentation and image captioning.

These models can be adapted and compressed to run efficiently on edge devices with little loss of accuracy, bringing state-of-the-art advances to many edge and endpoint use cases.
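One widely used way to compress a model for edge deployment is post-training int8 quantization, which matches the int8 weight format that Ethos-U85 supports. The sketch below is a minimal per-tensor symmetric scheme written for illustration only; the helper names are hypothetical, and production toolchains may instead use per-channel scales, asymmetric zero points and calibration data.

```python
def symmetric_scale(values):
    """Hypothetical helper: per-tensor scale so the largest magnitude maps to 127."""
    max_abs = max(abs(x) for x in values)
    return max_abs / 127 if max_abs > 0 else 1.0


def quantize_int8(values, scale, zero_point=0):
    """Affine quantization: q = round(x / scale) + zero_point, clamped to [-128, 127]."""
    return [max(-128, min(127, round(x / scale) + zero_point)) for x in values]


def dequantize_int8(q_values, scale, zero_point=0):
    """Inverse mapping: x is approximately (q - zero_point) * scale."""
    return [(q - zero_point) * scale for q in q_values]


weights = [0.5, -1.27, 0.02, 1.0]
scale = symmetric_scale(weights)
q = quantize_int8(weights, scale)
restored = dequantize_int8(q, scale)
print(q)
print(restored)
```

Each restored value lies within half a quantization step (scale / 2) of the original. That small error is the accuracy cost traded for weights that take one byte each instead of four bytes in float32.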

What are the key advantages of the Ethos-U85 NPU for edge and endpoint workloads?

Building on the success of the previous Ethos-U NPUs, we are introducing a new product, Ethos-U85. It follows the same high-performance, energy-efficient philosophy as earlier Ethos-U NPUs, while enabling current and upcoming transformer-based workloads at the edge and endpoints.

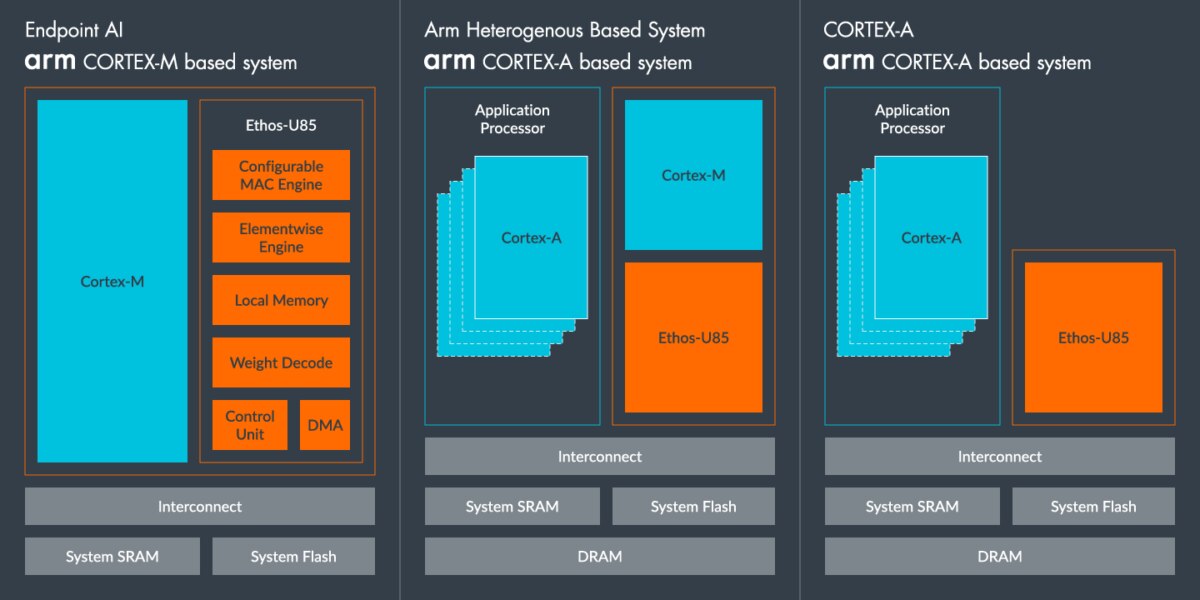

Ethos-U85 is our third-generation NPU from Arm’s Ethos-U product line and the highest-performing, most energy-efficient Ethos NPU to date. It delivers a 4x performance uplift and 20% higher power efficiency compared with its predecessor, with up to 85% utilization on popular networks. This addresses IoT applications with even greater performance demands, such as factory automation and commercial or smart home cameras. It is also designed to work with both Cortex-M and Cortex-A-based systems, and it tolerates high DRAM latencies.

Some of the key features for Ethos-U85 include:

- Support for configurations from 128 to 2048 MACs/cycle (256 GOPS to 4 TOPS at 1 GHz).

- Support for int8 weights and int8 or int16 activations.

- Support for transformer architecture networks, along with CNNs and RNNs.

- Native hardware support for 2/4 sparsity, doubling throughput.

- Internal SRAM of 29 to 267 KB and up to six 128-bit AXI5 interfaces.

- Support for weight compression, with both standard and fast weight decoders.

- Support for extended compression.
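The throughput range in the first bullet follows from counting each multiply-accumulate as two operations (one multiply, one add). The sketch below reproduces that arithmetic and, assuming 2/4 sparsity means at most two non-zero weights in every group of four, shows one simple way to prune weights into that pattern. The helper names and the magnitude-based pruning rule are illustrative choices, not Arm's tooling.

```python
OPS_PER_MAC = 2  # one multiply plus one accumulate


def peak_ops_per_second(macs_per_cycle, clock_hz, sparse_2_4=False):
    """Peak operations/s; 2/4 sparsity is modelled as doubling effective throughput."""
    ops = macs_per_cycle * OPS_PER_MAC * clock_hz
    return ops * 2 if sparse_2_4 else ops


def prune_2_of_4(weights):
    """Keep the two largest-magnitude weights in each group of four; zero the rest."""
    if len(weights) % 4 != 0:
        raise ValueError("weight count must be a multiple of 4")
    pruned = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two largest-magnitude weights in this group.
        keep = sorted(range(4), key=lambda j: abs(group[j]), reverse=True)[:2]
        pruned.extend(w if j in keep else 0 for j, w in enumerate(group))
    return pruned


for macs in (128, 2048):
    gops = peak_ops_per_second(macs, 1_000_000_000) / 1e9
    print(macs, "MACs/cycle:", gops, "GOPS at 1 GHz")

print(prune_2_of_4([3, -1, 0, 5, 1, 2, -4, 0]))
```

With 128 MACs/cycle this gives 256 GOPS and with 2048 MACs/cycle about 4 TOPS, matching the bullet. Because each pruned group of four holds at most two non-zero weights, hardware that skips the zeros needs only half the multiply-accumulates for the same layer, which is consistent with the doubled throughput quoted for 2/4 sparsity.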

In addition to the operators currently supported by Ethos-U55 and Ethos-U65, Ethos-U85 will include native hardware support for transformer networks and the DeepLabV3 semantic segmentation network by supporting operators such as TRANSPOSE, GATHER, MATMUL, RESIZE BILINEAR, and ARGMAX.
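To show why these operators matter, the plain-Python sketch below builds single-head scaled dot-product attention from TRANSPOSE and MATMUL, uses GATHER for an embedding lookup, and uses ARGMAX to turn per-pixel class scores into a label map, as in the final step of a segmentation network such as DeepLabV3. It is a reference illustration only: it does not reflect how Ethos-U85 or its compiler schedules these operators, and softmax is included simply to complete the attention formula.

```python
import math


def transpose(m):
    """TRANSPOSE: swap rows and columns of a 2-D matrix."""
    return [list(col) for col in zip(*m)]


def matmul(a, b):
    """MATMUL: (n x k) times (k x m) gives (n x m)."""
    b_cols = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in b_cols] for row in a]


def gather(table, indices):
    """GATHER: select rows of a table, e.g. token embeddings by token id."""
    return [list(table[i]) for i in indices]


def softmax_rows(m):
    """Numerically stable softmax applied to each row."""
    result = []
    for row in m:
        peak = max(row)
        exps = [math.exp(v - peak) for v in row]
        total = sum(exps)
        result.append([e / total for e in exps])
    return result


def attention(q, k, v):
    """softmax(Q K^T / sqrt(d)) V for a single attention head."""
    d = len(q[0])
    scores = matmul(q, transpose(k))
    scaled = [[s / math.sqrt(d) for s in row] for row in scores]
    return matmul(softmax_rows(scaled), v)


def argmax_per_pixel(logits):
    """ARGMAX over the class axis: logits[c][p] gives the best class for pixel p."""
    num_classes, num_pixels = len(logits), len(logits[0])
    return [max(range(num_classes), key=lambda c: logits[c][p]) for p in range(num_pixels)]


embeddings = [[0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
tokens = gather(embeddings, [2, 0])  # look up two token ids
print(attention(tokens, tokens, tokens))
print(argmax_per_pixel([[0.1, 0.9], [0.8, 0.2]]))
```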

Ethos-U85 also supports elementwise operator chaining. Chaining combines an elementwise operation with the preceding operation, so the intermediate tensor does not have to be written to SRAM and then read back. This can improve NPU efficiency by reducing the amount of data transferred between the NPU and memory. Chaining is one of several efficiency improvements in Ethos-U85 over Ethos-U65, along with the fast weight decoder, a more power-efficient MAC array, and more efficient elementwise operations.
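The benefit of chaining can be seen in a toy traffic model. In the sketch below, a producer operation (standing in for, say, a convolution) and an elementwise operation run either as two separate passes, with the intermediate tensor held in a buffer that models SRAM, or fused into a single pass. The function names and the byte counting are simplifications for illustration and do not model Ethos-U85's actual memory system.

```python
def run_unchained(inputs, producer, elementwise, bytes_per_element=1):
    """Two passes: write the full intermediate tensor, then read it back."""
    traffic = 0
    intermediate = []  # models the tensor held in SRAM between the two operations
    for x in inputs:
        intermediate.append(producer(x))
        traffic += bytes_per_element  # write intermediate element
    outputs = []
    for t in intermediate:
        traffic += bytes_per_element  # read intermediate element back
        outputs.append(elementwise(t))
    return outputs, traffic


def run_chained(inputs, producer, elementwise, bytes_per_element=1):
    """One pass: each producer result feeds the elementwise op directly.

    bytes_per_element is kept only so both functions share a signature.
    """
    outputs = [elementwise(producer(x)) for x in inputs]
    return outputs, 0  # no intermediate tensor is written or read


def triple(x):
    return 3 * x


def relu_minus_5(t):
    return max(0, t - 5)


data = list(range(8))
print(run_unchained(data, triple, relu_minus_5))
print(run_chained(data, triple, relu_minus_5))
```

Both versions compute identical outputs; only the intermediate traffic differs. With int16 activations, passing bytes_per_element=2 doubles the traffic that chaining avoids.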

Ethos-U85 can be used in the same system configurations as Ethos-U55 and Ethos-U65, and we are also introducing the capability to drive Ethos-U85 directly from a Cortex-A-based system.

Ethos-U85 will also support the same software toolchain established for the previous Ethos-U products, which uses the TensorFlow Lite Micro (TFLmicro) runtime. This extends the value of investments already made in Cortex-A and Cortex-M systems with Ethos-U55 or Ethos-U65, as Ethos-U85 builds on them to enable wider use cases based on transformer networks. In the future, we expect to enable support for ExecuTorch, a PyTorch runtime for edge devices.

Operators supported by Ethos-U85 are accelerated on the NPU itself. Any unsupported operators fall back to the CPU, and on Cortex-M based systems some of them can be accelerated using CMSIS-NN. In some cases no fallback is needed at all: TinyLlama, for example, was mapped fully onto Ethos-U85, with no operators falling back to a CPU.
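The split between NPU and CPU execution can be pictured as partitioning a model's operator sequence into runs of supported and unsupported operators. The sketch below does this for a linear list of operators. The supported set is a hypothetical subset written with TensorFlow Lite builtin operator names, not the real Ethos-U85 operator list, and CUSTOM_OP is a made-up placeholder; a real toolchain works on a graph and may apply further constraints, such as tensor shapes and data types.

```python
# Hypothetical subset for illustration only; not the real Ethos-U85 operator list.
NPU_SUPPORTED = {
    "CONV_2D", "FULLY_CONNECTED", "ADD", "MUL", "SOFTMAX",
    "TRANSPOSE", "GATHER", "BATCH_MATMUL", "RESIZE_BILINEAR", "ARG_MAX",
}


def partition(ops, supported=NPU_SUPPORTED):
    """Group consecutive operators into (target, [ops]) segments."""
    segments = []
    for op in ops:
        # Unsupported ops run on the CPU (e.g. CMSIS-NN kernels on Cortex-M).
        target = "NPU" if op in supported else "CPU"
        if segments and segments[-1][0] == target:
            segments[-1][1].append(op)
        else:
            segments.append((target, [op]))
    return segments


print(partition(["CONV_2D", "ADD", "CUSTOM_OP", "SOFTMAX"]))
print(partition(["GATHER", "BATCH_MATMUL", "SOFTMAX", "BATCH_MATMUL"]))
```

A fully supported model, like the TinyLlama example above, yields a single NPU segment and no CPU fallback, which avoids the cost of handing tensors back and forth between the NPU and the CPU.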

Finally, Ethos-U85 sits at the heart of Corstone-320, our latest IoT Reference Design Platform. This helps accelerate the development and deployment of high-performance systems-on-chip (SoCs) across a variety of AI-based IoT solutions.

Unleash every AI capability at the edge

Ethos-U85 will bring the compute power necessary to execute many state-of-the-art AI capabilities at the edge and on endpoint devices. As the world of AI develops, our partners will have reliable, efficient, and high performing Ethos-U based solutions. We expect to see Ethos-U85 deployed in emerging edge AI use cases, in smart home, retail or industrial settings, where there is demand for higher performance compute with support for the latest AI frameworks.

At Arm, we take pride in enabling our partners and ecosystem with cutting-edge hardware and software solutions. With Ethos-U85, we are opening up a world of possibilities for transformative edge and endpoint AI inference use cases. Arm is taking edge AI innovation to the next level as we continue to build the future of edge AI on Arm.


Any re-use permitted for informational and non-commercial or personal use only.