Introducing Cortex-A320: Ultra-efficient Armv9 CPU Optimized for IoT

In today’s evolving IoT landscape, where software complexity continues to increase, edge devices require more performance, efficiency, and security than ever. The Arm Cortex-A portfolio meets this demand by bringing advanced computing capabilities to power-constrained devices, delivering enhanced AI processing, robust security and optimized efficiency across diverse markets. The Cortex-A3xx series specifically delivers ultra-efficient solutions and optimized performance across various market segments, including consumer devices and cloud services. More importantly, it provides a powerful and scalable solution for the rapidly growing and highly diverse IoT market, making it particularly ideal for edge AI applications.

Edge AI requires increasingly higher compute performance, stronger security, and greater software flexibility. As software complexity grows, the Armv9 architecture has been introduced to provide advanced machine learning (ML) and AI capabilities, along with enhanced security features. This leading-edge architecture is now deployed in the ultra-efficient Cortex-A3xx tier, providing a robust foundation for next-generation edge AI applications.

Cortex-A320: The smallest Armv9 implementation

Today, Arm introduces the Cortex-A320, the first ultra-efficient Cortex-A processor implementing the Armv9 architecture. Cortex-A320 is an AArch64 CPU based on the Armv9.2-A version of the architecture. Its microarchitecture is derived from Cortex-A520 but has been significantly optimized to reduce area and power.

Efficiency improvements of over 50% compared to the Cortex-A520 are achieved through multiple microarchitecture updates. These include a narrow fetch and decode datapath, densely banked L1 caches, a reduced-port integer register file, and other optimizations.

Significant microarchitecture innovations, such as efficient branch predictors and pre-fetchers, together with memory system improvements, have also boosted Cortex-A320’s scalar performance by more than 30% in SPECINT2K6 compared to its predecessor, Cortex-A35.

Most importantly, by integrating the Armv9 enhancements in the NEON and Scalable Vector Extension 2 (SVE2) vector processing technologies, Cortex-A320 delivers a 10x uplift in ML processing compared to Cortex-A35, as measured in int8 General Matrix Multiplication (GEMM). With support for new data types, such as BF16, and new dot product and matrix multiplication instructions, Cortex-A320 achieves up to 6x higher ML performance than Cortex-A53, the world’s most popular Armv8-A CPU.
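For readers unfamiliar with the benchmark, the following is a minimal pure-Python sketch of what an int8 GEMM computes: 8-bit inputs are multiplied and summed into a wider accumulator. It is only a reference for the arithmetic, not Arm's benchmark code, and the function name `gemm_int8` is an illustrative assumption. Dot product and matrix multiplication instructions target the inner multiply-accumulate loop shown here.

```python
def gemm_int8(a, b):
    """Reference int8 GEMM: C = A x B with wide accumulation.

    a is an M x K list of lists and b is K x N; every entry must fit in int8.
    """
    m, k, n = len(a), len(b), len(b[0])
    for row in a:
        if len(row) != k:
            raise ValueError("inner dimensions of A and B do not match")
    for row in a + b:
        for value in row:
            if not -128 <= value <= 127:
                raise ValueError("value out of int8 range")

    c = [[0] * n for _ in range(m)]
    for i in range(m):
        for j in range(n):
            acc = 0  # stands in for a 32-bit accumulator
            for p in range(k):
                acc += a[i][p] * b[p][j]  # one multiply-accumulate (MAC)
            c[i][j] = acc
    return c


print(gemm_int8([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
```

Note that even a two-element dot product of int8 values can exceed the int8 range, which is why the accumulator is wider than the inputs.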

The significant improvements in ML capabilities, combined with the high area and energy efficiency, qualify Cortex-A320 as the most efficient core in ML applications across all Arm Cortex-A CPUs.

Cortex-A320 also delivers a multifold ML performance increase over the Arm Cortex-M processors: for example, up to 8x higher GEMM performance compared to Cortex-M85, the highest performing Cortex-M CPU. This performance boost isn’t just due to the Armv9 enhancements in AI processing; it also stems from significantly improved memory access performance and increased frequencies in the Cortex-A320.

Additionally, its A-profile architecture, multi-core execution, and flexible memory management make Cortex-A320 a suitable candidate for designs that need to scale performance beyond high-performance Cortex-M microcontrollers.

Optimized microarchitecture for high efficiency

Cortex-A320 is a single-issue, in-order CPU with a 32-bit instruction fetch, implementing an optimized 8-stage pipeline with a compact forwarding network, to achieve higher frequency points than Cortex-A520.

Cortex-A320 offers scalability within a cluster by supporting single-core to quad-core configurations. It features DSU-120T, a streamlined DynamIQ Shared Unit (DSU), which enables Cortex-A320-only clusters. DSU-120T is a minimal DSU implementation, significantly reducing complexity, area, and power consumption, thereby maximizing efficiency for low-end Cortex-A based designs.

Cortex-A320 supports L1 caches of up to 64KB and an L2 cache of up to 512KB, and it has a 256-bit AMBA5 AXI interface to external memory. The L2 cache and the L2 TLB can be shared between the Cortex-A320 CPUs. The vector processing unit, which implements the NEON and SVE2 SIMD (Single Instruction, Multiple Data) technologies, can be either private within a single-core complex or shared between two cores in a dual-core or quad-core implementation.

Versatile benefits to target multiple markets

Cortex-A320 ensures compatibility with edge and infrastructure devices, while delivering efficiency and scalability. It benefits from the extensive open-source Linux support, a robust security ecosystem, and – more importantly – key Armv9 architecture advancements.

Apart from the ML improvements delivered by the updates to the NEON and SVE2 vector processing technologies, the Armv9 architecture brings significant enhancements to security, which is key to any IoT and embedded system. Cortex-A320 brings important security features to the ultra-efficient Cortex-A tier, such as the Memory Tagging Extension (MTE), which provides enhanced memory safety, as well as Pointer Authentication (PAC) and Branch Target Identification (BTI), which mitigate jump-oriented and return-oriented programming attacks.
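To illustrate the idea behind memory tagging, here is a toy Python model, offered as a conceptual sketch only. It assumes the architectural basics of MTE (a 4-bit tag per 16-byte granule, with the pointer's tag carried in address bits 59:56); the `TaggedMemory` class and its methods are hypothetical names, and on real hardware the check is performed by the CPU on each load and store rather than in software.

```python
GRANULE = 16    # bytes covered by one memory tag
TAG_SHIFT = 56  # the pointer's tag lives in bits [59:56]
TAG_MASK = 0xF  # tags are 4 bits wide


class TaggedMemory:
    """Toy model: every 16-byte granule carries a tag checked on access."""

    def __init__(self, size):
        self.data = bytearray(size)
        self.tags = [0] * (size // GRANULE)

    def allocate(self, addr, length, tag):
        """Tag the granules of a (granule-aligned) buffer, return a tagged pointer."""
        first = addr // GRANULE
        last = (addr + length + GRANULE - 1) // GRANULE
        for granule in range(first, last):
            self.tags[granule] = tag & TAG_MASK
        return addr | ((tag & TAG_MASK) << TAG_SHIFT)

    def load(self, ptr):
        addr = ptr & ((1 << TAG_SHIFT) - 1)
        ptr_tag = (ptr >> TAG_SHIFT) & TAG_MASK
        if self.tags[addr // GRANULE] != ptr_tag:
            raise MemoryError(f"tag check fault at {addr:#x}")
        return self.data[addr]


mem = TaggedMemory(64)
buf = mem.allocate(0, 16, tag=0x3)
mem.load(buf)            # in bounds: pointer tag matches memory tag
try:
    mem.load(buf + 16)   # one byte past the buffer: tags differ
except MemoryError as fault:
    print(fault)
```

In this model, a linear buffer overflow is caught because the neighbouring granule carries a different tag from the pointer used to reach it.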

One of the key Armv9 features adopted by the Cortex-A320 is Secure EL2 (Exception Level 2), which enhances software isolation in TrustZone and facilitates the secure execution of software containers on edge devices. For more details, visit the Secure Virtualization page.

The Cortex-A320 leverages all these benefits across a wide range of applications, from low-end general purpose MPUs, smart speakers, and software-defined smart cameras, to factory floor autonomous vehicles, automated edge AI assistants, AI-enabled human-machine interfaces, and utility robot controllers. Apart from edge AI applications, other key market segments are also benefitting from Cortex-A320, like smartwatches and smart wearables, as well as infrastructure devices, such as Baseboard Management Controllers (BMC) for servers.

Cortex-A320 can also be an ideal fit for applications where a high-performance Cortex-M is traditionally used, such as battery-operated MCU use-cases or applications running a real-time operating system (RTOS), but which need to scale up performance through symmetric multi-processing, which is supported out of the box in the A-profile architecture.

It can also be a suitable candidate for RTOS applications that require Cortex-A memory management or address translation features for enhanced software flexibility. For example, Cortex-A320 can be appropriate for use-cases that require downloading apps onto an MCU device, where a memory management unit (MMU) is necessary for code relocation across the memory map.
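The role of the MMU in code relocation can be sketched with a toy single-level page table in Python. This is a simplified illustration, not the Armv9-A translation scheme, which uses multi-level translation tables; the `ToyMMU` class, the 4KB page size, and the addresses are all assumptions chosen for the example.

```python
PAGE_SIZE = 4096  # 4KB pages, assumed for this sketch


class ToyMMU:
    """Single-level page table: virtual page number -> physical frame number."""

    def __init__(self):
        self.page_table = {}

    def map_page(self, virt_addr, phys_addr):
        if virt_addr % PAGE_SIZE or phys_addr % PAGE_SIZE:
            raise ValueError("addresses must be page aligned")
        self.page_table[virt_addr // PAGE_SIZE] = phys_addr // PAGE_SIZE

    def translate(self, virt_addr):
        vpn, offset = divmod(virt_addr, PAGE_SIZE)
        if vpn not in self.page_table:
            raise LookupError(f"translation fault at {virt_addr:#x}")
        return self.page_table[vpn] * PAGE_SIZE + offset


# The downloaded app is always linked to run at the same virtual address...
APP_BASE = 0x0040_0000

mmu = ToyMMU()
# ...but the loader can place it wherever physical memory happens to be free.
mmu.map_page(APP_BASE, 0x8123_4000)
print(hex(mmu.translate(APP_BASE + 0x10)))
```

Because the app only ever sees virtual addresses, the same binary can be loaded at a different physical location on each device without being relinked.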

At the same time, due to the wider addressing space, Cortex-A320 can be an efficient solution for heterogeneous multicore use cases which combine a big Cortex-A with a microcontroller class core. Cortex-A320 enables Arm’s partners to use a small architecturally compatible core alongside the bigger Cortex-A processor, so that the memory architecture is simplified.

On the other hand, thanks to its A-profile characteristics, Cortex-A320 can provide out of the box Linux support and enable software portability for Android or any existing rich operating system. Cortex-A320 brings unprecedented levels of flexibility, to target multiple market segments, applications and operating systems.

Introducing the Armv9 heterogeneous Edge AI platform

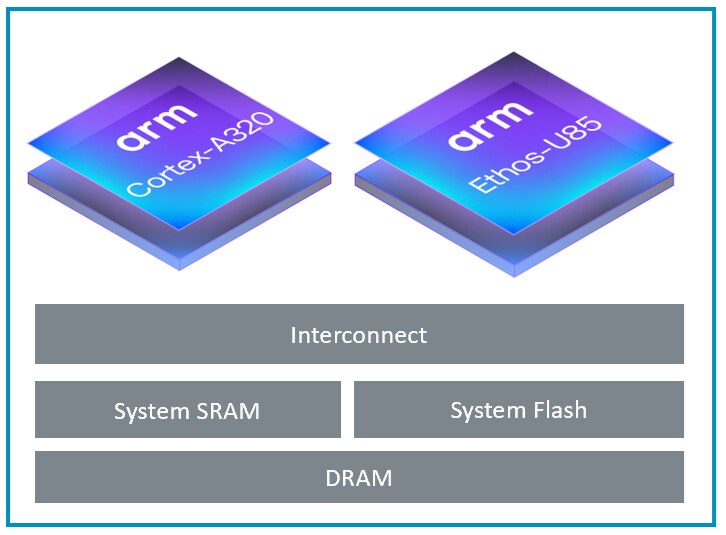

Our latest Ethos-U85 NPU is designed to tolerate the higher latency memories generally found in Cortex-A based systems and works well with the Cortex-A320.

The Ethos-U85 driver has now been updated so that Ethos-U85 can be driven directly by a Cortex-A320, without the need for a Cortex-M based ML island. This update improves latency and allows Arm partners to remove the cost and complexity of using a Cortex-M to drive the NPU.

Moreover, the memory access performance and enhanced memory system of Cortex-A320 allow the execution of larger ML models, such as large language models (LLMs) with more than 1 billion parameters, which cannot run effectively on Cortex-M based systems due to the limited addressable memory space.

Ethos-U NPUs work with quantized datatypes to meet the cost and energy requirements of the most constrained edge AI use-cases. Any ML operators and datatypes that are not supported by the Ethos-U85 will fall back automatically to the Cortex-A320, exploiting the NEON/SVE2 engine for acceleration.
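The fallback idea can be pictured with a short Python sketch that assigns each operator in a model to the NPU when its operator and datatype pair is supported, and to the CPU otherwise. The support table, operator names, and the `assign_backends` function are hypothetical and do not reflect the real Ethos-U85 operator list or how the actual driver and toolchain implement fallback.

```python
# Hypothetical support table, NOT the real Ethos-U85 operator list.
NPU_SUPPORTED = {
    ("conv2d", "int8"),
    ("depthwise_conv2d", "int8"),
    ("fully_connected", "int8"),
    ("add", "int8"),
}


def assign_backends(graph):
    """Return (name, backend) pairs for each (name, op, dtype) operator, in order."""
    placement = []
    for name, op, dtype in graph:
        backend = "npu" if (op, dtype) in NPU_SUPPORTED else "cpu"
        placement.append((name, backend))
    return placement


model = [
    ("stem", "conv2d", "int8"),
    ("head", "fully_connected", "int8"),
    ("probs", "softmax", "fp32"),  # unsupported here, so it falls back to the CPU
]
print(assign_backends(model))
```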

Due to the significant ML improvements in the Armv9 architecture, a quad-core Cortex-A320 can deliver up to 256 GOPS, based on 8-bit MACs per cycle when running at 2GHz. As a result, Cortex-A320 can run advanced ML and AI use-cases directly on the CPU, without the need for an external accelerator. This can save system area, power and complexity for devices targeting a wide range of ML and AI applications that require up to roughly 0.25 TOPS.
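As a back-of-the-envelope check, the quoted cluster figure can be broken down into per-core throughput. The Python sketch below uses only the numbers given in the text (256 GOPS, four cores, 2GHz); the convention that one MAC counts as two operations (a multiply and an add) is an assumption, and the function name is illustrative.

```python
def ops_per_cycle_per_core(total_gops, freq_ghz, cores):
    """Back out per-core operations per cycle from a cluster-level GOPS figure."""
    return total_gops / freq_ghz / cores


# Figures quoted in the text: 256 GOPS, quad-core, 2GHz.
ops = ops_per_cycle_per_core(total_gops=256, freq_ghz=2.0, cores=4)
macs = ops / 2  # assumption: one MAC counts as two operations (multiply + add)

print(f"{ops:.0f} ops/cycle/core, {macs:.0f} MACs/cycle/core")
print(f"{256 / 1000:.3f} TOPS")  # 0.256, quoted in the text as 0.25
```

Under that assumption, the figure corresponds to 16 8-bit MACs per cycle on each core.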

A new era for Edge AI is coming

Bringing Armv9 security and unprecedented AI performance levels into the ultra-efficient Cortex-A tier, Cortex-A320 offers new possibilities to software developers to develop and deploy ever more demanding use-cases, opening a new era for edge AI devices. By combining the A-profile architecture and the software ecosystem around it, with efficiency and flexibility, Cortex-A320 brings scalability and versatility to target multiple markets in IoT and beyond.


Any re-use permitted for informational and non-commercial or personal use only.