AR Has a Perception Problem. Here’s How We Plan to Solve It.

Augmented reality (AR) technology has a lot to get right. At a system level, it relies on a range of components working together, such as sensor fusion and display technology. At a device level, the challenge is building hardware ergonomic enough that consumers are happy to wear it on their face for hours on end. And ultimately, content is king: the immersion has to hold up. If any one aspect fails to deliver, the overall experience is compromised.

And yet one shortcoming of the AR experience stands out above all others for me: the vergence-accommodation conflict (VAC), the primary cause of the headaches and eye strain people experience while using AR.

AR technologies today rely on the same stereoscopic techniques that date back to the 1830s, when we first understood how our two eyes work together to perceive depth. By delivering slightly different images to each eye, we can trick the brain into stereopsis: fusing the two images into a single 3D image in which we perceive depth.

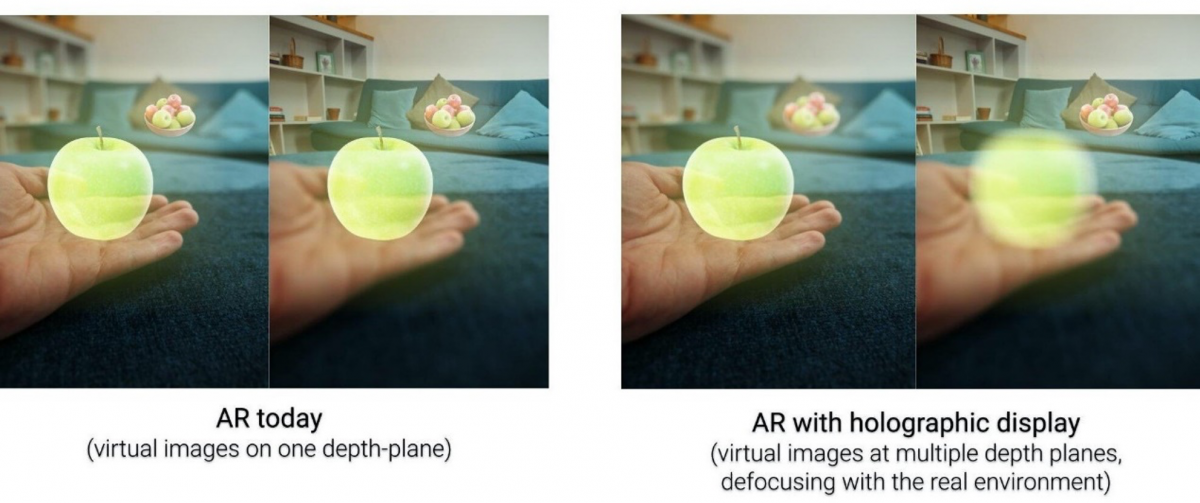

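The problem with this technique is that while it lets us perceive the depth of a scene, it does not let us focus on any one part of it. Hold a finger up in front of your face and focus on it: this text naturally falls out of focus. In AR, that doesn’t happen; everything remains in focus all the time. Your eyes converge (vergence) at the depth implied by the two images, but they must focus (accommodate) at the fixed distance of the display’s image plane, and it is this mismatch that gives the vergence-accommodation conflict its name.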

To fix this, we need a solution that not only gives users full depth perception but can also present content at multiple depth planes, as shown in the image above on the right. At VividQ, we believe that solution is holographic display.
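To make the conflict concrete, here is a minimal Python sketch of a conventional stereo headset. It assumes an interpupillary distance of 63 mm and a single virtual image plane at 2 m; both are illustrative values chosen for this example, not specifications of any particular device. Depth is encoded only as a horizontal offset (disparity) between the two images drawn on that one plane, so the vergence angle changes with the object's depth while the focus demand stays pinned at the plane. The resulting gap is expressed in diopters (1/m).

```python
import math

# Illustrative assumptions (not figures from this article):
IPD_M = 0.063          # interpupillary distance, ~63 mm
FOCAL_PLANE_M = 2.0    # fixed distance of the headset's virtual image plane


def screen_x(eye_x: float, point_x: float, point_z: float, plane_z: float) -> float:
    """Where the ray from an eye to a 3D point crosses the image plane."""
    return eye_x + (point_x - eye_x) * plane_z / point_z


def disparity(point_z: float, point_x: float = 0.0) -> float:
    """Right-eye minus left-eye image position on the plane, in metres.
    Negative (crossed) for points nearer than the plane, zero on it."""
    left = screen_x(-IPD_M / 2, point_x, point_z, FOCAL_PLANE_M)
    right = screen_x(IPD_M / 2, point_x, point_z, FOCAL_PLANE_M)
    return right - left


def vergence_deg(distance_m: float) -> float:
    """Angle between the two lines of sight when fixating straight ahead."""
    return math.degrees(2 * math.atan((IPD_M / 2) / distance_m))


def vac_diopters(virtual_m: float) -> float:
    """Vergence demand (1 / object distance) minus accommodation demand
    (1 / focal-plane distance). Zero only when the object sits on the plane."""
    return 1.0 / virtual_m - 1.0 / FOCAL_PLANE_M


for z in (0.3, 0.5, 1.0, 2.0, 5.0):
    print(f"object at {z:>4} m: disparity {disparity(z) * 1000:+7.1f} mm, "
          f"vergence {vergence_deg(z):5.2f} deg, "
          f"focus fixed at {FOCAL_PLANE_M} m, conflict {vac_diopters(z):+.2f} D")
```

Only objects rendered exactly on the 2 m plane are free of conflict; a virtual object at arm's length (0.5 m here) asks the eyes to converge for 2.0 D while focusing at 0.5 D, a 1.5 D mismatch.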

Holographic display 101

Holographic display is the process of engineering light to project three-dimensional holograms that possess a natural depth of field. We’ve all seen holograms as they appear in science fiction, yet it’s a common misunderstanding that holograms are actual images that people look at directly. As discussed in this Arm blog on the magic (and science) behind holograms, a hologram is really a set of instructions that tell multiple lasers how to intersect their beams so that the illusion of a 3D object is created in mid-air.

Combining holographic display with computer-generated holography (CGH), the method of computing holograms digitally from various 3D data sources, provides the information required to present a three-dimensional scene, with depth, to the end user. This is crucial for AR, as it mimics how light from real-world objects is delivered to the eye. It’s a far more natural experience.
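As a rough illustration of what "computing a hologram from 3D data" can mean, the sketch below implements the textbook point-source method in plain Python: every scene point emits a spherical wave, the waves are summed at each hologram pixel, and only the phase is kept. This is a generic teaching example, not VividQ's algorithm, which this article does not describe. The pixel pitch (8 µm), wavelength (520 nm), phase-only output (as would drive a phase-modulating spatial light modulator) and the function name `point_source_hologram` are all assumptions made for illustration.

```python
import cmath
import math


def point_source_hologram(points, nx, ny, pitch_m, wavelength_m):
    """Naive point-source computer-generated hologram (illustrative only).

    points: iterable of (x, y, z, amplitude) in metres, where z > 0 is the
    distance of each point in front of the hologram plane.
    Returns an ny-by-nx list of phase values in radians (a phase-only hologram).
    """
    k = 2 * math.pi / wavelength_m  # wavenumber
    hologram = []
    for iy in range(ny):
        y = (iy - ny / 2) * pitch_m
        row = []
        for ix in range(nx):
            x = (ix - nx / 2) * pitch_m
            field = 0j
            # Superpose a spherical wave from every scene point; the wave's
            # curvature at the hologram plane encodes that point's depth.
            for px, py, pz, amp in points:
                r = math.sqrt((x - px) ** 2 + (y - py) ** 2 + pz ** 2)
                field += amp * cmath.exp(1j * k * r) / r
            row.append(cmath.phase(field))  # keep phase, discard amplitude
        hologram.append(row)
    return hologram


# Hypothetical scene: two points at different depths (20 cm and 25 cm).
scene = [(0.0, 0.0, 0.20, 1.0), (0.0002, 0.0, 0.25, 1.0)]
holo = point_source_hologram(scene, nx=64, ny=64, pitch_m=8e-6, wavelength_m=520e-9)
print(len(holo), len(holo[0]))  # 64 64
```

Because each point is stored as a wavefront with its own curvature rather than as a flat pixel, the reconstructed light converges at that point's true depth, which is why the eye has to refocus to see it sharply. Note that the triple loop touches every (pixel, point) pair, which hints at the compute cost discussed later.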

Better AR experiences with holographic display technology

Holographic display promises to provide a far better AR experience in a range of use cases. AR gaming is practically synonymous with Pokémon Go, and it’s a good example of how holographic display and CGH will lead to a better user experience as AR gaming shifts from using the smartphone as a ‘lens’ to wearable devices such as headsets.

Developers will be able to populate real-world environments with more realistic 3D holographic imagery and characters that consumers can interact with seamlessly. For example, NPCs (non-player characters) will look like part of the real-world environment rather than 2D images that simply ‘pop up’. More importantly, because holographic display does not produce the VAC, players will be able to play for extended periods without feeling fatigued or nauseated.

The realistic depth of field enabled by holographic display will also be critical in making AR viable for industrial and medical applications. In any task requiring close-quarters physical precision, whether operating on a complex machine or on a human, our ability to focus on objects is essential. A virtual overlay that appears dislocated from the environment will only get in the way and increase the risk of error. Again, these AR devices must not cause eye fatigue during long sessions (some surgeries last in excess of 10 hours, for example); otherwise, adoption will fail.

Rethinking the hardware requirements for holographic display

Holographic display offers a far more natural AR experience than stereoscopy. However, there’s a downside: generating holographic images through CGH is incredibly compute-intensive by comparison. While the technology to generate and display such images exists today, conventional approaches need far more compute performance than is ever likely to be available in a head-mounted or handheld device.
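A back-of-the-envelope estimate shows why. The textbook point-source method (a generic approach, not VividQ's) evaluates one spherical wave for every pair of hologram pixel and scene point, for every colour channel, every frame. The workload below (a 1920×1080 hologram, 10,000 scene points, 60 fps, three colour channels) is a hypothetical example, not a figure from this article.

```python
def naive_cgh_evaluations_per_second(width_px: int, height_px: int,
                                     num_points: int, fps: int,
                                     colour_channels: int = 1) -> int:
    """Spherical-wave evaluations per second for the naive point-source
    method: one per (hologram pixel, scene point) pair, per colour, per frame."""
    return width_px * height_px * num_points * fps * colour_channels


# Hypothetical workload: 1080p hologram, 10,000 scene points, 60 fps, RGB.
evals = naive_cgh_evaluations_per_second(1920, 1080, 10_000, 60, colour_channels=3)
print(f"{evals:.3e} evaluations per second")  # 3.732e+12 evaluations per second
```

Each evaluation itself involves a square root, a complex exponential and a division, so the real floating-point cost is a multiple of this figure. The count also grows linearly with scene complexity, which is why algorithmic changes, rather than faster hardware alone, are needed to bring CGH to battery-powered devices.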

This challenge is why VividQ was founded: we’ve created a new set of algorithms that enable holographic display for AR in consumer devices. Using our software development kit, OEMs can build VividQ technology into their devices and take on a variety of AR applications. The result is fast, scalable, high-quality holographic display with real-time hologram generation for AR applications.

VividQ algorithms go a long way toward solving the performance challenge by taking a completely different, bottom-up approach to calculating holographic content. Over the last few years, we’ve progressed from very basic monochrome holograms running on a fairly chunky desktop GPU to an AR wearable prototype, built on our software, that delivers photorealistic image quality.

However, until recently this was still a tethered, PC-driven solution, hardly portable. The crucial next step was to bring our software to the low-power chips and SoCs used in untethered AR wearables and smartphones, so consumers could take VividQ technology with them. That’s where our partnership with Arm comes in.

Our partnership with Arm

We believe that holographic display holds the key to enabling more realistic and immersive AR experiences in a wide range of consumer and industrial applications. Collaborating with Arm gives us access to the support, computational resources and applications we need to develop for mobile devices ranging from today’s smartphones to the wearable medical devices of the future.

The ubiquity of Arm IP among mass-market consumer electronics means we can accelerate the adoption and scalability of holographic display by bringing VividQ’s software to the Arm Mali GPUs found in many current smartphones. It means that in the near future, we will be able to deliver greatly improved AR experiences to mobile platforms and—we believe—solve a crucial challenge in enabling AR technology to fulfil its true potential.


Any re-use permitted for informational and non-commercial or personal use only.