Cloud AI, Edge AI, Endpoint AI. What’s the Difference?

Today, the majority of what we call artificial intelligence (AI) is machine learning (ML), a subset of AI that involves machines learning from sets of data. In general, the greater the amount of data to learn from, the more the AI is able to infer meaning and the more useful it becomes.

Hundreds of thousands of gigabytes of data are generated every day in AI applications ranging from consumer devices to healthcare, logistics to smart manufacturing. With this much data generated, the key consideration is where that data should be processed.

Arm defines three categories within the compute spectrum: cloud, edge and endpoint. We can employ ML to process data within each of these, but choosing the most suitable category isn’t as simple as going where the most compute performance is. Performance is only one of the factors that determine whether the insights inferred from data remain useful.

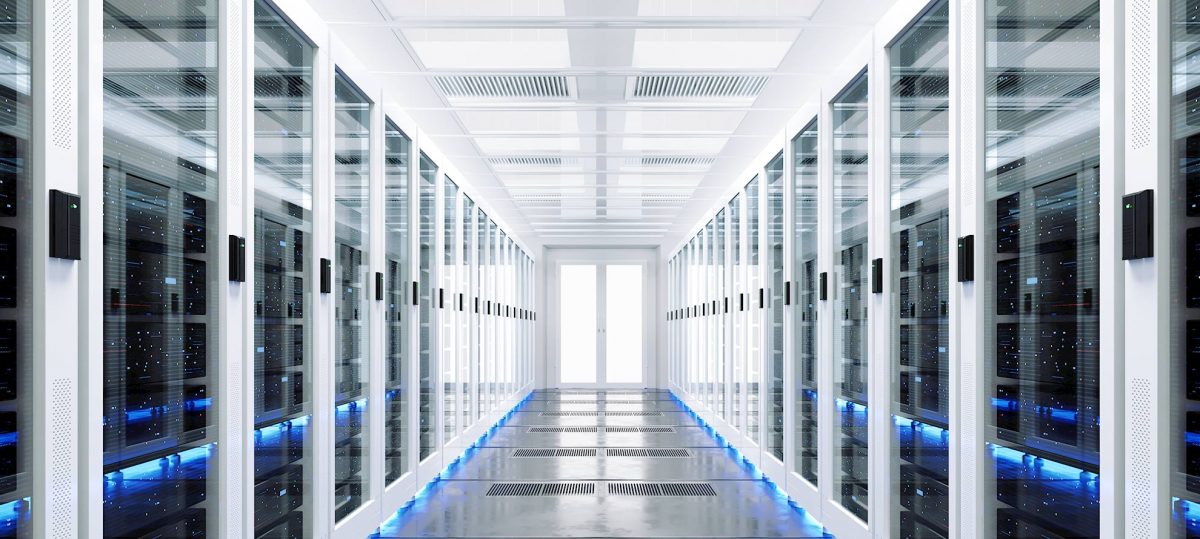

What is Cloud AI?

Cloud AI refers to AI processing within powerful cloud data centers. For a long time, cloud AI was the obvious choice of compute platform to crunch enormous amounts of data. Were it not for the concept of shunting data from the edge and endpoint into cloud servers for hyper-efficient processing, AI would not be at the stage of maturity it enjoys today.

It’s likely the majority of AI heavy lifting will always be performed in the cloud due to its reliability, cost-effectiveness and concentration of compute, especially when it comes to training ML algorithms on historical data that doesn’t require an urgent response. Many consumer smart devices rely on the cloud for their ‘intelligence’: for example, today’s smart speakers give the illusion of on-device intelligence, yet the only on-device AI they are capable of is listening out for the trigger word (‘keyword spotting’).

Cloud AI is undisputed in its ability to solve complex problems using ML. Yet as ML’s use cases grow to include many mission-critical, real-time applications, these systems will live or die on how quickly decisions can be made. And when data has to travel thousands of miles from device to data center, there’s no guarantee that by the time it has been received, computed and responded to it will still be useful.

Applications such as safety-critical automation, vehicle autonomy, medical imaging and manufacturing all demand a near-instant response to data that’s mere milliseconds old. The latency introduced in asking the cloud to process that weight of data would in many cases reduce its value to zero.
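To put rough numbers on this, here is a minimal sketch of a latency budget. It assumes that light in optical fiber covers roughly 200 km per millisecond and ignores queuing, routing and protocol overhead, so real round trips will be slower. The function names, distances and the 10 ms deadline are hypothetical values chosen for illustration, not figures from Arm.

```python
# Rough latency budget for sending data to a remote server and back.
# Assumption: light in optical fiber travels roughly 200 km per millisecond
# (about two-thirds of its speed in a vacuum). Network overhead is ignored.
FIBER_KM_PER_MS = 200.0


def round_trip_ms(distance_km: float, processing_ms: float = 0.0) -> float:
    """Propagation delay there and back, plus time spent computing."""
    return 2 * distance_km / FIBER_KM_PER_MS + processing_ms


def meets_deadline(distance_km: float, processing_ms: float, deadline_ms: float) -> bool:
    """True if a response can arrive before the data stops being useful."""
    return round_trip_ms(distance_km, processing_ms) <= deadline_ms


# Hypothetical scenario: a 10 ms deadline and 2 ms of compute in both cases.
cloud_ok = meets_deadline(distance_km=3000, processing_ms=2, deadline_ms=10)
edge_ok = meets_deadline(distance_km=5, processing_ms=2, deadline_ms=10)

print(round_trip_ms(3000, 2))  # 32.0
print(cloud_ok, edge_ok)       # False True
```

Even in this best case, a data center 3,000 km away spends 30 ms on propagation alone, which already exceeds the deadline before any compute takes place.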

It’s for this reason that many companies are now looking past the cloud to processing AI elsewhere in the compute infrastructure, moving compute nearer the data.

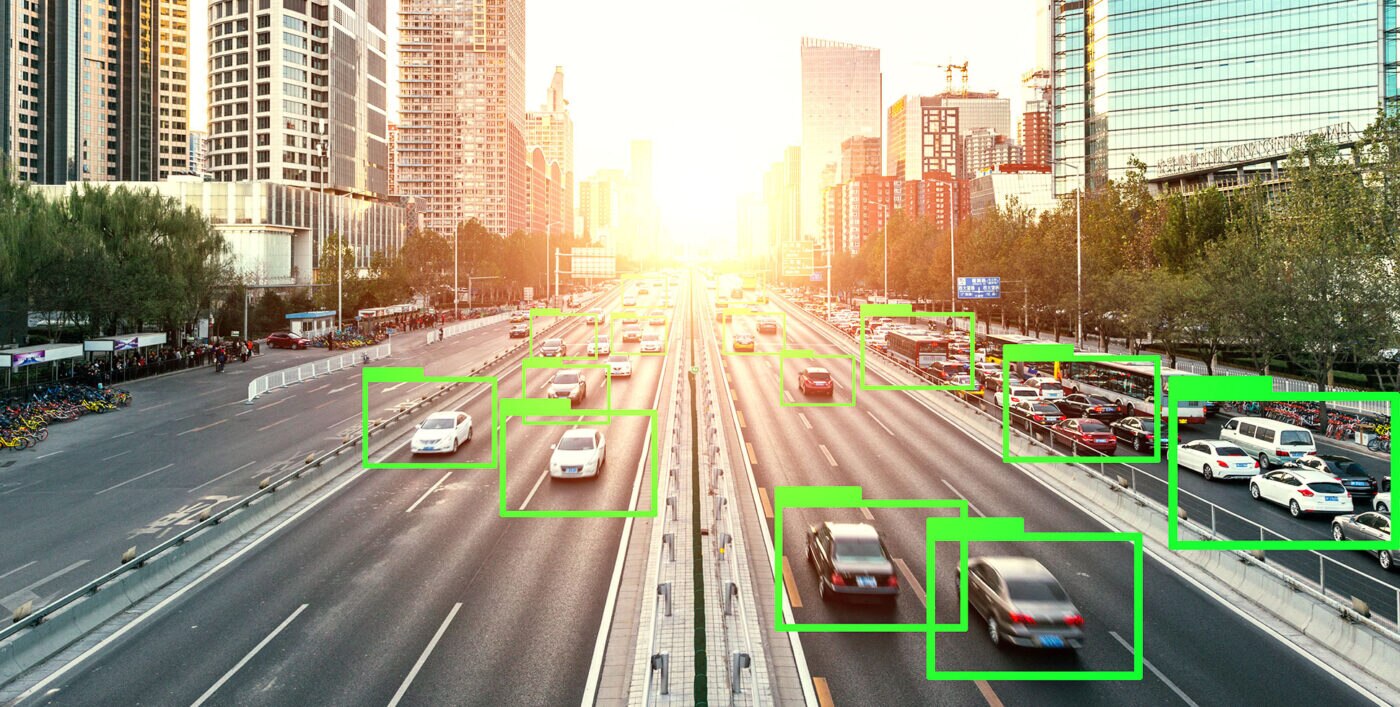

What is Edge AI?

In a world where data’s time to value or irrelevancy may be measured in milliseconds, the latency introduced in transferring data to the cloud threatens to undermine many of the most compelling use cases of the Internet of Things (IoT).

Edge AI moves AI and ML processing from the cloud to powerful servers at the edge of the network, in locations such as offices, 5G base stations and other physical sites very near to their connected endpoint devices. By moving AI compute closer to the data, we cut latency to a negligible level and retain far more of that data’s value.

Basic devices such as network bridges and switches have given way to powerful edge servers that add data center-level hardware into the gateway between endpoint and cloud. These powerful new AI-enabled edge servers, driven by new platforms such as Arm Neoverse, are designed to increase compute while decreasing power consumption, creating massive opportunities to instrument our cities, factories, farms, and environment to improve efficiency, safety, and productivity.

Edge AI has the potential to benefit both the data and the network infrastructure itself. At a network level, it could be used to analyze the flow of data for network prediction and network function management. Enabling edge AI to make decisions over the data itself, meanwhile, offers significantly reduced backhaul to the cloud, negligible latency and improved security, reliability and efficiency across the board.
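As a rough illustration of how deciding over data at the edge can reduce backhaul, the sketch below condenses a window of raw readings into a small summary and forwards only that summary, plus any out-of-range values, to the cloud. The function name, thresholds and readings are hypothetical, and a real edge server would likely run an ML model rather than a fixed range check.

```python
from statistics import mean


def summarize_at_edge(readings, low, high):
    """Reduce a window of raw readings to a summary plus any out-of-range values."""
    alerts = [r for r in readings if r < low or r > high]
    return {
        "count": len(readings),
        "mean": mean(readings),
        "min": min(readings),
        "max": max(readings),
        "alerts": alerts,
    }


# Hypothetical window of temperature readings collected at the edge.
window = [20.0, 21.0, 19.0, 35.0, 20.0]
summary = summarize_at_edge(window, low=10.0, high=30.0)

print(summary["mean"])    # 23.0
print(summary["alerts"])  # [35.0]
```

The summary stays the same size however many readings the window holds, so the saving grows with the sampling rate.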

Another key function of edge AI is sensor fusion: combining the data from multiple sensors to create complex pictures of a process, environment or situation. Consider an edge AI device in an industrial application, tasked with combining data from multiple sensors within a factory to predict when mechanical failure might occur. This edge AI device must learn the interplay between each sensor and how one might affect the other and apply this learning in real-time.
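The sketch below shows one simple way to fuse readings from several sensors into a single score. It is a stand-in for the learned model described above, not Arm's method: the sensor names and baseline values are hypothetical, and the score is the root mean square of each sensor's deviation from its baseline, measured in standard deviations.

```python
from math import sqrt

# Hypothetical per-sensor baselines as (mean, standard deviation), for example
# estimated from historical readings of a healthy machine.
BASELINES = {
    "vibration_mm_s": (2.0, 0.5),
    "temperature_c": (60.0, 5.0),
    "current_a": (10.0, 1.0),
}


def fused_anomaly_score(reading: dict) -> float:
    """Combine per-sensor deviations into one score (root mean square of z-scores)."""
    z_squared = []
    for name, (mu, sigma) in BASELINES.items():
        z = (reading[name] - mu) / sigma
        z_squared.append(z * z)
    return sqrt(sum(z_squared) / len(z_squared))


healthy = {"vibration_mm_s": 2.0, "temperature_c": 60.0, "current_a": 10.0}
drifting = {"vibration_mm_s": 3.0, "temperature_c": 70.0, "current_a": 12.0}

print(fused_anomaly_score(healthy))   # 0.0
print(fused_anomaly_score(drifting))  # 2.0
```

Because the score pools every sensor, several sensors drifting together push it higher than one sensor drifting by the same amount on its own. A production system would learn how the sensors interact instead of treating them as independent.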

There’s also a key security and resilience benefit in moving sensitive data no further than the edge: The more data we move to a centralized location, the more opportunities arise for that data’s integrity to be compromised. As the nature of compute changes, the edge is playing an increasingly crucial role in supporting diverse systems with a range of power and performance requirements. To deliver on service level agreements at scale for enterprises, the edge must embrace cloud-native software principles.

Arm is enabling this through Project Cassini, an open, collaborative, standards-based initiative to deliver a cloud-native software experience across a secure Arm edge ecosystem.

What is Endpoint AI?

Arm defines endpoint devices as physical devices connected to the network edge, from sensors to smartphones and beyond. As so much data is generated at the endpoint, we can maximize the insight we gain from that data by empowering endpoint devices to think for themselves and process what they collect without moving that data anywhere.

Due to their powerful internal hardware, smartphones have long been a fertile test-bed for endpoint AI. A smartphone camera is a prime example: it’s gone from something that takes grainy selfies to being secure enough for biometric authentication and powerful enough for computational photography – adding background blur (or a pair of bunny ears) to selfies in real-time.

This technology is now finding its way into smaller IoT devices. You may hear it referred to as the ‘AIoT’. In February 2020, Arm announced its solution for adding AI into even the smallest Arm-powered IoT devices. The Arm Cortex-M55 CPU and Arm Ethos-U55 micro neural processing unit (microNPU) combine to boost the ML performance of Arm-based IoT solutions by nearly 500 times, while retaining the trademark energy-efficient, cost-effective benefits our technology is known for. This technology will help to bring the benefits of Arm-powered compute to the IoT’s most challenging environments.

TinyML is an emerging sub-field of Endpoint AI, or AIoT, that enables ML processing in some of the very smallest endpoint devices containing microcontrollers no bigger than a grain of rice and consuming mere milliwatts of power.

Of course, endpoint AI also has its limitations: these devices are far more constrained in terms of performance, power and storage than edge AI and cloud AI devices. Data collected by one endpoint AI sensor can also have limited value on its own, as without the ‘top-down’ view of other data streams that sensor fusion at the edge enables, it is harder to see the full picture.

A combined, secure approach

Cloud AI, Edge AI and Endpoint AI each have their strengths and limitations. Arm’s range of heterogeneous compute IP spans the complete compute spectrum, so whatever your AI workload, Arm has a solution that lets it be processed efficiently by putting intelligent compute power where it makes the most sense.

Most importantly, Arm technology ensures that data used in AI processing remains secure, from cloud to edge to endpoint. The Arm Platform Security Architecture (PSA) provides a platform, based on industry best-practice, that enables security to be consistently designed in at both a hardware and firmware level, while PSA Certified assures device manufacturers that their IoT devices are built secure. Within Arm processors, Arm TrustZone security technology simplifies IoT security and offers the ideal platform on which to build a device that adheres to PSA principles.

Powering innovation through AI

AI is empowering change, driving innovation, and creating exciting new possibilities. Arm is forging a path to the future with solutions designed to support the rapid development of AI. Discover how Arm combines the hardware, software, tools, and strategic partners you need to accelerate development.

Any re-use permitted for informational and non-commercial or personal use only.